Crawl a private network using a web crawler on Elastic Cloud

Since version 7.16.1, the App Search web crawler can crawl content on a private network if the content is accessible through an HTTP proxy.

This document explains how to configure the web crawler to crawl a private network by walking through a detailed example.

Although the App Search web crawler is hosted on Elastic Cloud, an HTTP proxy gives it access to protected resources in private networks and other non-public environments.

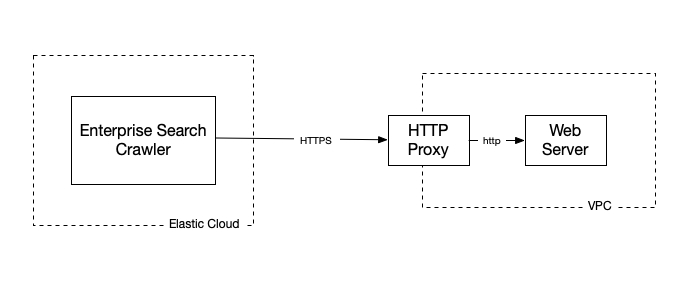

Infrastructure overview

editTo demonstrate the authenticated proxy server functionality for App Search web crawler, we will use the infrastructure outlined in this schematic diagram:

This example has all the components needed to understand the use case:

- A VPC (virtual private cloud) deployed within Google Cloud. The VPC contains two cloud instances, web-server and proxy:
  - The web-server instance hosts a private website that is only accessible from within the VPC, using the private domain name http://marlin-docs.internal.
  - The proxy instance runs a proxy server with basic HTTP authentication, using the username proxyuser and the password proxypass. It is accessible from the public internet using the host name proxy.example.com and the port number 3128.
- An Enterprise Search deployment running in Elastic Cloud, outside of the VPC. This deployment has no access to the private network hosting our content.

The App Search web crawler supports both HTTP and HTTPS connections to the proxy. However, we recommend configuring TLS on the proxy to protect your content and proxy server credentials.

Refer to your proxy server software documentation for more details on how to set this up.

Testing proxy connections

Before changing your Enterprise Search deployment configuration to use the HTTP proxy described above, make sure the proxy works and allows access to the private website.

You can configure your web browser to use the proxy and then try to access the private website. Alternatively, use the following command to fetch the site's home page through the proxy:

curl --head --proxy https://proxyuser:proxypass@proxy.example.com:3128 http://marlin-docs.internal

This command sends a HEAD request to the website through the proxy.

The response should be an HTTP 200 status along with a set of response headers.

Here is an example response:

HTTP/1.1 200 OK
Content-Type: text/html
Content-Length: 42337
Accept-Ranges: bytes
Server: nginx/1.14.2
Date: Tue, 30 Nov 2021 19:19:14 GMT
Last-Modified: Tue, 30 Nov 2021 17:57:39 GMT
ETag: "61a66613-a561"
Age: 4
X-Cache: HIT from test-proxy
X-Cache-Lookup: HIT from test-proxy:3128
Via: 1.1 test-proxy (squid/4.6)
Connection: keep-alive
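If you want to script this check instead of reading the headers by eye, the following minimal Python sketch parses a single response head like the one above (the text printed by curl --head) and reports whether the site answered 200 through the proxy. The function names are hypothetical, and treating a Via header as evidence of the proxy hop is an assumption: Squid adds one by default, as the sample response shows, but other proxy software may not.

```python
from __future__ import annotations


def parse_head_response(raw: str) -> tuple[int, dict[str, str]]:
    """Split one HTTP response head (as printed by `curl --head`) into a
    status code and a dict of headers keyed by lowercased name."""
    lines = [line for line in raw.splitlines() if line.strip()]
    if not lines:
        raise ValueError("empty response")
    # The first line is the status line, for example "HTTP/1.1 200 OK".
    parts = lines[0].split()
    if len(parts) < 2 or not parts[1].isdigit():
        raise ValueError(f"not an HTTP status line: {lines[0]!r}")
    headers: dict[str, str] = {}
    for line in lines[1:]:
        # Split on the first colon only: values such as dates contain colons.
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    return int(parts[1]), headers


def reached_site_through_proxy(raw: str) -> bool:
    """True if the site answered 200 and the response carries a Via header.

    Assumes the proxy adds a Via header (Squid does by default, as in the
    sample response); other proxy software may not.
    """
    status, headers = parse_head_response(raw)
    return status == 200 and "via" in headers


if __name__ == "__main__":
    sample = (
        "HTTP/1.1 200 OK\r\n"
        "Server: nginx/1.14.2\r\n"
        "X-Cache-Lookup: HIT from test-proxy:3128\r\n"
        "Via: 1.1 test-proxy (squid/4.6)\r\n"
        "\r\n"
    )
    print(reached_site_through_proxy(sample))  # True
```

A 407 status line instead of 200 would typically point to wrong proxy credentials rather than a problem with the private website.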

We have confirmed that our proxy credentials and connection parameters are correct, and can now change the Enterprise Search configuration.

Configuring Enterprise Search

To prepare our Enterprise Search deployment to use the HTTP proxy for all web crawler operations, we need to add the following user settings:

crawler.http.proxy.host: proxy.example.com
crawler.http.proxy.port: 3128
crawler.http.proxy.protocol: https
crawler.http.proxy.username: proxyuser
crawler.http.proxy.password: proxypass

When this configuration is added, the deployment will perform a graceful restart. You can find detailed instructions on how to work with custom configurations in our official Elastic Cloud documentation.
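The five crawler.http.proxy.* settings correspond to the parts of the proxy URL used with curl --proxy during testing. If you keep the proxy details as a single URL, a small helper can derive the settings from it so the tested URL and the deployment configuration do not drift apart. This is a minimal Python sketch; proxy_settings and to_user_settings are hypothetical helper names. It assumes the username and password settings take the plain (percent-decoded) values, and it does not YAML-quote values.

```python
from __future__ import annotations

from urllib.parse import unquote, urlsplit


def proxy_settings(proxy_url: str) -> dict[str, str]:
    """Derive the crawler.http.proxy.* user settings from a proxy URL of the
    form scheme://user:password@host:port (hypothetical helper)."""
    parts = urlsplit(proxy_url)
    if parts.scheme not in ("http", "https"):
        raise ValueError(f"unsupported proxy scheme: {parts.scheme!r}")
    # parts.port raises ValueError itself if the port is not a valid number.
    if parts.hostname is None or parts.port is None:
        raise ValueError("proxy URL needs a host and an explicit port")
    settings = {
        "crawler.http.proxy.host": parts.hostname,
        "crawler.http.proxy.port": str(parts.port),
        "crawler.http.proxy.protocol": parts.scheme,
    }
    # Credentials in a URL are percent-encoded; this sketch assumes the
    # settings want the decoded values.
    if parts.username is not None:
        settings["crawler.http.proxy.username"] = unquote(parts.username)
    if parts.password is not None:
        settings["crawler.http.proxy.password"] = unquote(parts.password)
    return settings


def to_user_settings(settings: dict[str, str]) -> str:
    """Render settings as `key: value` lines. Values are not YAML-quoted, so
    a password containing characters such as '#' or ': ' would need manual
    quoting."""
    return "\n".join(f"{key}: {value}" for key, value in settings.items())


if __name__ == "__main__":
    url = "https://proxyuser:proxypass@proxy.example.com:3128"
    print(to_user_settings(proxy_settings(url)))
```

Run against the example proxy URL, the script prints the same five lines as the user settings shown above, in the same order. Requiring an explicit port is a deliberate choice: a URL without one would otherwise fall back to a client-specific default rather than the proxy's actual port 3128.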

Using an HTTP proxy with basic authentication requires an appropriate Elastic subscription level. Refer to the Elastic subscriptions pages for Elastic Cloud and self-managed deployments.

Testing the solution

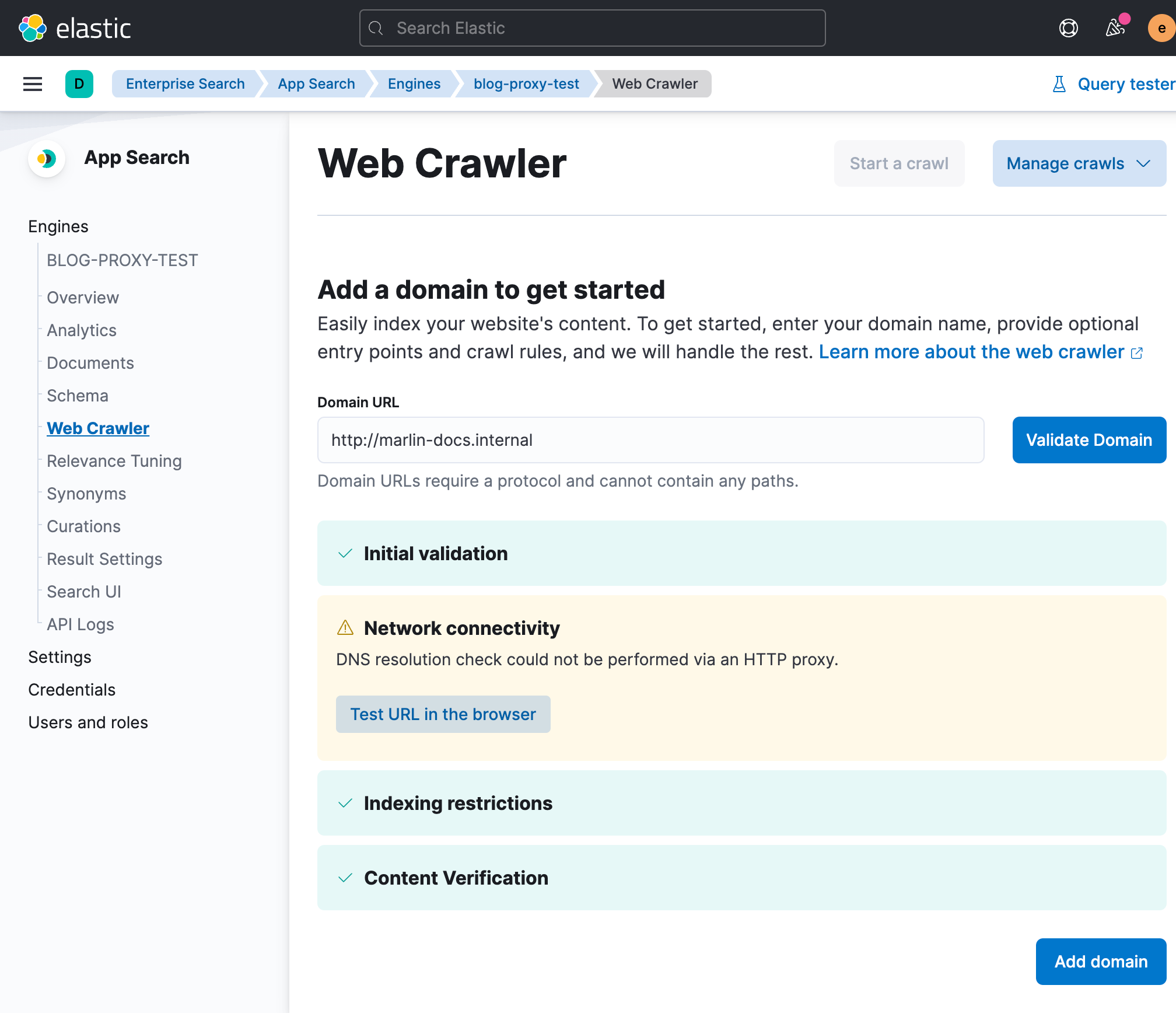

editWith the configuration complete, we can now test the solution. Create an App Search engine and use the Web Crawler feature to ingest content into the engine:

When a proxy is configured, the validation process for adding a domain to the App Search web crawler configuration skips a number of networking-related checks, because these checks do not work through a proxy, and shows a warning instead. If you do not see the warning in the image above, check your deployment configuration to ensure you have configured the proxy correctly.

With our private domain added to the configuration, we can start the crawl, and the content should be ingested into the engine. If there are failures, check the crawler logs and the proxy server logs for clues about what might be going wrong.

If you are using a Squid proxy server, the logs should look like this:

1638298043.202 1 99.250.74.78 TCP_MISS/200 65694 GET http://marlin-docs.internal/docs/gcode/M951.html proxyuser HIER_DIRECT/10.188.0.2 text/html
1638298045.286 1 99.250.74.78 TCP_MISS/200 64730 GET http://marlin-docs.internal/docs/gcode/M997.html proxyuser HIER_DIRECT/10.188.0.2 text/html
1638298045.373 1 99.250.74.78 TCP_MISS/200 63609 GET http://marlin-docs.internal/docs/gcode/M999.html proxyuser HIER_DIRECT/10.188.0.2 text/html
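On a large crawl, scanning these entries by hand gets tedious. The following minimal Python sketch parses lines in Squid's default native access.log format (timestamp, elapsed milliseconds, client address, result code/status, bytes, method, URL, user, hierarchy/peer, content type) and picks out requests that did not succeed. It assumes the default native format; a custom logformat directive would need a different parser. The class and function names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class SquidEntry:
    """One line of Squid's native access.log format."""
    timestamp: datetime
    elapsed_ms: int
    client: str
    result_code: str  # e.g. TCP_MISS, TCP_DENIED
    status: int  # HTTP status sent to the client
    size: int  # bytes sent to the client
    method: str
    url: str
    user: str  # authenticated proxy user, or "-"
    hierarchy: str  # e.g. HIER_DIRECT
    peer: str  # address the proxy forwarded to, or "-"
    content_type: str


def parse_squid_line(line: str) -> SquidEntry:
    """Parse one whitespace-separated native-format log line."""
    fields = line.split()
    if len(fields) != 10:
        raise ValueError(f"expected 10 fields, got {len(fields)}: {line!r}")
    ts, elapsed, client, code_status, size, method, url, user, hier_peer, ctype = fields
    result_code, _, status = code_status.partition("/")
    hierarchy, _, peer = hier_peer.partition("/")
    return SquidEntry(
        timestamp=datetime.fromtimestamp(float(ts), tz=timezone.utc),
        elapsed_ms=int(elapsed),
        client=client,
        result_code=result_code,
        status=int(status),
        size=int(size),
        method=method,
        url=url,
        user=user,
        hierarchy=hierarchy,
        peer=peer,
        content_type=ctype,
    )


def problem_requests(lines: list[str]) -> list[SquidEntry]:
    """Entries whose status is not 2xx or 3xx, for example TCP_DENIED/407,
    which Squid typically logs when proxy authentication fails."""
    entries = [parse_squid_line(line) for line in lines if line.strip()]
    return [e for e in entries if not 200 <= e.status < 400]
```

Feeding it the three entries above returns an empty list, since every request was a TCP_MISS/200 served by the private web server (HIER_DIRECT/10.188.0.2) for proxyuser.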