Web crawler reference

editWeb crawler reference

editSee Web crawler for a guided introduction to the App Search web crawler.

Refer to the following sections for terms, definitions, tables, and other detailed information.

- Crawl

- Content inclusion and exclusion

- Content deletion

- Domain

- Entry point

- Canonical URL link tag

- Robots meta tags

- Meta tags and data attributes to extract custom fields

- Nofollow link

- Crawl status

- Partial crawl

- Process crawl

- HTTP authentication

- HTTP proxy

- Web crawler schema

- Web crawler events logs

- Web crawler configuration settings

Crawl

editA crawl is a process, associated with an engine, by which the web crawler discovers web content, and extracts and indexes that content into the engine as search documents.

During a crawl, the web crawler stays within user-defined domains, starting from specific entry points, and it discovers additional content according to crawl rules. Operators and administrators manage crawls through the App Search dashboard.

Each crawl also respects instructions within robots.txt files and sitemaps, and instructions embedded within HTML files using canonical link tags, robots meta tags, and nofollow links.

Operators can monitor crawls using the ID and status of each crawl, and using the web crawler events logs.

Each engine may run one active crawl at a time, but multiple engines can run crawls concurrently.

By default, each re-crawl is a full crawl. Partial crawls may be requested through the API.

Content discovery

editTo begin each crawl, the web crawler populates a crawl queue with the entry points for the crawl and the URLs provided by any sitemaps. It then begins fetching and parsing each page in the queue. The crawler handles each page according to its HTTP response status code.

As the web crawler discovers additional URLs, it uses the crawl rules from the crawl configuration, the directives from any robots.txt files, and instructions embedded within the content to determine which of those URLs it is allowed to crawl. The crawler adds the allowed URLs to the crawl queue.

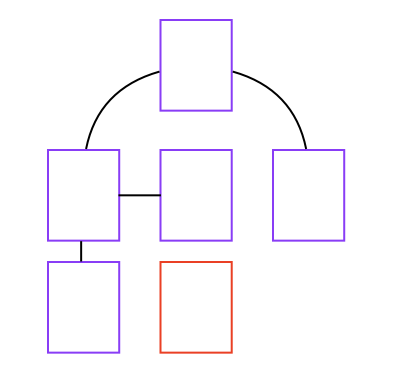

If a page is not linked from other pages, the web crawler will not discover it. Refer to the web of pages in the following image. The crawler will not discover the page outlined in red, unless an admin adds its URL as an entry point for the domain or includes the URL in a sitemap:

The web crawler continues fetching and adding to the crawl queue until the URL queue is empty, the crawler hits a resource limit, or the crawl fails unexpectedly.

The crawler logs detailed events while it crawls, which allow monitoring and auditing of the content discovery process.

Crawl state

editEach crawl persists its state to Elasticsearch, allowing the crawl to resume after a restart or shutdown.

The persisted data includes:

- Crawl URL queue: A list of all outstanding URLs the crawler needs to visit before the end of the crawl.

- Seen URLs list: A list of all unique URLs the crawler has seen during the crawl. The crawler uses this list to avoid visiting the same page twice.

Crawl state indexes are automatically removed at the end of each crawl. The name of each of these indexes includes the ID of the corresponding crawl request. You can use this information to clean up disk space if the automated cleanup process fails for any reason.

Depending on the size of your site, crawl state indexes may become large. Each page you have will generate a record in at least one of those indexes, meaning sites with millions of pages will generate indexes with millions of records.

Monitor disk space on your Elasticsearch clusters, and ensure your deployment has enough disk space to avoid stability issues.

Content extraction and indexing

editContent extraction is the process where the web crawler transforms a web (HTML or binary) document into a search document. And indexing is the process where the crawler puts the search document into an App Search engine for searching.

During content extraction, the web crawler handles duplicate documents. The crawler indexes duplicate web documents as a single search document.

The web crawler extracts each web document into a predefined schema before indexing it as a search document. Custom field extraction is supported via meta tags and attributes.

During a crawl, the web crawler uses HTTP response status codes and robots meta tags to determine which documents it is allowed to index.

Binary content extraction

editBinary content extraction is a technical preview feature. Technical preview features are subject to change and are not covered by the support SLA of general release (GA) features. Elastic plans to promote this feature to GA in a future release.

Since 8.3.0, the web crawler can extract content from downloadable binary files, such as PDF and DOCX files. To use this feature, you must:

- Install the Ingest Attachment Processor plugin on all nodes of your Elasticsearch cluster.

-

Enable binary content extraction with the configuration:

crawler.content_extraction.enabled: true. -

Select which MIME types should have their contents extracted. For example:

crawler.content_extraction.mime_types: ["application/pdf", "application/msword"].-

The MIME type is determined by the HTTP response’s

Content-Typeheader when downloading a given file. - While intended primarily for PDF and Microsoft Office formats, you can use any of the supported formats documented by Apache Tika.

-

No default

mime_typesare defined. You must configure at least one MIME type in order to extract non-HTML content.

-

The MIME type is determined by the HTTP response’s

The ingest attachment processor does not support compressed files, e.g., an archive file containing a set of PDFs. Expand the archive file and make individual uncompressed files available for the web crawler to process.

Enterprise Search uses an Elasticsearch ingest pipeline

to power the web crawler’s binary content extraction.

This pipeline, named ent_search_crawler, is automatically created when Enterprise Search first starts if:

- the Ingest Attachment Processor plugin has been installed on your Elasticsearch cluster

- Enterprise Search is configured to enable binary content extraction

You can view and update this pipeline in Kibana or with Elasticsearch APIs.

If you make changes to the default ent_search_crawler ingest pipeline, these will not be overwritten when you upgrade Enterprise Search.

Therefore, we recommend comparing the default ent_search_crawler pipeline that comes with the newer version — to determine if you need to

incorporate the latest changes into your customized pipeline.

Duplicate document handling

editBy default, the web crawler identifies groups of duplicate web documents and stores each group as a single App Search document within your engine.

Within the App Search document, the fields url and additional_urls represent all the URLs where the web crawler discovered the document’s content (or a sample of URLs if more than 100).

The url field represents the canonical URL, which you can explicitly manage using canonical URL link tags.

The crawler identifies duplicate content intelligently, ignoring insignificant differences such as navigation, whitespace, style, and scripts. More specifically, the crawler combines the values of specific fields, and it hashes the result to create a unique "fingerprint" to represent the content of the web document.

The web crawler then checks your engine for an existing document with the same content hash. If it doesn’t find one, it saves a new document to the engine. If it does exist, the crawler updates the existing document instead of saving a new one. The crawler adds to the document the additional URL at which the content was discovered.

You can manage which fields the web crawler uses to create the content hash. You can also disable this feature and allow duplicate documents.

Manage these settings for each domain within the web crawler UI. See Manage duplicate document handling.

Set the default fields for all domains using the following configuration setting: crawler.extraction.default_deduplication_fields.

Content inclusion and exclusion

editInject HTML data attributes into your web pages to instruct the web crawler to include or exclude particular sections from extracted content. For example, use this feature to exclude navigation and footer content when crawling, or to exclude sections of content only intended for screen readers.

For all pages that contain HTML tags with a data-elastic-include attribute, the crawler will only index content within those tags.

For all pages that contain HTML tags with a data-elastic-exclude attribute, the crawler will skip those tags from content extraction. You can nest data-elastic-include and data-elastic-exclude tags, too.

The web crawler will still crawl any links that appear inside excluded sections as long as the configured crawl rules allow them.

A simple content exclusion rule example:

<body> <p>This is your page content, which will be indexed by the App Search web crawler. <div data-elastic-exclude>Content in this div will be excluded from the search index</div> </body>

In this more complex example with nested exclusion and inclusion rules, the web crawler will only extract "test1 test3 test5 test7" from the page.

<body>

test1

<div data-elastic-exclude>

test2

<p data-elastic-include>

test3

<span data-elastic-exclude>

test4

<span data-elastic-include>test5</span>

</span>

</p>

test6

</div>

test7

</body>

Content deletion

editThe web crawler must also delete documents from an engine to keep its documents in sync with the corresponding web content.

During a crawl, the web crawler uses HTTP response status codes to determine which documents to delete. However, this process cannot delete stale documents in the engine that are no longer linked to on the web.

Therefore, at the conclusion of each crawl, the web crawler begins an additional "purge" crawl, which fetches URLs that exist in the engine, but have not been seen on the web during recent crawls.

The crawler deletes from the engine all documents that respond with 4xx and 3xx responses during the phase.

You can purge documents on demand by using a process crawl. A process crawl removes from your engine all documents that are no longer allowed by your crawl rules.

Domain

editA domain is a website or other internet realm that is the target of a crawl. Each domain belongs to a crawl, and each crawl has one or more domains.

See Manage domains to manage domains for a crawl.

A crawl always stays within its domains when discovering content. It cannot discover and index content outside of its domains.

Each domain has a domain URL that identifies the domain using a protocol and hostname. The domain URL must not include a path.

Each unique combination of protocol and hostname is a separate domain. Each of the following is its own domain:

-

http://example.com -

https://example.com -

http://www.example.com -

https://www.example.com -

http://shop.example.com -

https://shop.example.com

Each domain has:

- One or more entry points

- One or more crawl rules

- Zero or one robots.txt files

- Zero or more sitemaps

Entry point

editAn entry point is a path within a domain that serves as a starting point for a crawl. Each entry point belongs to a domain, and each domain has one or more entry points.

See Manage entry points to manage entry points for a domain.

Use entry points to instruct the crawler to fetch URLs it would otherwise not discover. For example, if you’d like to index a page that isn’t linked from other pages, add the page’s URL as an entry point.

Ensure the entry points for each domain are allowed by the domain’s crawl rules, and the directives within the domain’s robots.txt file. The web crawler will not fetch entry points that are disallowed by crawl rules or robots.txt directives.

You can also inform the crawler of URLs using sitemaps.

Sitemap

editA sitemap is an XML file, associated with a domain, that informs web crawlers about pages within that domain. XML elements within the sitemap identify specific URLs that are available for crawling.

These elements are similar to entry points. You can therefore choose to submit URLs to the App Search web crawler using sitemaps, entry points, or a combination of both.

You may prefer using sitemaps over entry points for any of the following reasons:

- You have already been publishing sitemaps for other web crawlers.

- You don’t have access to the web crawler UI.

- You prefer the sitemap file interface over the web crawler web interface.

Use sitemaps to inform the web crawler of pages you think are important, or pages that are isolated and not linked from other pages. However, be aware the web crawler will visit only those pages from the sitemap that are allowed by the domain’s crawl rules and robots.txt file directives.

Sitemap discovery and management

editTo add a sitemap to a domain, you can specify it within a robots.txt file. At the start of each crawl, the web crawler fetches and processes each domain’s robots.txt file and each sitemap specified within those robots.txt files.

See Manage sitemaps to manage sitemaps for your domains.

Sitemap format and technical specification

editThe sitemaps standard defines the format and technical specification for sitemaps. Refer to the standard for the required and optional elements, character escaping, and other technical considerations and examples.

The App Search web crawler does not process optional meta data defined by the standard. The crawler extracts a list of URLs from each sitemap and ignores all other information.

Ensure each URL within your sitemap matches the exact domain (here defined as scheme + host + port) for your site.

Different subdomains (like www.example.com and blog.example.com) and different schemes (like http://example.com and https://example.com) require separate sitemaps.

The App Search web crawler also supports sitemap index files. Refer to Using sitemap index files within the sitemap standard for sitemap index file details and examples.

Crawl rule

editA crawl rule is a crawler instruction to allow or disallow specific paths within a domain. Each crawl rule belongs to a domain, and each domain has one or more crawl rules.

See Manage crawl rules to manage crawl rules for a domain. After modifying your crawl rules, you can re-apply the rules to your existing documents without waiting for a full re-crawl. See Process crawl.

During content discovery, the web crawler discovers new URLs and must determine which it is allowed to follow.

Each URL has a domain (e.g. https://example.com) and a path (e.g. /category/clothing or /c/Credit_Center).

The web crawler looks up the crawl rules for the domain, and applies the path to the crawl rules to determine if the path is allowed or disallowed. The crawler evaluates the crawl rules in order. The first matching crawl rule determines the policy for the newly discovered URL.

Each crawl rule has a path pattern, a rule, and a policy. To evaluate each rule, the web crawler compares a newly discovered path to the path pattern, using the logic represented by the rule, resulting in a policy.

The policy for each URL is also affected by directives in robots.txt files. The web crawler will crawl only those URLs that are allowed by crawl rules and robots.txt directives.

Crawl rule logic (rules)

editThe logic for each rule is as follows:

- Begins with

-

The path pattern is a literal string except for the character

*, which is a meta character that will match anything.The rule matches when the path pattern matches the beginning of the path (which always begins with

/).If using this rule, begin your path pattern with

/. - Ends with

-

The path pattern is a literal string except for the character

*, which is a meta character that will match anything.The rule matches when the path pattern matches the end of the path.

- Contains

-

The path pattern is a literal string except for the character

*, which is a meta character that will match anything.The rule matches when the path pattern matches anywhere within the path.

- Regex

-

The path pattern is a regular expression compatible with the Ruby language regular expression engine. In addition to literal characters, the path pattern may include metacharacters, character classes, and repetitions. You can test Ruby regular expressions using Rubular.

The rule matches when the path pattern matches the beginning of the path (which always begins with

/).If using this rule, begin your path pattern with

\/or a metacharacter or character class that matches/.

Crawl rule matching

editThe following table provides examples of crawl rule matching:

| URL path | Rule | Path pattern | Match? |

|---|---|---|---|

|

Begins with |

|

YES |

|

Begins with |

|

YES |

|

Begins with |

|

NO |

|

Begins with |

|

NO |

|

Ends |

|

YES |

|

Ends |

|

YES |

|

Ends |

|

NO |

|

Ends |

|

NO |

|

Contains |

|

YES |

|

Contains |

|

NO |

|

Regex |

|

YES |

|

Regex |

|

NO |

|

Regex |

|

NO |

Crawl rule order

editThe first crawl rule to match determines the policy for the URL. Therefore, the order of the crawl rules is significant.

The following table demonstrates how crawl rule order affects the resulting policy for a path:

| Path | Crawl rules | Resulting policy |

|---|---|---|

|

1. 2. |

DISALLOW |

|

1. 2. |

ALLOW |

Restricting paths using crawl rules

editThe domain dashboard adds a default crawl rule to each domain: Allow if Regex .*.

You cannot delete or re-order this rule through the dashboard.

This rule is permissive, allowing all paths within the domain. To restrict paths, use either of the following techniques:

Add rules that disallow specific paths (e.g. disallow the blog):

| Policy | Rule | Path pattern |

|---|---|---|

|

|

|

|

|

|

Or, add rules that allow specific paths and disallow all others (e.g. allow only the blog):

| Policy | Rule | Path pattern |

|---|---|---|

|

|

|

|

|

|

|

|

|

When you restrict a crawl to specific paths, be sure to add entry points that allow the crawler to discover those paths.

For example, if your crawl rules restrict the crawler to /blog, add /blog as an entry point.

If you leave only the default entry point of /, the crawl will end immediately, since / is disallowed.

Robots.txt file

editA robots.txt file is a plain text file, associated with a domain, that provides instructions to web crawlers. The instructions within the file, also called directives, communicate which paths within that domain are disallowed (and allowed) for crawling.

The directives within a robots.txt file are similar to crawl rules. You can therefore choose to manage inclusion and exclusion through robots.txt files, crawl rules, or a combination of both. The App Search web crawler will crawl only those paths that are allowed by the crawl rules for the domain and the directives within the robots.txt file for the domain.

You may prefer using a robots.txt file over crawl rules for any of the following reasons:

- You have already been publishing a robots.txt file for other web crawlers.

- You don’t have access to the web crawler UI.

- You prefer the robots.txt file interface over the web crawler web interface.

You can also use a robots.txt file to specify sitemaps for a domain.

Robots.txt file discovery and management

editAt the start of each crawl, the web crawler fetches and processes the robots.txt file for each domain. When fetching a robots.txt file, the crawler handles 2xx, 4xx, and 5xx responses differenty. See Robots.txt file response code handling for an explanation of each response.

See Manage robots.txt files to manage robots.txt files for your domains.

Robots.txt file format and technical specification

editSee Robots exclusion standard on Wikipedia for a summary of robots.txt conventions.

Canonical URL link tag

editA canonical URL link tag is an HTML element you can embed within pages that duplicate the content of other pages. The canonical URL link tag specifies the canonical URL for that content.

The canonical URL is stored on the App Search document in the url field, while the additional_urls field contains other URLs where the crawler discovered the same content.

If your site contains pages that duplicate the content of other pages, use canonical URL link tags to explicitly manage which URL is stored in the url field of the resulting App Search document.

Template:

<link rel="canonical" href="{CANONICAL_URL}">

Example:

<link rel="canonical" href="https://example.com/categories/dresses/starlet-red-medium">

Robots meta tags

editRobots meta tags are HTML elements you can embed within pages to prevent the crawler from following links or indexing content.

Template:

<meta name="robots" content="{DIRECTIVES}">

Supported directives:

-

noindex - The web crawler will not index the page.

-

nofollow -

The web crawler will not follow links from the page (i.e. will not add links to the crawl queue). The web crawler logs a

url_discover_deniedevent for each link.The directive does not prevent the web crawler from indexing the page.

Process crawls do not honor the noindex and nofollow directives.

In versions before 8.3.0, purge crawls also do not remove previously indexed pages that now have noindex and nofollow directives.

To remove obsolete content, you can create crawl rules to exclude the pages and then run a process crawl.

Examples:

<meta name="robots" content="noindex"> <meta name="robots" content="nofollow"> <meta name="robots" content="noindex, nofollow">

Meta tags and data attributes to extract custom fields

editWith meta tags and data attributes you can extract custom fields from your HTML pages.

Template:

<head>

<meta class="elastic" name="{FIELD_NAME}" content="{FIELD_VALUE}">

</head>

<body>

<div data-elastic-name="{FIELD_NAME}">{FIELD_VALUE}</div>

</body>

The crawled document for this example

<head> <meta class="elastic" name="product_price" content="99.99"> </head> <body> <h1 data-elastic-name="product_name">Printer</h1> </body>

will include 2 additional fields.

{

"product_price": "99.99",

"product_name": "Printer"

}

You can specify multiple class="elastic" and data-elastic-name tags.

Template:

<head>

<meta class="elastic" name="{FIELD_NAME_1}" content="{FIELD_VALUE_1}">

<meta class="elastic" name="{FIELD_NAME_2}" content="{FIELD_VALUE_2}">

</head>

<body>

<div data-elastic-name="{FIELD_NAME_1}">{FIELD_VALUE_1}</div>

<div data-elastic-name="{FIELD_NAME_2}">{FIELD_VALUE_2}</div>

</body>

{FIELD_NAME} must conform to the field name rules detailed in the Documents API reference.

Additionally, the web crawler reserves the following fields:

-

additional_urls -

body_content -

domains -

headings -

last_crawled_at -

links -

meta_description -

meta_keywords -

title -

url -

url_host -

url_path -

url_path_dir1 -

url_path_dir2 -

url_path_dir3 -

url_port -

url_scheme

For compatibility with Elastic Site Search, the crawler also supports the class value swiftype on meta elements and the data attribute data-swiftype-name on elements within the body.

Nofollow link

editNofollow links are HTML links that instruct the crawler to not follow the URL.

The web crawler will not follow links that include rel="nofollow" (i.e. will not add links to the crawl queue).

The web crawler logs a url_discover_denied event for each link.

The link does not prevent the web crawler from indexing the page in which it appears.

Template:

<a rel="nofollow" href="{LINK_URL}">{LINK_TEXT}</a>

Example:

<a rel="nofollow" href="/admin/categories">Edit this category</a>

Crawl status

editEach crawl has a status, which quickly communicates its state.

See View crawl status to view the status for a crawl.

All crawl statuses:

- Pending

- The crawl is enqueued and will start after resources are available.

- Starting

- The crawl is starting. You may see this status briefly, while a crawl moves from pending to running.

- Running

- The crawl is running.

- Success

- The crawl completed without error. The web crawler may stop a crawl due to hitting resource limits. Such a crawl will report its status as success, as long as it completes without error.

- Canceling

- The crawl is canceling. You may see this status briefly, after choosing to cancel a crawl.

- Canceled

- The crawl was intentionally canceled and therefore did not complete.

- Failed

- The crawl ended unexpectedly. View the web crawler events logs for a message providing an explanation.

- Skipped

- An automatic crawl was skipped because another crawl was active at the time.

Partial crawl

editA partial crawl is a web crawl that runs with custom configuration to narrow the scope of the crawl. Configuration for a partial crawl is provided at the time of the crawl request, replacing any globally configured settings.

Partial crawls offer the user powerful customization to meet a variety of use cases. Consider an engine with the following domains and entry points:

-

http://www.awesome-recipes.com

-

/quick -

/vegetarian -

/slow-cooker

-

- http://www.awesome-workouts.com

- http://www.awesome-reflections.com

A typical crawl for this engine would crawl all three domains, initiating a url queue with seed URLs:

['http://www.awesome-recipes.com/quick', 'http://www.awesome-recipes.com/vegetarian', 'http://www.awesome-recipes.com/slow-cooker', 'http://www.awesome-workouts.com/', 'http://www.awesome-reflections.com/']

Under the http://www.awesome-workouts.com domain, the crawler discovers a sitemap and adds multiple urls to the queue.

The crawler visits each url in the queue, enqueuing additional urls as it discovers and traverses any available HTML links.

Through this process, the crawler discovers hundreds of web pages, drilling into linked content up to the default depth of 10 traversals.

While running full crawls at regular intervals account for the bulk of this engine’s maintenance, partial crawls allow an administrator to quickly visit and update specific content ad-hoc.

Scenarios include:

- Efficiently update new content linked from the home pages of each domain by requesting a partial crawl with a max depth of 2.

-

Efficiently index new slow cooker recipes by augmenting the above request with a specified entry point of

http://www.awesome-recipes.com/slow-cooker. -

Update content for just the

workoutsdomain while keeping content fromrecipesandreflectionsintact with a single domain partial crawl. - Target precise content updates by setting a max depth of 1 and providing a list of URLs to visit.

- Replicate the above at scale by providing the URLs from within a sitemap.

Partial crawls further streamline a web crawl by skipping the "purge" phase and, in certain cases, ignoring sitemaps defined in robots.txt. Robots.txt sitemaps are ignored by a partial crawl if seed URLs and/or sitemap URLs are included in the partial crawl configuration.

One-time partial crawls can be configured through the UI, but recurring partial crawls should be requested through the API. For a full list of partial crawl capabilities, visit the API reference.

Process crawl

editA process crawl is an operation that re-processes the documents in your engine using the current crawl rules. Essentially, it re-applies your crawl rules to your existing documents, removing the documents whose URLs are no longer allowed by the current crawl rules.

After updating your crawl rules, you can use a process crawl to remove unwanted documents without waiting for a full re-crawl to remove them. You can request process crawls through the web crawler UI or API.

Using the UI, you can request a process crawl for all domains or a specific domain. See Re-apply crawl rules.

Using the API, you can request a process crawl for all domains or a subset of domains. See Process crawls.

It is recommended to cancel any active web crawls before requesting a process crawl. A web crawl that runs concurrently with a process crawl may continue to index fresh documents with out of date configuration; the process crawl will only apply to documents indexed at the time of the request.

Using the API, you can also complete a "dry run" process crawl. When "dry run" is enabled, the process crawl will report which documents would be deleted by a normal process crawl, but it does not delete any documents from your engine. Use a dry run to get quick feedback after modifying crawl rules through the web crawler API. If the results of the dry run include documents you do not want to remove, further modify your crawl rules. Repeat this process until you get the expected results.

HTTP authentication

editThe web crawler can crawl domains protected by HTTP Authentication.

This feature requires an appropriate Elastic subscription level. Refer to the Elastic subscriptions pages for Elastic Cloud and self-managed deployments.

Use the web crawler API to provide the crawler with the information required to crawl each domain.

Create or update each domain with the auth parameter.

Refer to the following API references:

Related: HTTP proxy

HTTP proxy

editThe web crawler can crawl content through an HTTP proxy, with or without Basic authentication.

Refer to the following guide: Crawl a private network using a web crawler on Elastic Cloud.

HTTP proxy with basic authentication requires an appropriate Elastic subscription level. Refer to the Elastic subscriptions pages for Elastic Cloud and self-managed deployments.

Configure Enterprise Search with the necessary information about the HTTP proxy. Refer to the following configuration settings reference: Crawler HTTP proxy settings.

Related: HTTP authentication

HTTP response status codes handling

editThe following sections describe how the web crawler handles HTTP response status codes for HTML documents and robots.txt files.

HTML response code handling

edit| Code | Description | Web crawler behavior |

|---|---|---|

|

Success |

The web crawler extracts and de-duplicates the page’s content into a search document. Then it indexes the document into the engine, replacing an existing document with the same content if present. |

|

Redirection |

The web crawler follows all redirects within configured domains recursively until it receives a |

|

Permanent error |

The web crawler assumes this error is permanent. It therefore does not index the document. Furthermore, if the document is present in the engine, the web crawler deletes the document. |

|

Temporary error |

The web crawler optimistically assumes this error will resolve in the future. If the engine already contains a document representing the page, the document is updated to indicate the page was inaccessible. After the page is inaccessible for three consecutive crawls, the document is deleted from the engine. |

Robots.txt file response code handling

edit| Code | Description | Web crawler behavior |

|---|---|---|

|

Success |

The web crawler processes and honors the directives for the domain within the robots.txt file. |

|

Redirect |

The web crawler follows redirects (including redirects to external domains) until it receives a |

|

Permanent error |

The web crawler assumes this error is permanent and no specific rules exist for the domain. It therefore allows all paths for the domain (subject to crawl rules). |

|

Temporary error |

The web crawler optimistically assumes this error will resolve in the future. However, in the interim, the crawler does not know which paths are allowed and disallowed. It therefore disallows all paths for the domain. |

Web crawler schema

editThe web crawler indexes search documents using the following schema. All fields are strings or arrays of strings.

-

additional_urls - The URLs of additional pages with the same content.

-

body_content -

The content of the page’s

<body>tag with all HTML tags removed. Truncated tocrawler.extraction.body_size.limit. -

content_hash - A "fingerprint" to uniquely identify this content, which is used to handle duplicate documents. See Duplicate document handling.

-

domains - The domains in which this content appears.

-

headings -

The text of the page’s HTML headings (

h1-h6elements). Limited bycrawler.extraction.headings_count.limit. -

id - The unique identifier for the page.

-

links -

Links found on the page.

Limited by

crawler.extraction.indexed_links_count.limit. -

meta_description -

The page’s description, taken from the

<meta name="description">tag. Truncated tocrawler.extraction.description_size.limit. -

meta_keywords -

The page’s keywords, taken from the

<meta name="keywords">tag. Truncated tocrawler.extraction.keywords_size.limit. -

title -

The title of the page, taken from the

<title>tag. Truncated tocrawler.extraction.title_size.limit. -

url - The URL of the page.

-

url_host - The hostname or IP from the page’s URL.

-

url_path - The full pathname from the page’s URL.

-

url_path_dir1 - The first segment of the pathname from the page’s URL.

-

url_path_dir2 - The second segment of the pathname from the page’s URL.

-

url_path_dir3 - The third segment of the pathname from the page’s URL.

-

url_port - The port number from the page’s URL (as a string).

-

url_scheme - The scheme of the page’s URL.

In addition to these predefined fields, you can also extract custom fields via meta tags and attributes.

Web crawler events logs

editThe web crawler logs many events while discovering, extracting, and indexing web content.

To view these events, see View web crawler events logs.

For a complete reference of all events, see Web crawler events logs reference.

Web crawler configuration settings

editOperators can configure several web crawler settings.

See Elastic crawler within the Enterprise Search configuration documentation.