Fleet Server scalability

This page summarizes the resource and Fleet Server configuration requirements needed to scale your deployment of Elastic Agents. To scale Fleet Server, you need to modify settings in your deployment and the Fleet Server agent policy.

Refer to the Scaling recommendations section for specific guidance on running Fleet Server at scale.

First modify your Fleet deployment settings in Elastic Cloud:

Log in to Elastic Cloud and find your deployment.

Select Manage, then under the deployment's name in the navigation menu, click Edit.

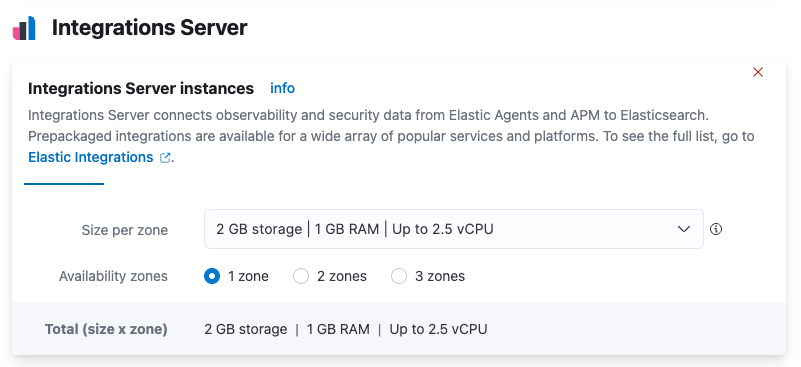

Under Integrations Server:

- Modify the compute resources available to the server to accommodate a higher scale of Elastic Agents

- Modify the availability zones to satisfy fault tolerance requirements

For recommended settings, refer to Scaling recommendations (Elastic Cloud).

Next modify the Fleet Server configuration by editing the agent policy:

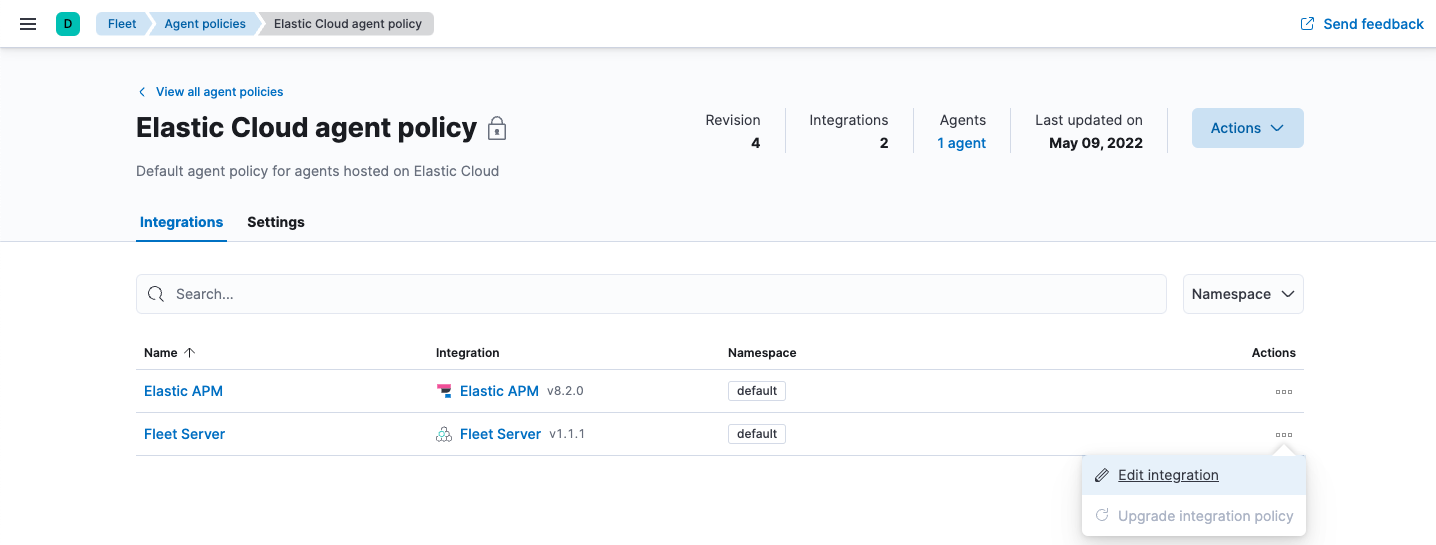

In Fleet, open the Agent policies tab. Click the name of the Elastic Cloud agent policy to edit the policy.

Open the Actions menu next to the Fleet Server integration and click Edit integration.

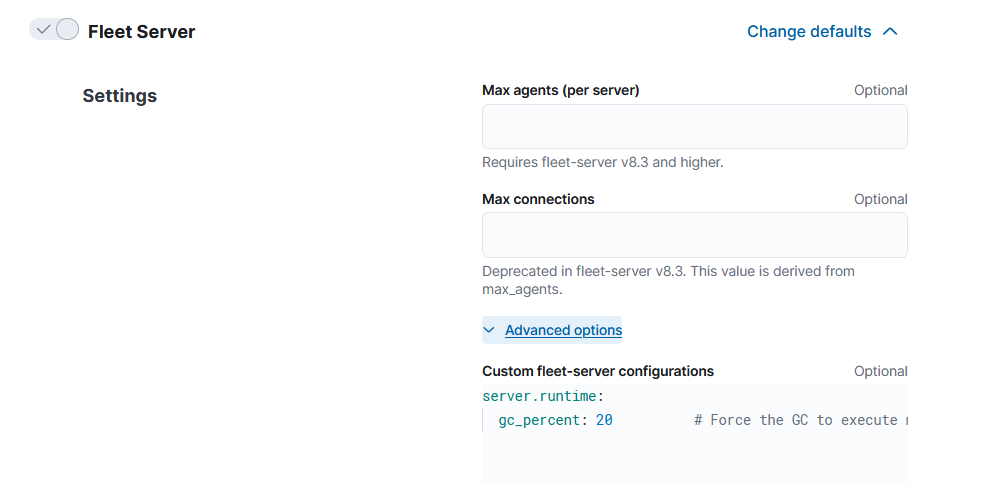

Under Fleet Server, modify Max Connections and other advanced settings as described in Scaling recommendations (Elastic Cloud).

The following advanced settings are available to fine-tune your Fleet Server deployment.

`cache`

- `num_counters`: Size of the hash table. Best practice is to set this to 10 times the max connections.
- `max_cost`: Total size of the cache.
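For illustration, the following sketch applies the 10x rule to a hypothetical Fleet Server with Max Connections set to 10,000. It assumes that your version of the Fleet Server integration accepts these settings as custom YAML in its advanced options, nested under the names listed here. `max_cost` is left out because this page does not state its unit.

```yaml
# Hypothetical example: assumes Max Connections is set to 10000
# in the Fleet Server integration settings.
cache:
  # Best practice: 10 times the max connections (10 * 10000 = 100000).
  num_counters: 100000
```

If you change Max Connections later, scale `num_counters` with it so the ratio stays at roughly 10x.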

`server.timeouts`

- `checkin_timestamp`: How often Fleet Server updates the "last activity" field for each agent. Defaults to `30s`. In a large-scale deployment, increasing this setting may improve performance. If this setting is higher than `2m`, most agents will be shown as "offline" in the Fleet UI. For a typical setup, we recommend keeping this value below `2m`.
- `checkin_long_poll`: How long Fleet Server allows a long poll request from an agent before timing out. Defaults to `5m`. In a large-scale deployment, increasing this setting may improve performance.

`server.limits`

- `policy_throttle`: How often a new policy is rolled out to the agents. Deprecated: use the `action_limit` settings instead.
- `action_limit.interval`: How quickly Fleet Server dispatches pending actions to the agents.

- `action_limit.burst`: Burst of actions that may be dispatched before falling back to the rate limit defined by `interval`.
- `checkin_limit.max`: Maximum number of agents that can call the checkin API concurrently.
- `checkin_limit.interval`: How fast the agents can check in to Fleet Server.
- `checkin_limit.burst`: Burst of check-ins allowed before falling back to the rate defined by `interval`.
- `checkin_limit.max_body_byte_size`: Maximum size in bytes of the checkin API request body.

- `artifact_limit.max`: Maximum number of agents that can call the artifact API concurrently. This setting helps prevent artifact API calls from overloading Fleet Server.
- `artifact_limit.interval`: How often artifacts are rolled out. The default of `100ms` allows 10 artifacts to be rolled out per second.
- `artifact_limit.burst`: Number of transactions allowed for a burst, controlling oversubscription on the outbound buffer.
- `artifact_limit.max_body_byte_size`: Maximum size in bytes of the artifact API request body.

- `ack_limit.max`: Maximum number of agents that can call the ack API concurrently. This setting helps prevent ack API calls from overloading Fleet Server.
- `ack_limit.interval`: How often an acknowledgment (ACK) is sent. The default of `10ms` allows 100 ACKs to be sent per second.
- `ack_limit.burst`: Burst of ACKs to accommodate (default `20`) before falling back to the rate defined by `interval`.
- `ack_limit.max_body_byte_size`: Maximum size in bytes of the ack API request body.

- `enroll_limit.max`: Maximum number of agents that can call the enroll API concurrently. This setting helps prevent enrollment API calls from overloading Fleet Server.
- `enroll_limit.interval`: Interval between processing enrollment requests. Enrollment is both CPU and RAM intensive, so the number of enrollment requests needs to be limited for overall system health. The default of `100ms` allows 10 enrollments per second.
- `enroll_limit.burst`: Burst of enrollments to accept before falling back to the rate defined by `interval`.
- `enroll_limit.max_body_byte_size`: Maximum size in bytes of the enroll API request body.

- `status_limit.max`: Maximum number of agents that can call the status API concurrently. This setting helps prevent status API calls from overloading Fleet Server.
- `status_limit.interval`: How frequently agents can submit status requests to Fleet Server.
- `status_limit.burst`: Burst of status requests to accommodate before falling back to the rate defined by `interval`.
- `status_limit.max_body_byte_size`: Maximum size in bytes of the status API request body.

- `upload_start_limit.max`: Maximum number of agents that can call the uploadStart API concurrently. This setting helps prevent uploadStart API calls from overloading Fleet Server.
- `upload_start_limit.interval`: How frequently agents can submit file upload start requests to Fleet Server.
- `upload_start_limit.burst`: Burst of file upload start requests to accommodate before falling back to the rate defined by `interval`.
- `upload_start_limit.max_body_byte_size`: Maximum size in bytes of the uploadStart API request body.

- `upload_end_limit.max`: Maximum number of agents that can call the uploadEnd API concurrently. This setting helps prevent uploadEnd API calls from overloading Fleet Server.
- `upload_end_limit.interval`: How frequently agents can submit file upload end requests to Fleet Server.
- `upload_end_limit.burst`: Burst of file upload end requests to accommodate before falling back to the rate defined by `interval`.
- `upload_end_limit.max_body_byte_size`: Maximum size in bytes of the uploadEnd API request body.

- `upload_chunk_limit.max`: Maximum number of agents that can call the uploadChunk API concurrently. This setting helps prevent uploadChunk API calls from overloading Fleet Server.
- `upload_chunk_limit.interval`: How frequently agents can submit file chunk upload requests to Fleet Server.
- `upload_chunk_limit.burst`: Burst of file chunk upload requests to accommodate before falling back to the rate defined by `interval`.
- `upload_chunk_limit.max_body_byte_size`: Maximum size in bytes of the uploadChunk API request body.
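The following sketch shows how some of the `server.timeouts` and `server.limits` settings might be nested in YAML. It uses the same assumption: that the integration's advanced options accept custom YAML under these names. Values commented as defaults are the ones stated above. The raised `checkin_timestamp` and the slower `enroll_limit.interval` are hypothetical adjustments for a large deployment, not tested recommendations.

```yaml
server:
  timeouts:
    # Hypothetical: raised from the 30s default. Kept below 2m so that
    # most agents are not shown as "offline" in the Fleet UI.
    checkin_timestamp: 1m
    # Default long-poll timeout.
    checkin_long_poll: 5m
  limits:
    artifact_limit:
      # Default: one artifact every 100ms, or 10 per second.
      interval: 100ms
    ack_limit:
      # Default: one ACK every 10ms, or 100 per second.
      interval: 10ms
      # Default: up to 20 ACKs in a burst before falling back to the interval rate.
      burst: 20
    enroll_limit:
      # Hypothetical: slowed from the 100ms default (10 per second)
      # to 200ms, which allows 5 enrollments per second.
      interval: 200ms
```

Each `interval` sets a steady rate of one request per interval (rate = 1 / interval). `burst` lets that many requests through before the steady rate applies again.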

The following table provides the minimum resource requirements and scaling guidelines based on the number of agents in your deployment. These compute resources can be spread across multiple availability zones. For example, a 32 GB RAM requirement can be satisfied with 16 GB of RAM in each of 2 zones.

| Number of agents | Fleet Server memory | Fleet Server vCPU | Elasticsearch hot tier |
|---|---|---|---|
| 2,000 | 2 GB | up to 8 vCPU | 32 GB RAM, 8 vCPU |
| 5,000 | 4 GB | up to 8 vCPU | 32 GB RAM, 8 vCPU |
| 10,000 | 8 GB | up to 8 vCPU | 128 GB RAM, 32 vCPU |
| 15,000 | 8 GB | up to 8 vCPU | 256 GB RAM, 64 vCPU |
| 25,000 | 8 GB | up to 8 vCPU | 256 GB RAM, 64 vCPU |
| 50,000 | 8 GB | up to 8 vCPU | 384 GB RAM, 96 vCPU |
| 75,000 | 8 GB | up to 8 vCPU | 384 GB RAM, 96 vCPU |
| 100,000 | 16 GB | 16 vCPU | 512 GB RAM, 128 vCPU |

A series of scale performance tests is run regularly to verify the requirements above and Fleet's ability to manage the advertised number of Elastic Agents. The tests are evaluated against acceptance criteria that mimic a typical platform operator workflow: installing agents, upgrading versions, modifying policies, and adding or removing integrations, tags, and policies. The acceptance criteria are met when the Elastic Agents reach a Healthy state after each of these operations.

Elastic Agent policies

A single instance of Fleet supports a maximum of 1,000 Elastic Agent policies. If more policies are configured, UI performance might degrade. The maximum number of policies is not affected by the number of spaces in which the policies are used.

Elastic Agents

When you use Fleet to manage a large number of Elastic Agents (10,000 or more), each agent check-in triggers an Elasticsearch authentication request. To reduce the chance of API key cache evictions and to speed up propagation of Elastic Agent policy changes and actions, we recommend setting the API key cache size in your Elasticsearch configuration to twice the maximum number of agents.

For example, with 25,000 running Elastic Agents, you could set the cache size to 50000:

```yaml
xpack.security.authc.api_key.cache.max_keys: 50000
```