Generating alerts for anomaly detection jobs

This guide explains how to create alerts that notify you automatically when an anomaly is detected in an anomaly detection job, or when issues occur that affect job performance.

Kibana's alerting features support two types of machine learning rules, which run scheduled checks on your anomaly detection jobs:

- Anomaly detection alert: Checks job results for anomalies that match your defined conditions and raises an alert when found.

- Anomaly detection jobs health: Monitors the operational status of a job and alerts you if issues occur (such as a stopped datafeed or memory limit errors).

If you have created rules for specific anomaly detection jobs and you want to monitor whether these jobs work as expected, anomaly detection jobs health rules are ideal for this purpose.

If the conditions of a rule are met, an alert is created, and any associated actions (such as sending an email or Slack message) are triggered. For example, you can configure a rule that checks a job every 15 minutes for anomalies with a high score and sends a notification when one is found.
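As a rough illustration, the example rule above could also be expressed as a request body for Kibana's create-rule API (`POST /api/alerting/rule`). This is a sketch rather than a reference: the rule type ID and the field names under `params` are assumptions that should be verified against the API documentation for your Kibana version, and the helper function and job ID are hypothetical.

```python
import json


def build_anomaly_rule_body(job_id: str, interval: str = "15m", severity: int = 75) -> dict:
    """Build a request body for a rule that checks one job on a schedule."""
    return {
        "name": f"Anomalies in {job_id}",
        # Assumed ID of the anomaly detection alert rule type.
        "rule_type_id": "xpack.ml.anomaly_detection_alert",
        "consumer": "alerts",
        "schedule": {"interval": interval},
        # Assumed parameter names; verify them before use.
        "params": {
            "jobSelection": {"jobIds": [job_id]},
            "resultType": "bucket",
            "severity": severity,
            "includeInterim": False,
        },
        # Connector IDs are deployment-specific, so no actions are attached here.
        "actions": [],
    }


body = build_anomaly_rule_body("web-traffic-job")  # hypothetical job ID
print(json.dumps(body, indent=2))
```

The body is only built and printed here. Sending it requires an authenticated request to your Kibana instance.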

In Stack Management > Rules, you can create both types of machine learning rules. In the Machine Learning app, you can create only anomaly detection alert rules; create them from the anomaly detection job wizard after you start the job or from the anomaly detection job list.

Before you begin, make sure that:

- You have at least one running anomaly detection job.

- You have appropriate user permissions to create and manage alert rules.

- If you would like to send notifications about alerts (such as Slack messages, emails, or webhooks), make sure you have configured the necessary connectors.

Anomaly detection alert rules monitor if the anomaly detection job results contain anomalies that match the rule conditions.

To set up an anomaly detection alert rule:

Open Rules: find Stack Management > Rules in the main menu or use the global search field.

Select the Anomaly detection rule type.

Select the anomaly detection job that the rule applies to.

Select a type of machine learning result. You can create rules based on bucket, record, or influencer results.

(Optional) Configure the `anomaly_score` that triggers the action. The `anomaly_score` indicates the significance of a given anomaly compared to previous anomalies. The default severity threshold is 75, which means every anomaly with an `anomaly_score` of 75 or higher triggers the associated action.

(Optional) To narrow down the list of anomalies that the rule looks for, add an Anomaly filter. This feature uses KQL and is only available for the Record and Influencer result types. In the Anomaly filter field, enter a KQL query that specifies fields or conditions to alert on. You can set up the following conditions:

- One or more partitioning or influencer fields in the anomaly results match the specified conditions

- The actual or typical scores in the anomalies match the specified conditions

For example, say you've set up alerting for an anomaly detection job that has `partition_field = "response.keyword"` as the detector. If you were only interested in being alerted on `response.keyword = 404`, enter `partition_field_value: "404"` into the Anomaly filter field. When the rule runs, it will only alert on anomalies with `partition_field_value: "404"`.

Note: When you edit the KQL query, suggested filter-by fields appear. To compare actual and typical values for any fields, use operators such as `>` (greater than), `<` (less than), or `=` (equal to).

(Optional) Turn on Include interim results to include results that are created by the anomaly detection job before a bucket is finalized. These results might disappear after the bucket is fully processed. Include interim results to get notified earlier about potential anomalies, even if they might be false positives. Don't include interim results if you want to get notified only about anomalies of fully processed buckets.

(Optional) Configure Advanced settings:

- Configure the Lookback interval to define how far back to query previous anomalies during each condition check. Its value is derived from the bucket span of the job and the query delay of the datafeed by default. It is not recommended to set the lookback interval lower than the default value, as it might result in missed anomalies.

- Configure the Number of latest buckets to specify how many buckets to check to obtain the highest anomaly score found during the Lookback interval. The alert is created based on the highest scoring anomaly from the most anomalous bucket.

You can preview how the rule would perform on existing data:

- Define the check interval to specify how often the rule conditions are evaluated. It’s recommended to set this close to the job’s bucket span.

- Click Test.

The preview shows how many alerts would have been triggered during the selected time range.

- Set how often to check the rule conditions by selecting a time value and unit under Rule schedule.

- Specify the rule's scope, which determines the Kibana feature privileges that a role must have to access the rule and its alerts. Depending on your role's access, you can select one of the following:

- All: (Default) Roles must have the appropriate privileges for one of the following features:

  - Infrastructure metrics (Observability > Infrastructure)

  - Logs (Observability > Logs)

  - APM (Observability > APM and User Experience)

  - Synthetics (Observability > Synthetics and Uptime)

  - Stack rules (Management > Stack Rules)

- Logs: Roles must have the appropriate Observability > Logs feature privileges.

- Metrics: Roles must have the appropriate Observability > Infrastructure feature privileges.

- Stack Management: Roles must have the appropriate Management > Stack Rules feature privileges.

For example, if you select All, a role with feature access to logs can view or edit the rule and its alerts from the Observability or the Stack Rules page.

- (Optional) Configure Advanced options:

- Define the number of consecutive matches required before an alert is triggered under Alert delay.

- Enable or disable Flapping Detection to reduce noise from frequently changing alerts. You can customize the flapping detection settings if you need different thresholds for detecting flapping behavior.
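The conditions configured in the steps above (severity threshold, anomaly filter, interim results, and number of latest buckets) can be sketched as a small function. This is a simplified illustration, not Kibana's implementation: the function name, the shape of the input data, and the reduction of the KQL filter to a single `partition_field_value` comparison are all assumptions.

```python
def evaluate_rule(buckets, threshold=75, include_interim=False,
                  partition_value=None, top_n_buckets=1):
    """Return the highest-scoring matching record, or None if nothing matches."""
    # Skip interim buckets unless the rule includes interim results.
    candidates = [b for b in buckets if include_interim or not b["is_interim"]]
    # Only look at the most recent N buckets (N is expected to be 1 or more).
    latest = sorted(candidates, key=lambda b: b["timestamp"])[-top_n_buckets:]
    # Keep records at or above the threshold that also pass the filter.
    matches = [
        r
        for b in latest
        for r in b["records"]
        if r["score"] >= threshold
        and (partition_value is None or r["partition_field_value"] == partition_value)
    ]
    return max(matches, key=lambda r: r["score"], default=None)


# Hypothetical results for illustration only.
buckets = [
    {"timestamp": 1, "is_interim": False, "records": [
        {"score": 91.0, "partition_field_value": "404"}]},
    {"timestamp": 2, "is_interim": False, "records": [
        {"score": 80.0, "partition_field_value": "404"},
        {"score": 95.0, "partition_field_value": "500"}]},
    {"timestamp": 3, "is_interim": True, "records": [
        {"score": 99.0, "partition_field_value": "404"}]},
]

hit = evaluate_rule(buckets, partition_value="404", top_n_buckets=2)
print(hit)
```

With interim results excluded, the record in the third bucket is ignored even though it has the highest score, which mirrors the trade-off described for the Include interim results option.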

Next, define the actions that occur when the rule conditions are met.

Anomaly detection jobs health rules monitor job health and alert you if an operational issue occurs that may prevent the job from detecting anomalies.

To set up an anomaly detection jobs health rule:

- Open Rules: find Stack Management > Rules in the main menu or use the global search field.

- Select the Anomaly detection jobs health rule type.

Include jobs and groups:

- Select the job or group that the rule applies to. If you add more jobs to the selected group later, they are automatically included the next time the rule conditions are checked. To apply the rule to all your jobs, you can use a special character (`*`). This ensures that any jobs created after the rule is saved are automatically included.

- (Optional) To exclude jobs that are not critically important, use the Exclude field.

Enable the health check types you want to apply. All checks are enabled by default. At least one check needs to be enabled to create the rule. The following health checks are available:

Datafeed is not started: Notifies if the corresponding datafeed of the job is not started but the job is in an opened state. The notification message recommends the necessary actions to solve the error.

Model memory limit reached: Notifies if the model memory status of the job reaches the soft or hard model memory limit. Optimize your job by following these guidelines or consider amending the model memory limit.

Data delay has occurred: Notifies when the job missed some data. You can define the threshold for the number of missing documents you get alerted on by setting Number of documents. You can control the lookback interval for checking delayed data with Time interval. Refer to the Handling delayed data page to see what to do about delayed data.

Errors in job messages: Notifies when the job messages contain error messages. Review the notification; it contains the error messages, the corresponding job IDs and recommendations on how to fix the issue. This check looks for job errors that occur after the rule is created; it does not look at historic behavior.

Set how often to check the rule conditions by selecting a time value and unit under Rule schedule. It is recommended to select an interval that is close to the bucket span of the job.

(Optional) Configure Advanced options:

- Define the number of consecutive matches required before an alert is triggered under Alert delay.

- Enable or disable Flapping Detection to reduce noise from frequently changing alerts. You can customize the flapping detection settings if you need different thresholds for detecting flapping behavior.
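The four health checks described above can be sketched as a single function over a snapshot of a job's status. This is an illustrative simplification, not how Kibana evaluates the rule: the function name, the `error_messages` field, and the snapshot layout are hypothetical.

```python
def health_issues(job: dict, delayed_docs_threshold: int) -> list:
    """Return the names of the health checks that a job snapshot fails."""
    issues = []
    # The job is open, but its datafeed is not running.
    if job["job_state"] == "opened" and job["datafeed_state"] != "started":
        issues.append("Datafeed is not started")
    # The model has reached the soft or hard memory limit.
    if job["memory_status"] in ("soft_limit", "hard_limit"):
        issues.append("Model memory limit reached")
    # The number of missed documents reaches the configured threshold.
    if job["missed_docs_count"] >= delayed_docs_threshold:
        issues.append("Data delay has occurred")
    # The job messages contain at least one error.
    if job["error_messages"]:
        issues.append("Errors in job messages")
    return issues


# Hypothetical snapshot for illustration only.
job = {
    "job_id": "web-traffic-job",
    "job_state": "opened",
    "datafeed_state": "stopped",
    "memory_status": "ok",
    "missed_docs_count": 0,
    "error_messages": [],
}

issues = health_issues(job, delayed_docs_threshold=1)
print(issues)
```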

Next, define the actions that occur when the rule conditions are met.

You can send notifications when the rule conditions are met and when they are no longer met. These rules support:

- Alert summaries: Combine multiple alerts into a single notification, sent at regular intervals.

- Per-alert actions for anomaly detection: Trigger an action when an anomaly score meets the defined condition.

- Per-alert actions for job health: Trigger an action when an issue is detected in a job’s health status (for example, a stopped datafeed or memory issue).

- Recovery actions: Notify when a previously triggered alert returns to a normal state.

To set up an action:

- Select a connector.

Each action uses a connector, which stores connection information for a Kibana service or supported third-party integration, depending on where you want to send the notifications. For example, you can use a Slack connector to send a message to a channel. Or you can use an index connector that writes a JSON object to a specific index. For details about creating connectors, refer to Connectors.

Set the action frequency. Choose whether you want to send:

- Summary of alerts: Groups multiple alerts into a single notification at each check interval or on a custom schedule.

- A notification for each alert: Sends individual alerts as they are triggered, recovered, or change state.

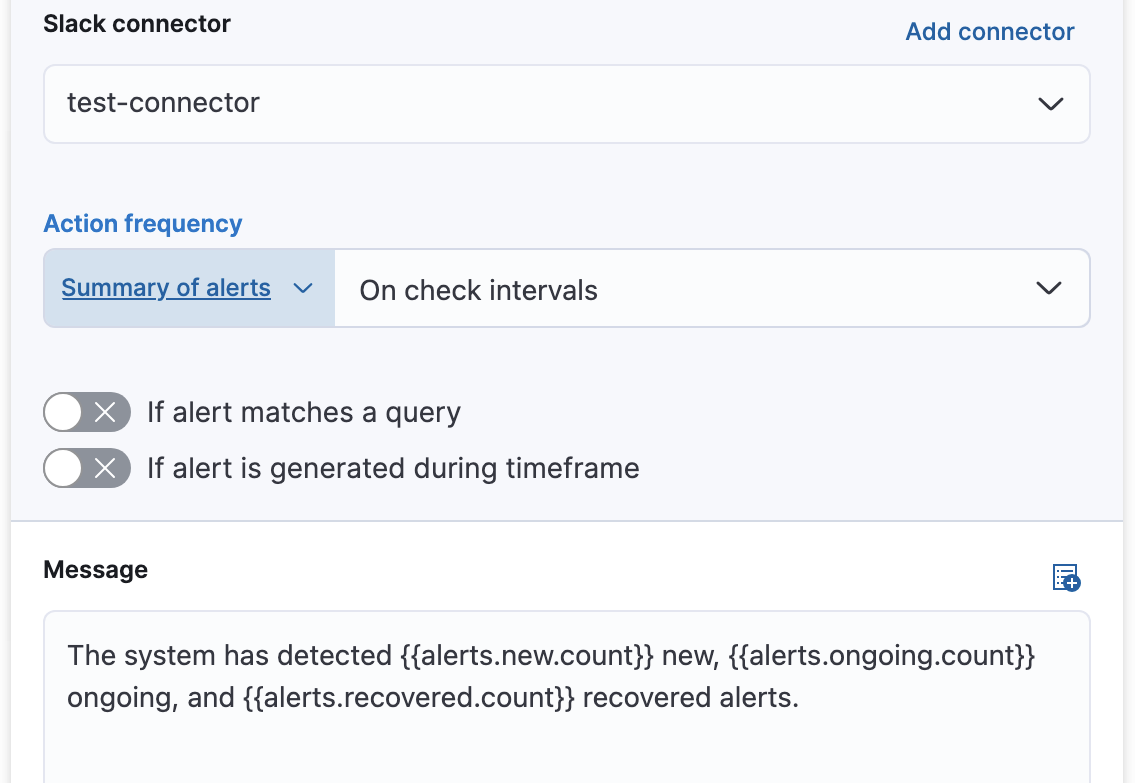

Example: Summary of alerts

You can choose to create a summary of alerts on:

- Each check interval: Sends a summary every time the rule runs (for example, every 5 minutes).

- Custom interval: Sends a summary less often, on a schedule you define (for example, every hour), which helps reduce notification noise. A custom action interval cannot be shorter than the rule's check interval.
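The constraint on custom intervals can be checked with a small helper. This is a minimal sketch, assuming intervals are written as a whole number followed by `s`, `m`, `h`, or `d`; the function names are hypothetical.

```python
import re

_UNIT_SECONDS = {"s": 1, "m": 60, "h": 3600, "d": 86400}


def to_seconds(interval: str) -> int:
    """Convert an interval such as '5m' or '1h' to seconds."""
    match = re.fullmatch(r"(\d+)([smhd])", interval)
    if match is None:
        raise ValueError(f"unsupported interval: {interval!r}")
    return int(match.group(1)) * _UNIT_SECONDS[match.group(2)]


def is_valid_summary_interval(check_interval: str, action_interval: str) -> bool:
    """A custom action interval must not be shorter than the check interval."""
    return to_seconds(action_interval) >= to_seconds(check_interval)


print(is_valid_summary_interval("5m", "1h"))
print(is_valid_summary_interval("5m", "1m"))
```

A rule that runs every 5 minutes can summarize alerts hourly, but a 1-minute summary interval would be rejected because the rule does not run that often.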

For example, send Slack notifications that summarize the new, ongoing, and recovered alerts:

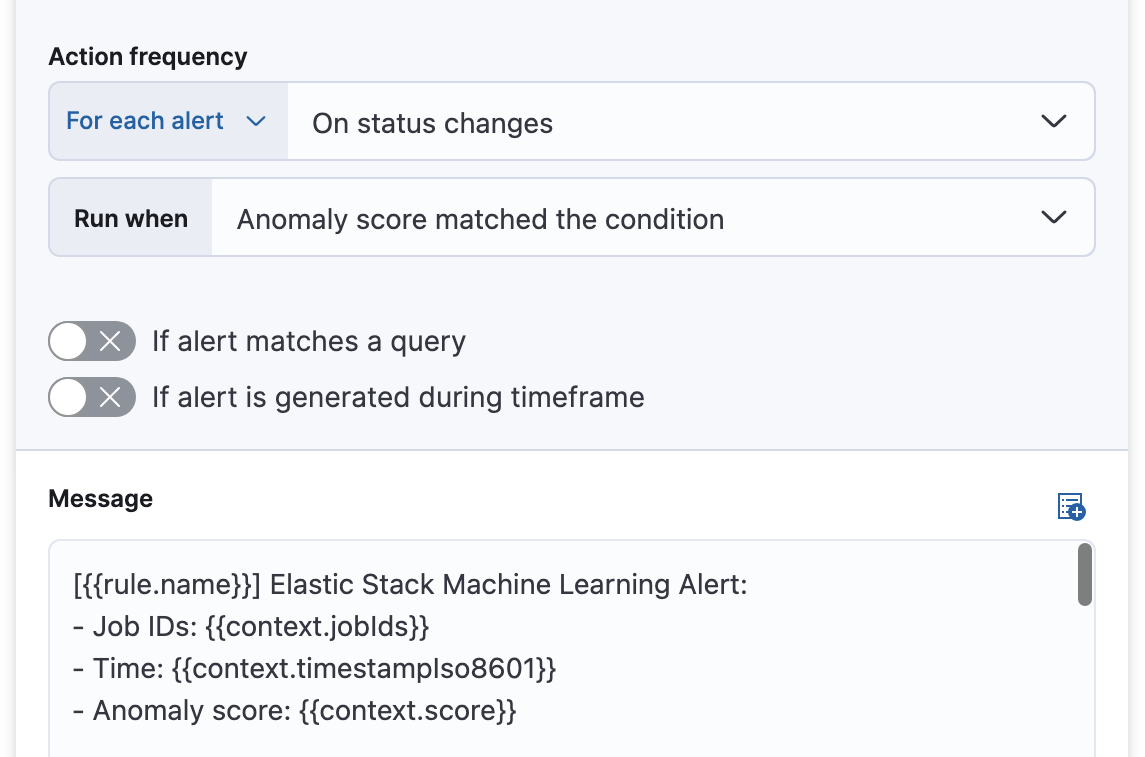

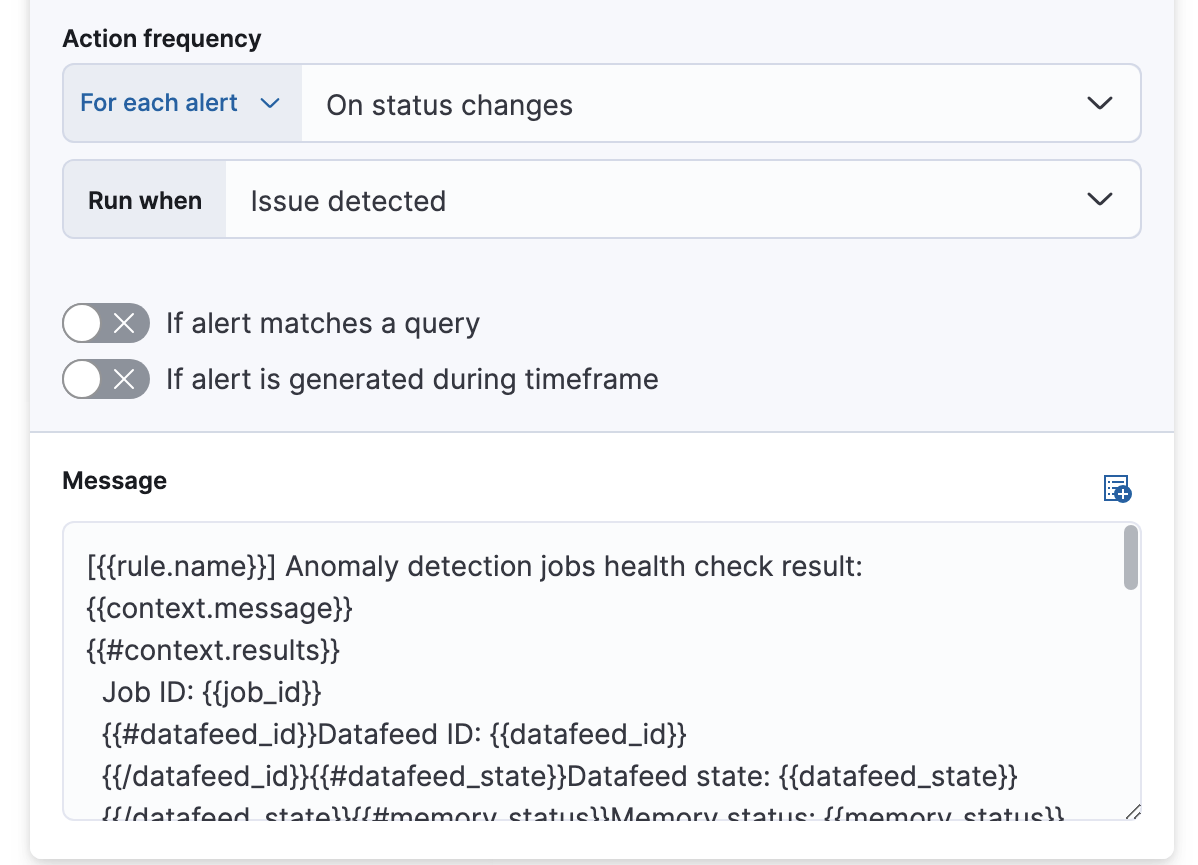

Example: For each alert

Choose how often the action runs:

- at each check interval,

- only when the alert status changes, or

- at a custom action interval.

For anomaly detection alert rules, you must also choose whether the action runs when the anomaly score matches the condition or when the alert recovers.

For anomaly detection jobs health rules, choose whether the action runs when the issue is detected or when it is recovered.

Specify that actions run only when they match a KQL query or occur within a specific time frame.

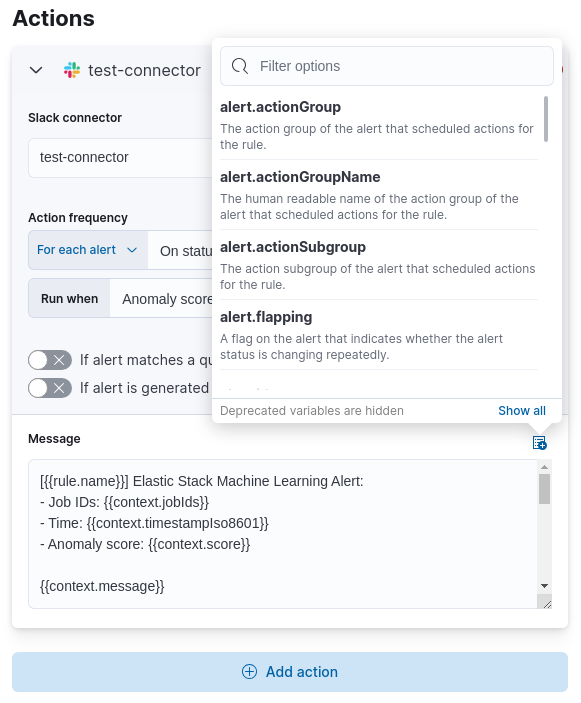

Use variables to customize the notification message. Click the icon above the message field to view available variables, or refer to action variables.

After you save the configurations, the rule appears in the Stack Management > Rules list; you can check its status and see the overview of its configuration information.

When an alert occurs for an anomaly detection alert rule, its name is always the job ID of the associated anomaly detection job that triggered it. You can review how the alerts that have occurred correlate with the anomaly detection results in the Anomaly Explorer by using the Anomaly timeline swimlane and the Alerts panel.

If necessary, you can snooze rules to prevent them from generating actions. For more details, refer to Snooze and disable rules.

The following variables are specific to the machine learning rule types. An asterisk (*) marks the variables that you can use in actions related to recovered alerts.

You can also specify variables common to all rules.

Every anomaly detection alert has the following action variables:

`context.anomalyExplorerUrl`* - URL to open in the Anomaly Explorer.

`context.isInterim` - Indicates if top hits contain interim results.

`context.jobIds`* - List of job IDs that triggered the alert.

`context.message`* - A preconstructed message for the alert.

`context.score` - Anomaly score at the time of the notification action.

`context.timestamp` - The bucket timestamp of the anomaly.

`context.timestampIso8601` - The bucket timestamp of the anomaly in ISO8601 format.

`context.topInfluencers` - The list of top influencers. Limited to a maximum of 3 documents.

Properties of `context.topInfluencers`

`influencer_field_name` - The field name of the influencer.

`influencer_field_value` - The entity that influenced, contributed to, or was to blame for the anomaly.

`score` - The influencer score. A normalized score between 0–100 which shows the influencer’s overall contribution to the anomalies.

`context.topRecords` - The list of top records. Limited to a maximum of 3 documents.

Properties of `context.topRecords`

`actual` - The actual value for the bucket.

`by_field_value` - The value of the by field.

`field_name` - Certain functions require a field to operate on, for example, `sum()`. For those functions, this value is the name of the field to be analyzed.

`function` - The function in which the anomaly occurs, as specified in the detector configuration. For example, `max`.

`over_field_name` - The field used to split the data.

`partition_field_value` - The field used to segment the analysis.

`score` - A normalized score between 0–100, which is based on the probability of the anomalousness of this record.

`typical` - The typical value for the bucket, according to analytical modeling.
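To show how the variables listed above fit together, the following sketch builds a notification text from a dictionary shaped like the `context` of an anomaly detection alert. Kibana fills these variables into the action message itself; this code only mimics the structure. The function name and all sample values are hypothetical, and `actual` and `typical` are assumed to be plain numbers.

```python
def format_alert(context: dict) -> str:
    """Build a plain-text notification from anomaly detection alert variables."""
    lines = [
        f"Jobs: {', '.join(context['jobIds'])}",
        f"Score: {context['score']} at {context['timestampIso8601']}",
    ]
    # One line per top record (the list holds at most 3 entries).
    for record in context["topRecords"]:
        lines.append(
            f"- {record['function']}({record['field_name']}): "
            f"actual={record['actual']}, typical={record['typical']}, "
            f"score={record['score']}"
        )
    lines.append(f"Details: {context['anomalyExplorerUrl']}")
    return "\n".join(lines)


# Hypothetical values for illustration only.
context = {
    "jobIds": ["web-traffic-job"],
    "score": 91,
    "timestampIso8601": "2024-01-01T00:00:00.000Z",
    "anomalyExplorerUrl": "https://kibana.example.com/app/ml/explorer",
    "topRecords": [
        {"function": "max", "field_name": "bytes",
         "actual": 5120, "typical": 1024, "score": 91},
    ],
}

message = format_alert(context)
print(message)
```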

Every health check has two main variables: `context.message` and `context.results`. The properties of `context.results` may vary based on the type of check. You can find the possible properties for all the checks below.

Datafeed is not started

`context.message`* - A preconstructed message for the alert.

`context.results` - Contains the following properties:

Properties of `context.results`

`datafeed_id`* - The datafeed identifier.

`datafeed_state`* - The state of the datafeed. It can be `starting`, `started`, `stopping`, or `stopped`.

`job_id`* - The job identifier.

`job_state`* - The state of the job. It can be `opening`, `opened`, `closing`, `closed`, or `failed`.

Model memory limit reached

`context.message`* - A preconstructed message for the rule.

`context.results` - Contains the following properties:

Properties of `context.results`

`job_id`* - The job identifier.

`memory_status`* - The status of the mathematical model. It can have one of the following values:

- `soft_limit`: The model used more than 60% of the configured memory limit and older unused models will be pruned to free up space. In categorization jobs, no further category examples will be stored.

- `hard_limit`: The model used more space than the configured memory limit. As a result, not all incoming data was processed.

The `memory_status` is `ok` for recovered alerts.

`model_bytes`* - The number of bytes of memory used by the models.

`model_bytes_exceeded`* - The number of bytes over the high limit for memory usage at the last allocation failure.

`model_bytes_memory_limit`* - The upper limit for model memory usage.

`log_time`* - The timestamp of the model size statistics according to server time. Time formatting is based on the Kibana settings.

`peak_model_bytes`* - The peak number of bytes of memory ever used by the model.
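As an illustration of how the memory-related properties relate to each other, the sketch below turns one `context.results` entry into a short summary line. It assumes that the byte values arrive as plain numbers, which may not hold in every setup; the function name and sample values are hypothetical.

```python
def memory_usage_summary(result: dict) -> str:
    """Summarize one result entry of a model memory limit alert."""
    used = result["model_bytes"]
    limit = result["model_bytes_memory_limit"]
    # Share of the configured memory limit that the model currently uses.
    percent = 100 * used / limit
    return (
        f"{result['job_id']}: {result['memory_status']}, "
        f"{percent:.0f}% of the memory limit in use"
    )


# Hypothetical values for illustration only.
result = {
    "job_id": "web-traffic-job",
    "memory_status": "soft_limit",
    "model_bytes": 7_000_000,
    "model_bytes_memory_limit": 10_000_000,
}

summary = memory_usage_summary(result)
print(summary)
```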

Data delay has occurred

`context.message`* - A preconstructed message for the rule.

`context.results` - For recovered alerts, `context.results` is either empty (when there is no delayed data) or the same as for an active alert (when the number of missing documents is less than the Number of documents threshold set by the user). Contains the following properties:

Properties of `context.results`

`annotation`* - The annotation corresponding to the data delay in the job.

`end_timestamp`* - Timestamp of the latest finalized buckets with missing documents. Time formatting is based on the Kibana settings.

`job_id`* - The job identifier.

`missed_docs_count`* - The number of missed documents.

Errors in job messages

`context.message`* - A preconstructed message for the rule.

`context.results` - Contains the following properties:

Properties of `context.results`

`timestamp` - Timestamp of the latest finalized buckets with missing documents.

`job_id` - The job identifier.

`message` - The error message.

`node_name` - The name of the node that runs the job.