Finding outliers in the eCommerce sample data

This functionality is in beta and is subject to change. The design and code is less mature than official GA features and is being provided as-is with no warranties. Beta features are not subject to the support SLA of official GA features.

The goal of outlier detection is to find the most unusual documents in an index. Let’s try to detect unusual customer behavior in the eCommerce sample data set.

-

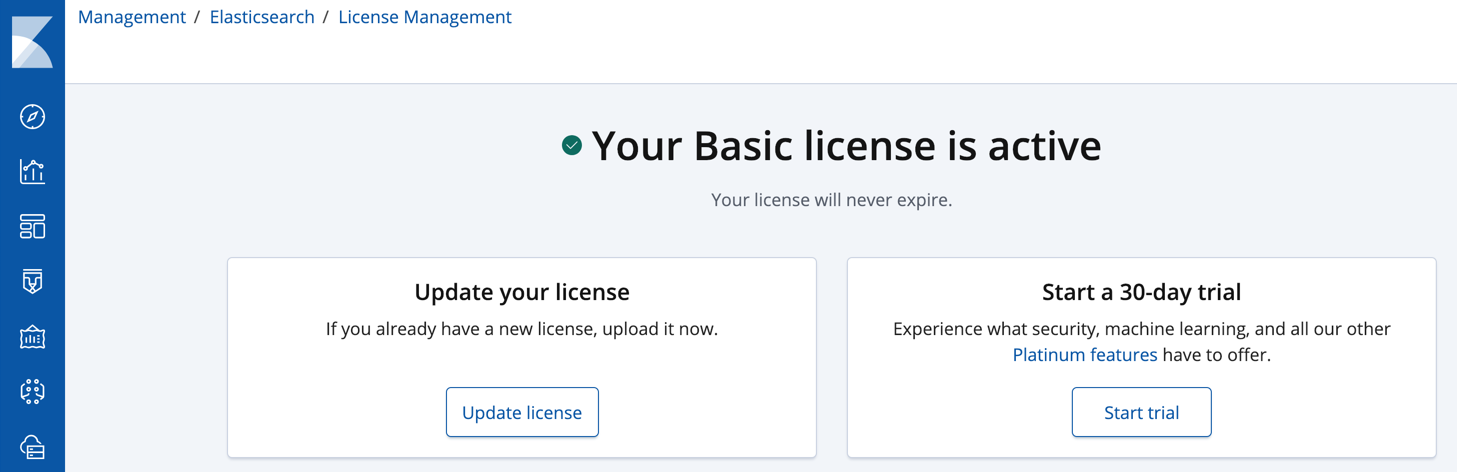

Obtain a license that includes the machine learning features.

By default, when you install Elastic Stack products, they apply basic licenses with no expiration dates. To view your license in Kibana, go to Management and click License Management.

For more information about Elastic license levels, see https://www.elastic.co/subscriptions.

You can start a 30-day trial to try out all of the platinum features, including machine learning features. Click Start trial on the License Management page in Kibana.

If your cluster has already activated a trial license for the current major version, you cannot start a new trial. For example, if you have already activated a trial for v6.0, you cannot start a new trial until v7.0.

At the end of the trial period, the platinum features operate in a degraded mode. You can revert to a basic license, extend the trial, or purchase a subscription.

-

If the Elasticsearch security features are enabled, obtain a user ID with sufficient privileges to complete these steps.

You need `manage_data_frame_transforms` cluster privileges to preview and create transforms. Members of the built-in `data_frame_transforms_admin` role have these privileges.

You must also be a member of the `machine_learning_admin` built-in role to create and manage data frame analytics jobs.

You also need `read` and `view_index_metadata` index privileges on the source indices and `read`, `create_index`, and `index` privileges on the destination indices.

For more information, see Security privileges and Built-in roles.
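As a rough illustration, the index and cluster privileges listed above could be collected into a custom role. The following Python sketch only builds and prints a JSON body in the general shape used by the Elasticsearch create role API; it does not send anything. The role name `ecommerce_outlier_user` is hypothetical, the index names are the ones used later in this example, and membership in `machine_learning_admin` would still have to be granted separately.

```python
import json

# Hypothetical role name, introduced only for this illustration.
ROLE_NAME = "ecommerce_outlier_user"

role_body = {
    # Needed to preview and create transforms.
    "cluster": ["manage_data_frame_transforms"],
    "indices": [
        # Source of the transform.
        {"names": ["kibana_sample_data_ecommerce"],
         "privileges": ["read", "view_index_metadata"]},
        # Destination of the transform and source of the analytics job.
        {"names": ["ecommerce-customer-sales"],
         "privileges": ["read", "view_index_metadata", "create_index", "index"]},
        # Destination of the analytics job.
        {"names": ["ecommerce-outliers"],
         "privileges": ["read", "create_index", "index"]},
    ],
}

print(ROLE_NAME)
print(json.dumps(role_body, indent=2))
```

The intermediate index appears with both sets of privileges because it is written by the transform and then read by the data frame analytics job.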

-

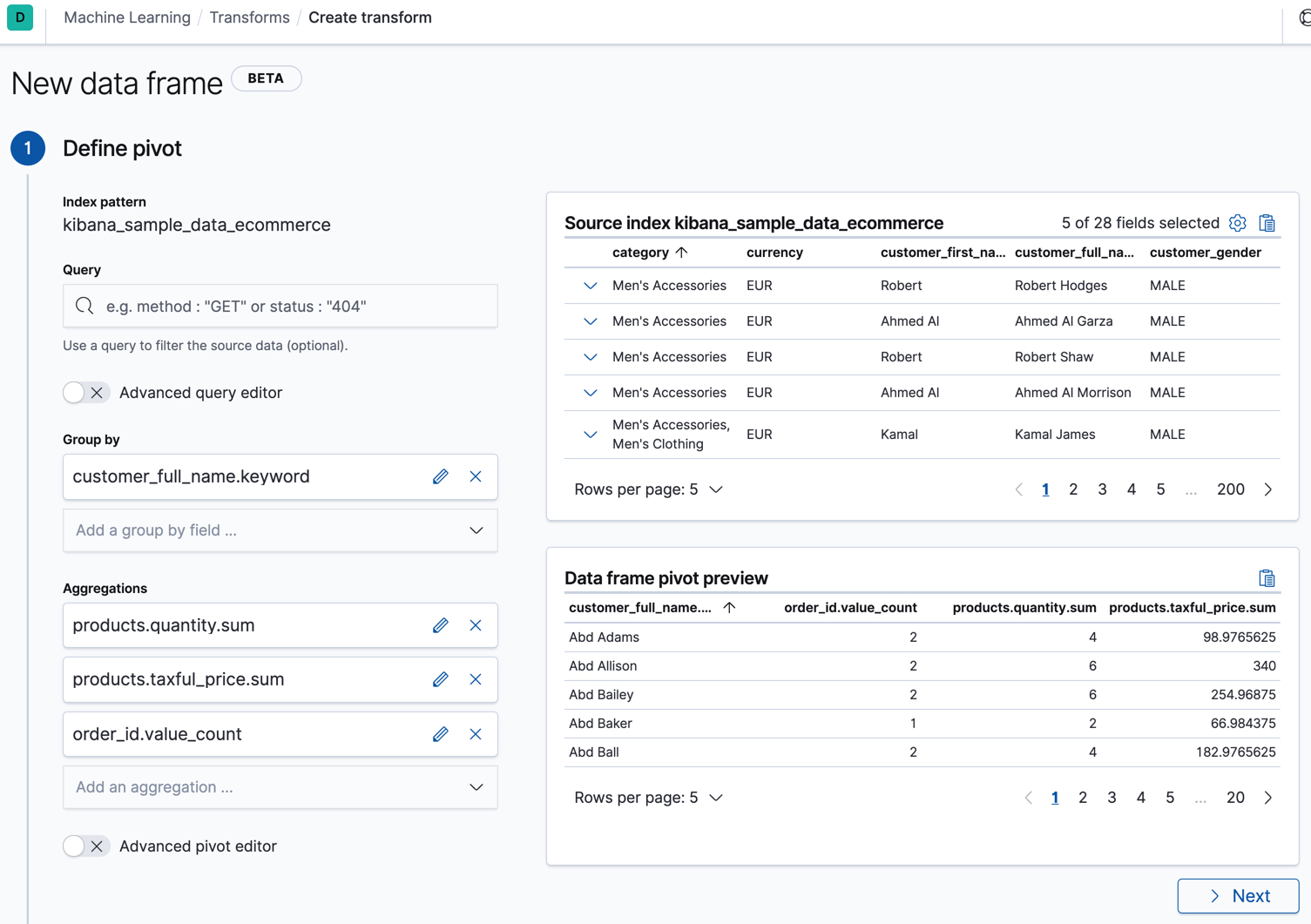

Create a transform that generates an entity-centric index with numeric or boolean data to analyze.

In this example, we’ll use the eCommerce orders sample data and pivot the data such that we get a new index that contains a sales summary for each customer.

In particular, create a transform that calculates the sum of the products (`products.quantity`) and the sum of prices (`products.taxful_price`) in all of the orders, grouped by customer (`customer_full_name`). Also include a value count aggregation, so that we know how many orders (`order_id`) exist for each customer.

You can preview the transform before you create it in Kibana:

Alternatively, you can preview and create the transform with the following APIs:

    POST _data_frame/transforms/_preview
    {
      "source": {
        "index": [ "kibana_sample_data_ecommerce" ]
      },
      "pivot": {
        "group_by": {
          "customer_full_name.keyword": {
            "terms": { "field": "customer_full_name.keyword" }
          }
        },
        "aggregations": {
          "products.quantity.sum": {
            "sum": { "field": "products.quantity" }
          },
          "products.taxful_price.sum": {
            "sum": { "field": "products.taxful_price" }
          },
          "order_id.value_count": {
            "value_count": { "field": "order_id" }
          }
        }
      }
    }

    PUT _data_frame/transforms/ecommerce-customer-sales
    {
      "source": {
        "index": [ "kibana_sample_data_ecommerce" ]
      },
      "pivot": {
        "group_by": {
          "customer_full_name.keyword": {
            "terms": { "field": "customer_full_name.keyword" }
          }
        },
        "aggregations": {
          "products.quantity.sum": {
            "sum": { "field": "products.quantity" }
          },
          "products.taxful_price.sum": {
            "sum": { "field": "products.taxful_price" }
          },
          "order_id.value_count": {
            "value_count": { "field": "order_id" }
          }
        }
      },
      "description": "E-commerce sales by customer",
      "dest": {
        "index": "ecommerce-customer-sales"
      }
    }

For more details about creating transforms, see Transforming the eCommerce sample data.
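To make the pivot concrete, the following Python sketch computes the same three summary values locally for a few made-up orders. The order documents are hypothetical and only mimic the shape of the sample data (each order carries a list of products). The transform itself runs inside Elasticsearch; this is a sketch of the resulting per-customer summary, not of how the transform is implemented.

```python
from collections import defaultdict

# Hypothetical orders shaped like the eCommerce sample data (values are made up).
orders = [
    {"order_id": 1, "customer_full_name": "A Example",
     "products": [{"quantity": 1, "taxful_price": 10.0},
                  {"quantity": 1, "taxful_price": 15.5}]},
    {"order_id": 2, "customer_full_name": "A Example",
     "products": [{"quantity": 2, "taxful_price": 40.0}]},
    {"order_id": 3, "customer_full_name": "B Example",
     "products": [{"quantity": 1, "taxful_price": 7.25}]},
]


def pivot_by_customer(docs):
    """Group orders by customer and compute the three summary values."""
    summary = defaultdict(lambda: {
        "products.quantity.sum": 0,
        "products.taxful_price.sum": 0.0,
        "order_id.value_count": 0,
    })
    for doc in docs:
        row = summary[doc["customer_full_name"]]
        for product in doc["products"]:
            row["products.quantity.sum"] += product["quantity"]
            row["products.taxful_price.sum"] += product["taxful_price"]
        row["order_id.value_count"] += 1  # one order_id value per order
    return dict(summary)


customer_sales = pivot_by_customer(orders)
for name, row in sorted(customer_sales.items()):
    print(name, row)
```

Each key of `customer_sales` corresponds to one document in the entity-centric index, which is what makes the result suitable for comparing customers against each other.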

-

Start the transform.

Even though resource utilization is automatically adjusted based on the cluster load, a transform increases search and indexing load on your cluster while it runs. If the load becomes excessive, you can stop the transform.

You can start, stop, and manage transforms in Kibana. Alternatively, you can use the start transforms API. For example:

    POST _data_frame/transforms/ecommerce-customer-sales/_start

-

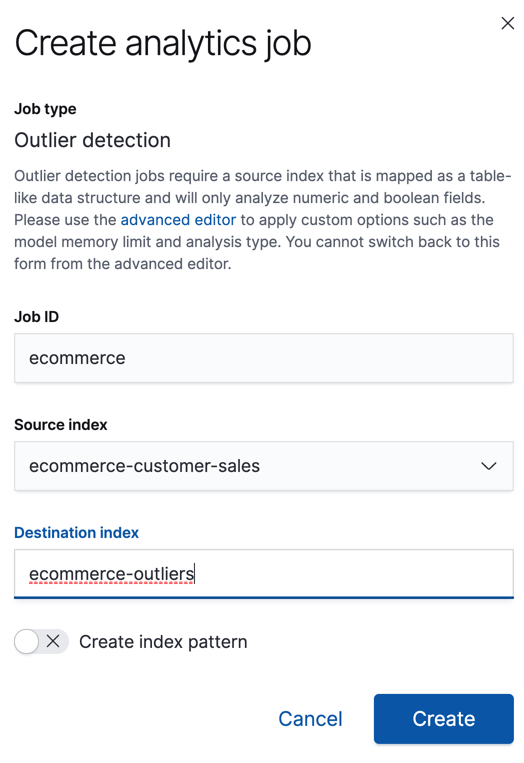

Create a data frame analytics job to detect outliers in the new entity-centric index.

There is a wizard for creating data frame analytics jobs on the Machine Learning > Analytics page in Kibana:

Alternatively, you can use the create data frame analytics jobs API. For example:

    PUT _ml/data_frame/analytics/ecommerce
    {
      "source": {
        "index": "ecommerce-customer-sales"
      },
      "dest": {
        "index": "ecommerce-outliers"
      },
      "analysis": {
        "outlier_detection": {
        }
      },
      "analyzed_fields": {
        "includes": [
          "products.quantity.sum",
          "products.taxful_price.sum",
          "order_id.value_count"
        ]
      }
    }

-

Start the data frame analytics job.

You can start, stop, and manage data frame analytics jobs on the Machine Learning > Analytics page in Kibana. Alternatively, you can use the start data frame analytics jobs and stop data frame analytics jobs APIs. For example:

    POST _ml/data_frame/analytics/ecommerce/_start
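If you script these steps rather than using Kibana, the two start calls are issued in order, because the data frame analytics job reads the index that the transform writes. The following Python sketch builds both requests with the standard library but does not send them. The base URL `http://localhost:9200` is an assumption, `build_request` is a helper invented for this example, and authentication is omitted.

```python
import urllib.request

BASE_URL = "http://localhost:9200"  # assumed local cluster; adjust as needed


def build_request(method, path):
    """Build (but do not send) an HTTP request for an Elasticsearch API path."""
    return urllib.request.Request(f"{BASE_URL}/{path}", method=method)


# The transform is started first so that its destination index exists
# and is populated before the analytics job reads from it.
start_requests = [
    build_request("POST", "_data_frame/transforms/ecommerce-customer-sales/_start"),
    build_request("POST", "_ml/data_frame/analytics/ecommerce/_start"),
]

for req in start_requests:
    print(req.get_method(), req.full_url)
    # To actually send the request: urllib.request.urlopen(req)
```

In practice you would likely wait for the transform to finish processing before sending the second request.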

-

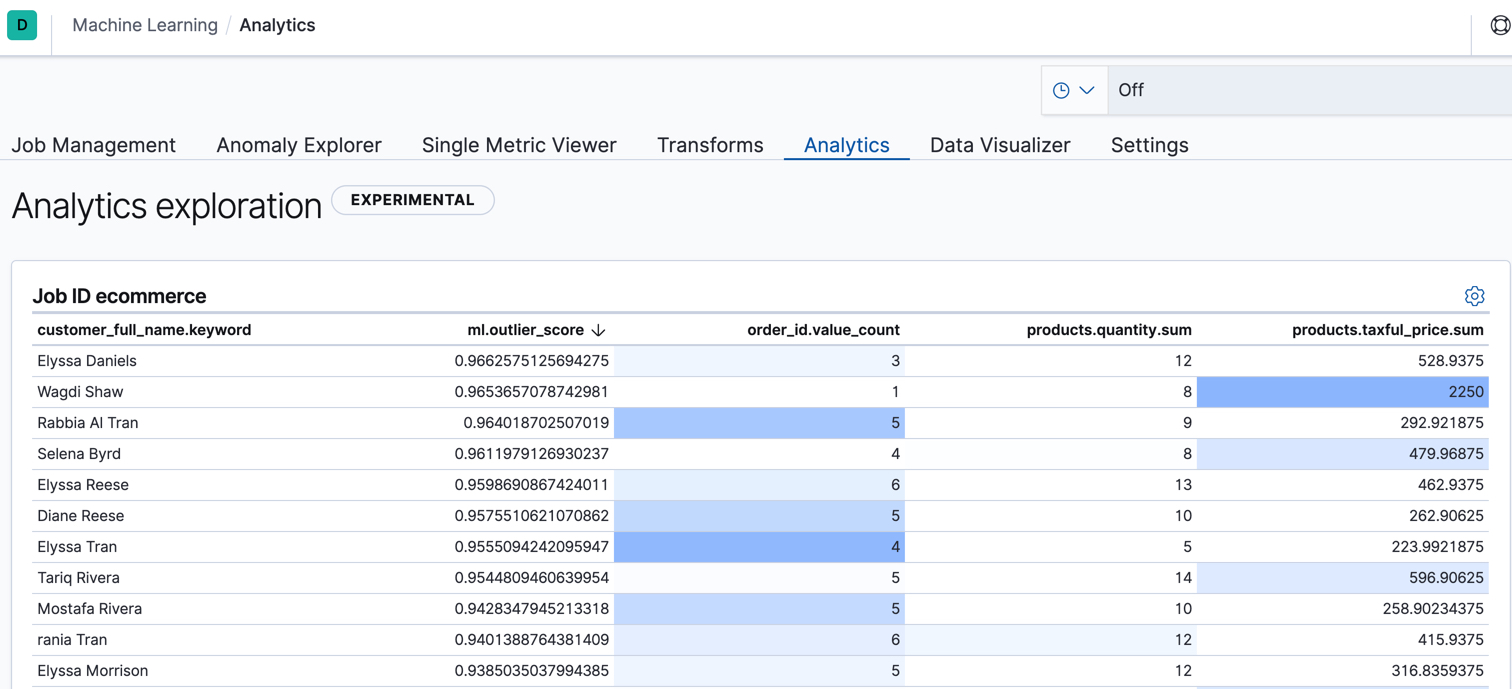

View the results of the outlier detection analysis.

The data frame analytics job creates an index that contains the original data and outlier scores for each document. The outlier score indicates how different each entity is from other entities.

In Kibana, you can view the results from the data frame analytics job and sort them on the outlier score:

The `ml.outlier_score` value is between 0 and 1. The larger the value, the more likely the entity is to be an outlier.

In addition to an overall outlier score, each document is annotated with feature influence values for each field. These values add up to 1 and indicate which fields are the most important in deciding whether an entity is an outlier or inlier. For example, the dark shading on the `products.taxful_price.sum` field for Wagdi Shaw indicates that the sum of the product prices was the most influential feature in determining that Wagdi is an outlier.

If you want to see the exact feature influence values, you can retrieve them from the index that is associated with your data frame analytics job. For example:

    GET ecommerce-outliers/_search?q="Wagdi Shaw"

The search results include the following outlier detection scores:

    ...
    "ml" : {
      "outlier_score" : 0.9653657078742981,
      "feature_influence.products.quantity.sum" : 0.00592468399554491,
      "feature_influence.order_id.value_count" : 0.01975759118795395,
      "feature_influence.products.taxful_price.sum" : 0.974317729473114
    }
    ...
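To read these numbers programmatically, you can rank the feature influence values and check that they add up to roughly 1. The Python sketch below uses the `ml` object copied from the search result above; the `PREFIX` constant and variable names are chosen for this example only.

```python
# The "ml" object copied from the search result above.
ml = {
    "outlier_score": 0.9653657078742981,
    "feature_influence.products.quantity.sum": 0.00592468399554491,
    "feature_influence.order_id.value_count": 0.01975759118795395,
    "feature_influence.products.taxful_price.sum": 0.974317729473114,
}

PREFIX = "feature_influence."

# Keep only the feature influence entries and strip the common prefix.
influences = {
    key[len(PREFIX):]: value
    for key, value in ml.items()
    if key.startswith(PREFIX)
}

# Most influential field first.
ranked = sorted(influences.items(), key=lambda item: item[1], reverse=True)
total = sum(influences.values())

for field, value in ranked:
    print(f"{field}: {value:.4f}")
print(f"total: {total:.6f}")
```

The first entry in `ranked` is `products.taxful_price.sum`, which matches the field singled out in the text above.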

Now that you’ve found unusual behavior in the sample data set, consider how you might apply these steps to other data sets. If you have data that is already marked up with true outliers, you can determine how well the outlier detection algorithms perform by using the evaluate data frame analytics API. See Evaluating data frame analytics.
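As an illustration of what such an evaluation measures, the Python sketch below computes precision and recall at a score threshold for a handful of labeled documents. The scores, labels, and the 0.5 threshold are all made up, and this is a hand-rolled comparison between scores and known labels, not the evaluate data frame analytics API itself.

```python
def precision_recall(scored, threshold):
    """Compute precision and recall for (outlier_score, is_true_outlier) pairs."""
    tp = sum(1 for score, truth in scored if score >= threshold and truth)
    fp = sum(1 for score, truth in scored if score >= threshold and not truth)
    fn = sum(1 for score, truth in scored if score < threshold and truth)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical labeled results: (outlier score, known to be an outlier?)
labeled = [
    (0.97, True), (0.80, False), (0.65, True),
    (0.30, True), (0.10, False), (0.05, False),
]

precision, recall = precision_recall(labeled, threshold=0.5)
print(f"precision={precision:.3f} recall={recall:.3f}")
```

Raising the threshold usually trades recall for precision, so it is worth checking several thresholds rather than relying on one.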

If you do not want to keep the transform and the data frame analytics job, you can delete them in Kibana or use the delete transform API and delete data frame analytics job API. When you delete transforms and data frame analytics jobs, the destination indices and Kibana index patterns remain.