Categorizing log messages

editCategorizing log messages

editApplication log events are often unstructured and contain variable data. For example:

{"time":1454516381000,"message":"org.jdbi.v2.exceptions.UnableToExecuteStatementException: com.mysql.jdbc.exceptions.MySQLTimeoutException: Statement cancelled due to timeout or client request [statement:\"SELECT id, customer_id, name, force_disabled, enabled FROM customers\"]","type":"logs"}

You can use machine learning to observe the static parts of the message, cluster similar messages together, and classify them into message categories.

The machine learning model learns what volume and pattern is normal for each category over time. You can then detect anomalies and surface rare events or unusual types of messages by using count or rare functions. For example:

PUT _ml/anomaly_detectors/it_ops_new_logs { "description" : "IT Ops Application Logs", "analysis_config" : { "categorization_field_name": "message", "bucket_span":"30m", "detectors" :[{ "function":"count", "by_field_name": "mlcategory", "detector_description": "Unusual message counts" }], "categorization_filters":[ "\\[statement:.*\\]"] }, "analysis_limits":{ "categorization_examples_limit": 5 }, "data_description" : { "time_field":"time", "time_format": "epoch_ms" } }

|

The |

|

|

The resulting categories are used in a detector by setting |

The optional categorization_examples_limit property specifies the

maximum number of examples that are stored in memory and in the results data

store for each category. The default value is 4. Note that this setting does

not affect the categorization; it just affects the list of visible examples. If

you increase this value, more examples are available, but you must have more

storage available. If you set this value to 0, no examples are stored.

The optional categorization_filters property can contain an array of regular

expressions. If a categorization field value matches the regular expression, the

portion of the field that is matched is not taken into consideration when

defining categories. The categorization filters are applied in the order they

are listed in the job configuration, which allows you to disregard multiple

sections of the categorization field value. In this example, we have decided that

we do not want the detailed SQL to be considered in the message categorization.

This particular categorization filter removes the SQL statement from the categorization

algorithm.

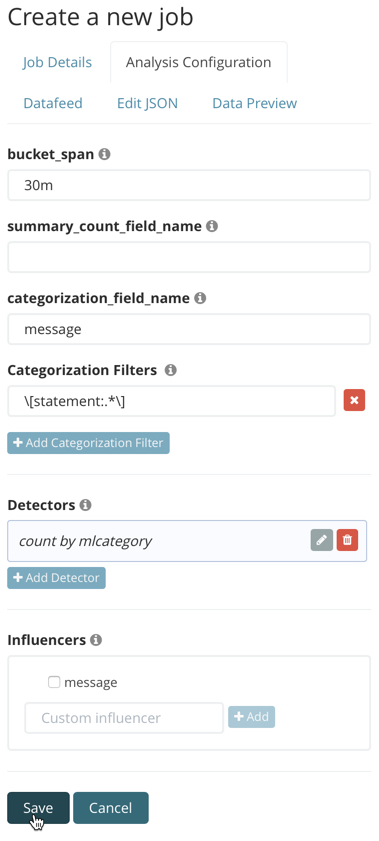

If your data is stored in Elasticsearch, you can create an advanced anomaly detection job with these same properties:

To add the categorization_examples_limit property, you must use the

Edit JSON tab and copy the analysis_limits object from the API example.

Customizing the categorization analyzer

editCategorization uses English dictionary words to identify log message categories. By default, it also uses English tokenization rules. For this reason, if you use the default categorization analyzer, only English language log messages are supported, as described in the Limitations.

You can, however, change the tokenization rules by customizing the way the categorization field values are interpreted. For example:

PUT _ml/anomaly_detectors/it_ops_new_logs2 { "description" : "IT Ops Application Logs", "analysis_config" : { "categorization_field_name": "message", "bucket_span":"30m", "detectors" :[{ "function":"count", "by_field_name": "mlcategory", "detector_description": "Unusual message counts" }], "categorization_analyzer":{ "char_filter": [ { "type": "pattern_replace", "pattern": "\\[statement:.*\\]" } ], "tokenizer": "ml_classic", "filter": [ { "type" : "stop", "stopwords": [ "Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday", "Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun", "January", "February", "March", "April", "May", "June", "July", "August", "September", "October", "November", "December", "Jan", "Feb", "Mar", "Apr", "May", "Jun", "Jul", "Aug", "Sep", "Oct", "Nov", "Dec", "GMT", "UTC" ] } ] } }, "analysis_limits":{ "categorization_examples_limit": 5 }, "data_description" : { "time_field":"time", "time_format": "epoch_ms" } }

|

The

|

|

|

The |

|

|

By default, English day or month words are filtered from log messages before categorization. If your logs are in a different language and contain dates, you might get better results by filtering the day or month words in your language. |

The optional categorization_analyzer property allows even greater customization

of how categorization interprets the categorization field value. It can refer to

a built-in Elasticsearch analyzer or a combination of zero or more character filters,

a tokenizer, and zero or more token filters. If you omit the

categorization_analyzer, the following default values are used:

POST _ml/anomaly_detectors/_validate { "analysis_config" : { "categorization_analyzer" : { "tokenizer" : "ml_classic", "filter" : [ { "type" : "stop", "stopwords": [ "Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday", "Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun", "January", "February", "March", "April", "May", "June", "July", "August", "September", "October", "November", "December", "Jan", "Feb", "Mar", "Apr", "May", "Jun", "Jul", "Aug", "Sep", "Oct", "Nov", "Dec", "GMT", "UTC" ] } ] }, "categorization_field_name": "message", "detectors" :[{ "function":"count", "by_field_name": "mlcategory" }] }, "data_description" : { } }

If you specify any part of the categorization_analyzer, however, any omitted

sub-properties are not set to default values.

The ml_classic tokenizer and the day and month stopword filter are more or less

equivalent to the following analyzer, which is defined using only built-in Elasticsearch

tokenizers and

token filters:

PUT _ml/anomaly_detectors/it_ops_new_logs3 { "description" : "IT Ops Application Logs", "analysis_config" : { "categorization_field_name": "message", "bucket_span":"30m", "detectors" :[{ "function":"count", "by_field_name": "mlcategory", "detector_description": "Unusual message counts" }], "categorization_analyzer":{ "tokenizer": { "type" : "simple_pattern_split", "pattern" : "[^-0-9A-Za-z_.]+" }, "filter": [ { "type" : "pattern_replace", "pattern": "^[0-9].*" }, { "type" : "pattern_replace", "pattern": "^[-0-9A-Fa-f.]+$" }, { "type" : "pattern_replace", "pattern": "^[^0-9A-Za-z]+" }, { "type" : "pattern_replace", "pattern": "[^0-9A-Za-z]+$" }, { "type" : "stop", "stopwords": [ "", "Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday", "Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun", "January", "February", "March", "April", "May", "June", "July", "August", "September", "October", "November", "December", "Jan", "Feb", "Mar", "Apr", "May", "Jun", "Jul", "Aug", "Sep", "Oct", "Nov", "Dec", "GMT", "UTC" ] } ] } }, "analysis_limits":{ "categorization_examples_limit": 5 }, "data_description" : { "time_field":"time", "time_format": "epoch_ms" } }

|

Tokens basically consist of hyphens, digits, letters, underscores and dots. |

|

|

By default, categorization ignores tokens that begin with a digit. |

|

|

By default, categorization also ignores tokens that are hexadecimal numbers. |

|

|

Underscores, hyphens, and dots are removed from the beginning of tokens. |

|

|

Underscores, hyphens, and dots are also removed from the end of tokens. |

The key difference between the default categorization_analyzer and this example

analyzer is that using the ml_classic tokenizer is several times faster. The

difference in behavior is that this custom analyzer does not include accented

letters in tokens whereas the ml_classic tokenizer does, although that could

be fixed by using more complex regular expressions.

If you are categorizing non-English messages in a language where words are separated by spaces, you might get better results if you change the day or month words in the stop token filter to the appropriate words in your language. If you are categorizing messages in a language where words are not separated by spaces, you must use a different tokenizer as well in order to get sensible categorization results.

It is important to be aware that analyzing for categorization of machine generated log messages is a little different from tokenizing for search. Features that work well for search, such as stemming, synonym substitution, and lowercasing are likely to make the results of categorization worse. However, in order for drill down from machine learning results to work correctly, the tokens that the categorization analyzer produces must be similar to those produced by the search analyzer. If they are sufficiently similar, when you search for the tokens that the categorization analyzer produces then you find the original document that the categorization field value came from.

To add the categorization_analyzer property in Kibana, you must use the

Edit JSON tab and copy the categorization_analyzer object from one of the

API examples above.

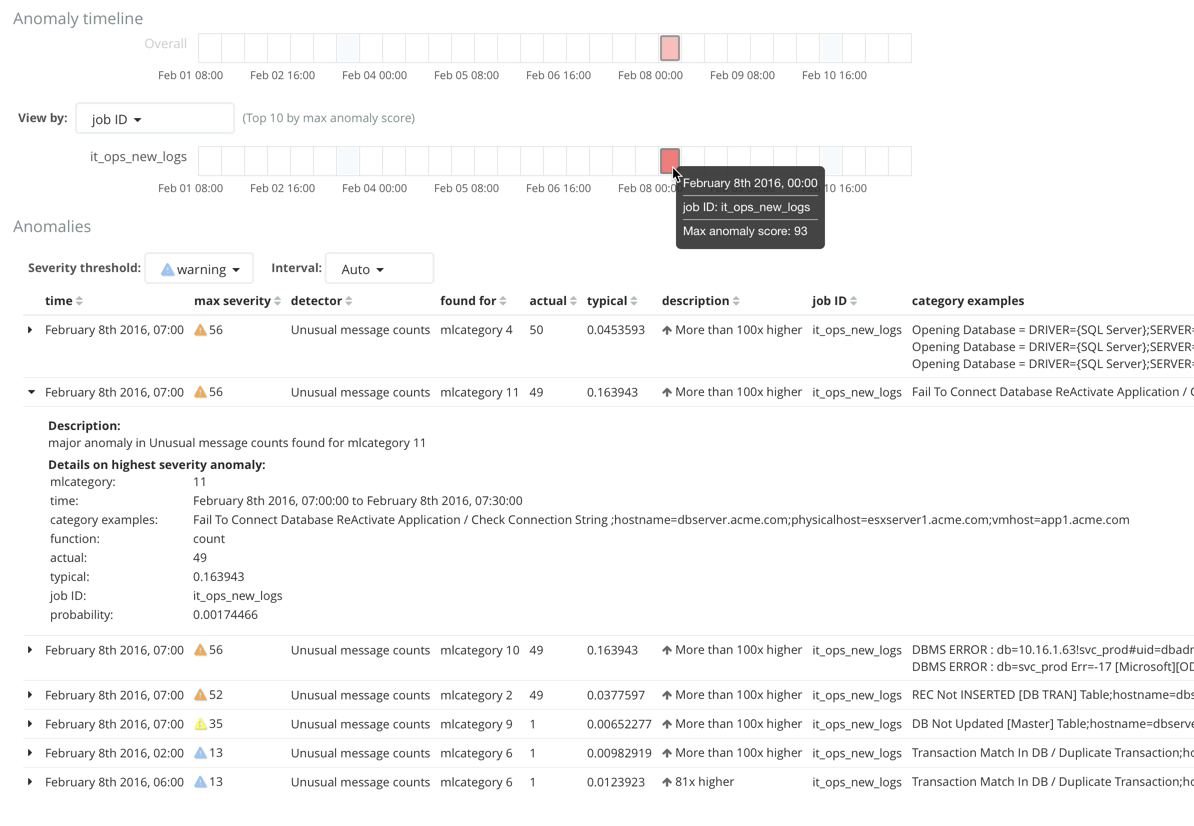

Viewing categorization results

editAfter you open the job and start the datafeed or supply data to the job, you can view the categorization results in Kibana. For example:

For this type of job, the Anomaly Explorer contains extra information for

each anomaly: the name of the category (for example, mlcategory 11) and

examples of the messages in that category. In this case, you can use these

details to investigate occurrences of unusually high message counts for specific

message categories.