Filebeat Reference


Manage multiline messages


The files harvested by Filebeat may contain messages that span multiple lines of text. For example, multiline messages are common in files that contain Java stack traces. To handle these multiline events correctly, configure multiline settings in the filebeat.yml file that specify which lines are part of a single event.

If you are sending multiline events to Logstash, use the options described here to combine multiline events in Filebeat before sending the event data to Logstash. Handling multiline events in Logstash instead (for example, with the Logstash multiline codec) may mix streams and corrupt data.

Also read YAML tips and gotchas and Regular expression support to avoid common mistakes.

Configuration options

You can specify the following options in the filebeat.inputs section of the filebeat.yml config file to control how Filebeat deals with messages that span multiple lines.

The following example shows how to configure Filebeat to handle a multiline message where the first line of the message begins with a bracket ([).

multiline.pattern: '^\['
multiline.negate: true
multiline.match: after

Filebeat takes all the lines that do not start with [ and combines them with the previous line that does. For example, you could use this configuration to join the following lines of a multiline message into a single event:

[beat-logstash-some-name-832-2015.11.28] IndexNotFoundException[no such index]
    at org.elasticsearch.cluster.metadata.IndexNameExpressionResolver$WildcardExpressionResolver.resolve(IndexNameExpressionResolver.java:566)
    at org.elasticsearch.cluster.metadata.IndexNameExpressionResolver.concreteIndices(IndexNameExpressionResolver.java:133)
    at org.elasticsearch.cluster.metadata.IndexNameExpressionResolver.concreteIndices(IndexNameExpressionResolver.java:77)
    at org.elasticsearch.action.admin.indices.delete.TransportDeleteIndexAction.checkBlock(TransportDeleteIndexAction.java:75)
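The multiline settings shown earlier are set on an individual input inside the filebeat.inputs section, not at the top level of filebeat.yml. The following is a minimal sketch of a complete input, assuming a log-type input; the path is a hypothetical placeholder.

```yaml
filebeat.inputs:
- type: log
  # Hypothetical path; replace with the files you want to harvest.
  paths:
    - /var/log/myapp/*.log
  # A new event starts at every line that begins with "[".
  # All other lines are appended to the preceding "[" line.
  multiline.pattern: '^\['
  multiline.negate: true
  multiline.match: after
```

Because the settings are per input, other inputs in the same file can use different multiline rules or none at all.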

multiline.pattern

Specifies the regular expression pattern to match. Note that the regexp patterns supported by Filebeat differ somewhat from the patterns supported by Logstash. See Regular expression support for a list of supported regexp patterns. Depending on how you configure the other multiline options, lines that match the specified regular expression are considered either continuations of a previous line or the start of a new multiline event. You can set the negate option to negate the pattern.
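As an illustration of a pattern that matches continuation lines, here is a sketch for Java stack traces. It assumes that every continuation line of a trace begins with whitespace, as stack frame lines such as "    at com.example..." usually do; the path is hypothetical.

```yaml
filebeat.inputs:
- type: log
  paths:
    - /var/log/myapp/*.log   # hypothetical path
  # Match lines that begin with a whitespace character.
  # [[:space:]] is a POSIX character class, which RE2-style regexps accept.
  multiline.pattern: '^[[:space:]]'
  # Matching (indented) lines are appended to the previous non-indented line.
  multiline.negate: false
  multiline.match: after
```

Under this assumption, a line such as "Caused by: ..." that starts without whitespace begins a new event; the pattern would need an extra alternative to keep it in the same event.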

multiline.negate

Defines whether the pattern is negated. The default is false.
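With negate set to false and match set to after, the pattern describes continuation lines; setting negate to true flips this so the pattern describes the first line of each event. The following sketch assumes a hypothetical log format in which every event starts with a bracketed date such as "[2015-08-24".

```yaml
filebeat.inputs:
- type: log
  paths:
    - /var/log/myapp/*.log   # hypothetical path
  # Hypothetical format: each event begins with "[YYYY-MM-DD".
  multiline.pattern: '^\[[0-9]{4}-[0-9]{2}-[0-9]{2}'
  # negate: true means lines that do NOT start with a date are continuations,
  # and match: after appends them to the preceding dated line.
  multiline.negate: true
  multiline.match: after
```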

multiline.match

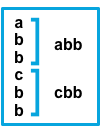

Specifies how Filebeat combines matching lines into an event. The settings are after or before. The behavior of these settings depends on what you specify for negate:

- negate: false, match: after. Consecutive lines that match the pattern are appended to the previous line that doesn't match.
- negate: false, match: before. Consecutive lines that match the pattern are prepended to the next line that doesn't match.
- negate: true, match: after. Consecutive lines that don't match the pattern are appended to the previous line that does match.
- negate: true, match: before. Consecutive lines that don't match the pattern are prepended to the next line that does match.

The after setting is equivalent to previous in Logstash, and before is equivalent to next.
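The before setting suits formats where a line announces that the event continues on the next line. The sketch below assumes a hypothetical log in which a trailing backslash marks a continued line; with negate: false and match: before, each line ending in a backslash is prepended to the next line that doesn't end in one.

```yaml
filebeat.inputs:
- type: log
  paths:
    - /var/log/myapp/*.log   # hypothetical path
  # In a single-quoted YAML string backslashes are literal, so the regexp
  # is \\$ : a literal "\" immediately before the end of the line.
  multiline.pattern: '\\$'
  multiline.negate: false
  # Prepend each matching line to the next line that does not match.
  multiline.match: before
```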

multiline.flush_pattern

Specifies a regular expression that, when matched by a line, causes Filebeat to flush the current multiline event from memory, ending the multiline message.
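flush_pattern is useful when events have an explicit end marker as well as a start marker. The sketch below assumes a hypothetical application log in which each event begins with a line containing "Start new event" and finishes with a line containing "End event".

```yaml
filebeat.inputs:
- type: log
  paths:
    - /var/log/myapp/*.log   # hypothetical path
  # A line containing "Start new event" begins a new event; all other
  # lines are appended to it.
  multiline.pattern: 'Start new event'
  multiline.negate: true
  multiline.match: after
  # A line containing "End event" ends the current event immediately,
  # instead of waiting for the next start line or the timeout.
  multiline.flush_pattern: 'End event'
```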

multiline.max_lines

The maximum number of lines that can be combined into one event. If the multiline message contains more than max_lines, any additional lines are discarded. The default is 500.

multiline.timeout

After the specified timeout, Filebeat sends the multiline event even if no new line matching the pattern is found to start a new event. The default is 5s.
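The two limits can be tuned together on the same input. The values below are illustrative only, not recommendations: a larger max_lines keeps more of a very long stack trace, and a longer timeout gives slow writers more time before a pending event is sent. The path is hypothetical.

```yaml
filebeat.inputs:
- type: log
  paths:
    - /var/log/myapp/*.log   # hypothetical path
  multiline.pattern: '^\['
  multiline.negate: true
  multiline.match: after
  # Illustrative value: combine up to 1000 lines per event (default 500);
  # lines beyond this limit are discarded.
  multiline.max_lines: 1000
  # Illustrative value: send a pending multiline event after 10s without
  # a new event starting (default 5s).
  multiline.timeout: 10s
```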
