Playground is a low-code interface that enables developers to iterate on and build production RAG applications by A/B testing LLMs, tuning prompts, and chunking data. With support for Amazon Bedrock, Playground gives you a wider selection of foundation models from Amazon, Anthropic, and other leading providers. Developers using Amazon Bedrock and Elasticsearch can refine retrieval to ground answers in private or proprietary data indexed into one or more Elasticsearch indices.

A/B test LLMs & retrieval with Playground inference via Amazon Bedrock

The Playground interface lets you experiment with and A/B test different LLMs from leading model providers such as Amazon and Anthropic. However, picking a model is only part of the problem. Developers must also consider how to retrieve relevant search results that fit within a model’s context window (i.e., the number of tokens a model can process). Retrieving text passages longer than the context window can lead to truncation and, therefore, loss of information. Text that is much smaller than the context window may not embed correctly, making the representation inaccurate. Further complexity can arise from having to combine retrieval across different data sources.
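To make the context-window constraint concrete, here is a minimal sketch of keeping ranked passages within a token budget. The function names, the `reserved_tokens` parameter, and the word-count approximation of tokens are all assumptions for illustration; real tokenizers count differently, and this is not how Playground itself measures context.

```python
def approx_token_count(text):
    # Assumption: one whitespace-separated word is roughly one token.
    # Real model tokenizers will produce different counts.
    return len(text.split())


def select_passages(passages, max_context_tokens, reserved_tokens=0):
    """Keep passages, in rank order, until the token budget is exhausted.

    reserved_tokens is a hypothetical allowance for the prompt and the answer.
    """
    budget = max_context_tokens - reserved_tokens
    selected, used = [], 0
    for passage in passages:
        cost = approx_token_count(passage)
        if used + cost > budget:
            # Stop at the first passage that does not fit, preserving rank order.
            break
        selected.append(passage)
        used += cost
    return selected, used


ranked_passages = [
    "alpha beta gamma",
    "delta epsilon",
    "zeta eta theta iota",
]
selected, used = select_passages(ranked_passages, max_context_tokens=6)
```

Stopping at the first passage that does not fit keeps the highest-ranked results and avoids sending a truncated passage to the model.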

Playground brings together a number of Elasticsearch features into a simple, yet powerful interface for tuning RAG workflows:

- work with a growing list of model sources, including Amazon Bedrock, to choose the best LLM for your needs

- use semantic_text to tune chunking strategies to fit your data and context window size

- use retrievers to add multi-stage retrieval pipelines (including reranking)
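As a rough illustration of the semantic_text item above, the sketch below builds an index mapping with a `semantic_text` field and a matching `semantic` query as plain Python dictionaries. The index name, field name, and inference endpoint ID are hypothetical placeholders, and the exact options available may vary by Elasticsearch version.

```python
# Hypothetical names used only for this example.
INDEX_NAME = "my-docs"
INFERENCE_ID = "my-embedding-endpoint"


def semantic_text_mappings(field="content", inference_id=INFERENCE_ID):
    """Mapping with one semantic_text field bound to an inference endpoint."""
    return {
        "properties": {
            field: {"type": "semantic_text", "inference_id": inference_id}
        }
    }


def semantic_query(question, field="content"):
    """Query clause that searches the semantic_text field."""
    return {"semantic": {"field": field, "query": question}}


mappings = semantic_text_mappings()
query = semantic_query("How do I connect Amazon Bedrock?")

# With the official Python client these might be sent as (not executed here):
#   client.indices.create(index=INDEX_NAME, mappings=mappings)
#   client.search(index=INDEX_NAME, query=query)
```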

After tuning the context sent to the models to your desired production standards, you can export the code and finalize your application using the Elasticsearch Python client or the LangChain Python integration.
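The following is a minimal sketch of the kind of glue code such an application might contain: it turns search hits into a grounded prompt. It is not the code Playground exports. The `build_rag_prompt` helper, the `content` field, and the prompt wording are assumptions, and the sample response only mimics the `hits.hits[]._source` shape of an Elasticsearch search response for a plain text field.

```python
def build_rag_prompt(question, search_response, text_field="content", max_passages=3):
    """Assemble a grounded prompt from the top search hits."""
    hits = search_response.get("hits", {}).get("hits", [])
    passages = []
    for hit in hits[:max_passages]:
        text = hit.get("_source", {}).get(text_field)
        if text:
            passages.append(text)
    # Number the passages so the model can refer to them.
    context = "\n\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


# Hand-written stand-in for a search response; no cluster is contacted.
sample_response = {
    "hits": {
        "hits": [
            {"_id": "1", "_source": {"content": "Playground supports Amazon Bedrock."}},
            {"_id": "2", "_source": {"content": "Retrievers enable hybrid search."}},
        ]
    }
}
prompt = build_rag_prompt("Which providers are supported?", sample_response)
```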

Today’s announcement brings access to models hosted on Amazon Bedrock through the Open Inference API integration, along with the ability to use the new semantic_text field type. We hope you enjoy this experience!
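For orientation, here is a hedged sketch of what a request to register an Amazon Bedrock completion endpoint through the inference API might look like. The endpoint ID, region, and environment variable names are hypothetical, and the `service_settings` field names are given as I understand the Amazon Bedrock inference service; check them against the documentation for your Elasticsearch version before use.

```python
import os


def bedrock_completion_endpoint(inference_id, region, model_id, access_key, secret_key):
    """Return the request path and body for a Bedrock-backed completion endpoint."""
    path = f"/_inference/completion/{inference_id}"
    body = {
        "service": "amazonbedrock",
        "service_settings": {
            "access_key": access_key,
            "secret_key": secret_key,
            "region": region,
            "provider": "anthropic",
            "model": model_id,
        },
    }
    return path, body


path, body = bedrock_completion_endpoint(
    inference_id="my-bedrock-claude",  # hypothetical endpoint ID
    region="us-east-1",                # assumed region
    model_id="anthropic.claude-3-haiku-20240307-v1:0",
    # Credentials are read from hypothetical environment variables, never hard-coded.
    access_key=os.environ.get("AWS_ACCESS_KEY_ID", "<access-key>"),
    secret_key=os.environ.get("AWS_SECRET_ACCESS_KEY", "<secret-key>"),
)
# The body would be sent with an HTTP PUT to `path` on your cluster (not done here).
```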

Playground brings these composable elements together into a true developer toolset for rapid iteration, matching the pace that developers need.

Using Elastic's Playground

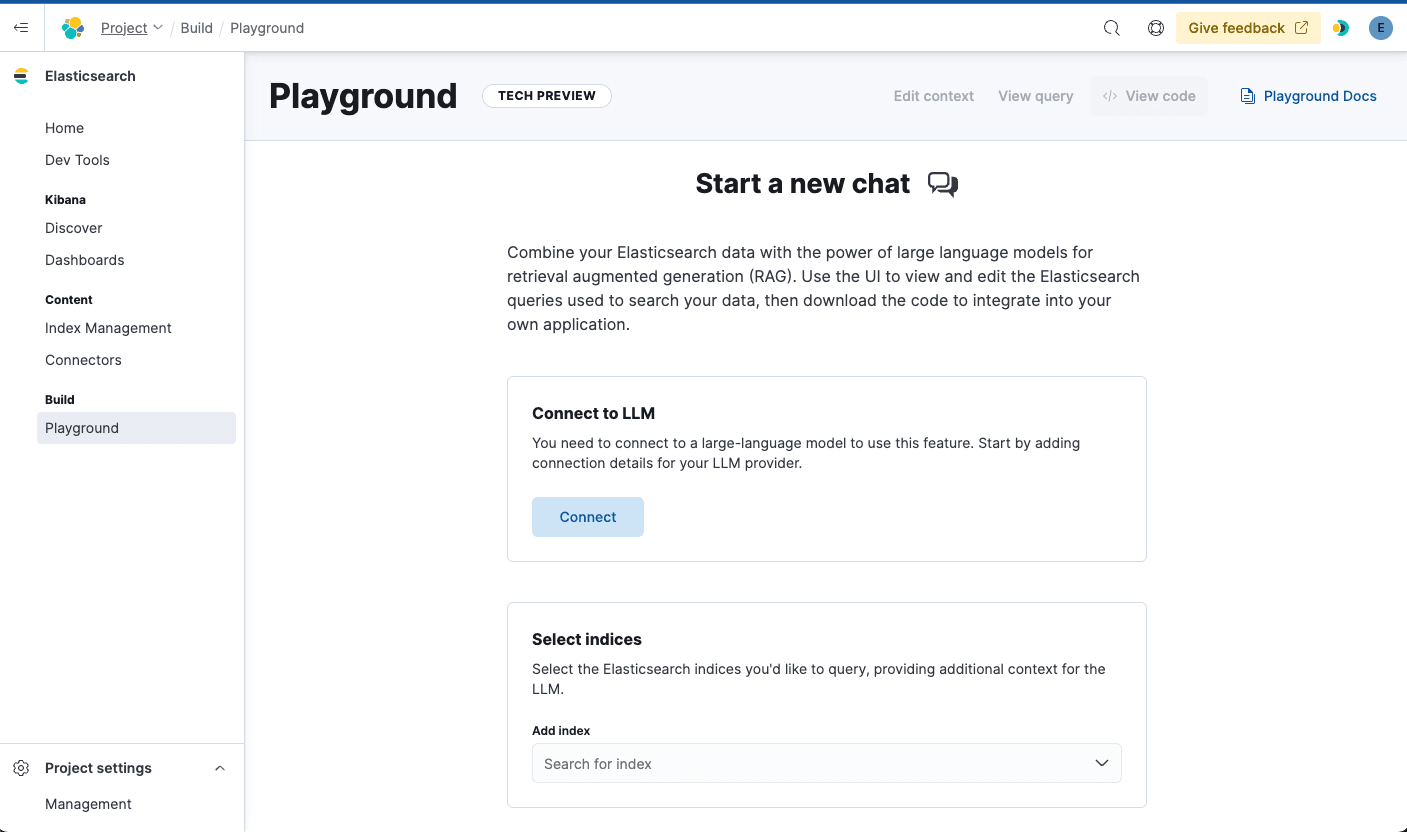

Within Kibana (the Elasticsearch UI), navigate to “Playground” from the navigation menu on the left-hand side. To start, you need to connect to a model provider to bring your LLM of choice. Playground supports chat completion models such as Anthropic’s Claude through Amazon Bedrock.

This blog provides detailed steps and instructions to connect and configure the playground experience.

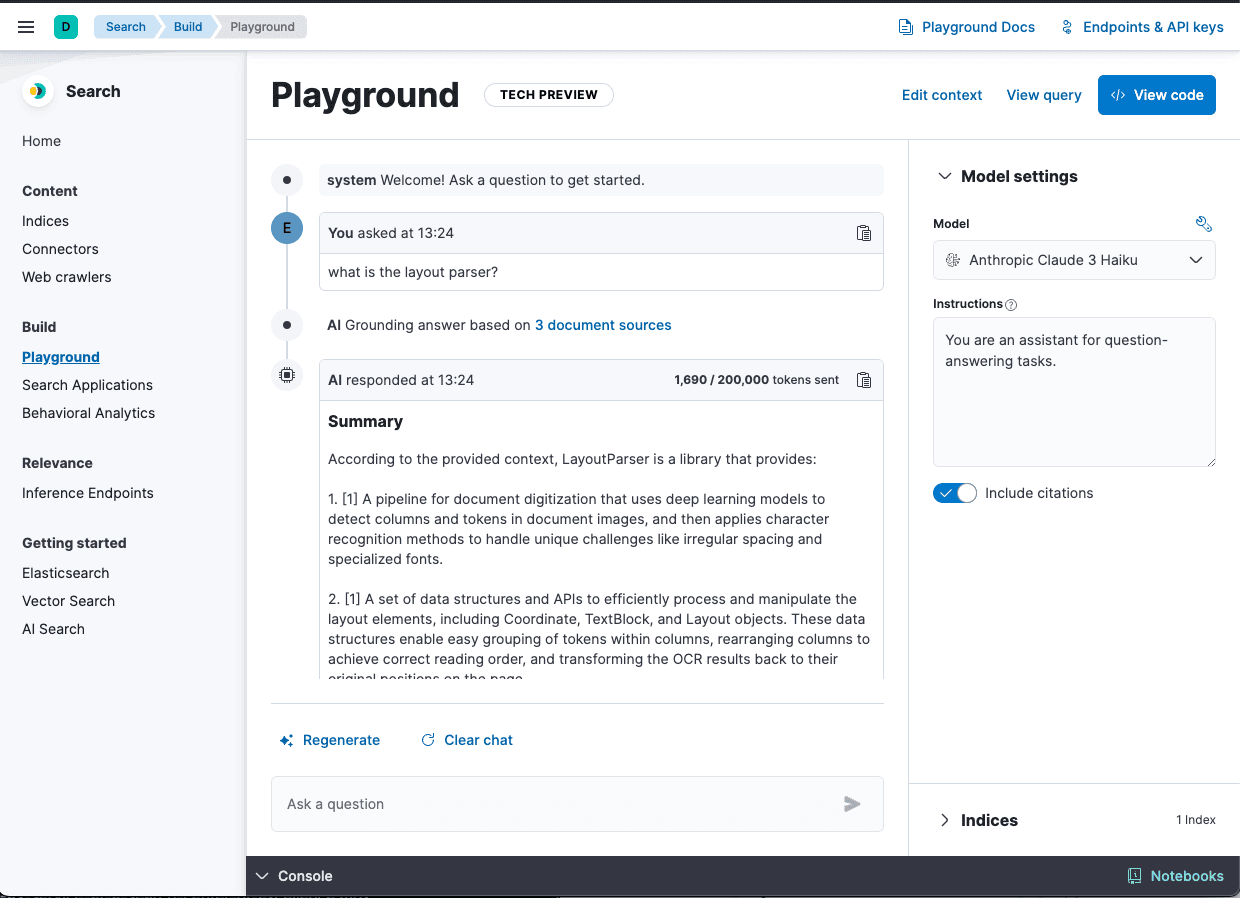

Once you have connected an LLM and chosen an Elasticsearch index, you can start asking questions about information in your index. The LLM will provide answers based on context from your data.

Connect an LLM of choice and Elasticsearch indices with private proprietary information

Instantly chat with your data and assess responses from models such as Anthropic Claude 3 Haiku in this example

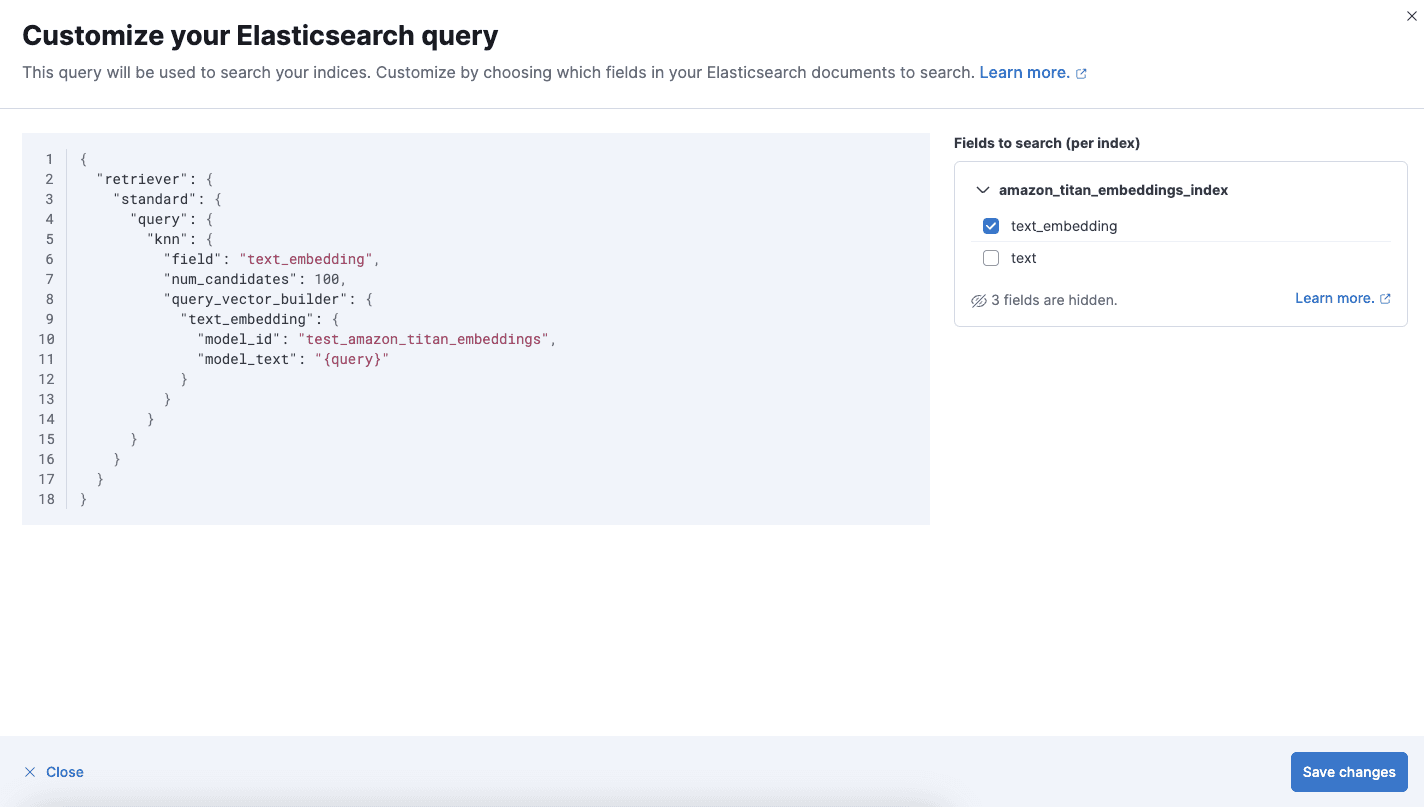

Review and customize text and retriever queries to indices that store vector embeddings

Using retrievers and hybrid search for the best context

Elastic’s hybrid search helps you build the best context windows. Effective context windows are built from various types of vectorized and plain text data that can be spread across multiple indices. Developers can now take advantage of new query retrievers to simplify query creation. Three new retrievers are available from version 8.14 and on Elastic Cloud Serverless, and implementing hybrid search normalized with reciprocal rank fusion (RRF) is one unified query away. You can store vectorized data and use a kNN retriever, or add metadata and context to create a hybrid search query. Soon, you will also be able to add semantic reranking to further improve search results.
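To show what RRF-normalized hybrid search involves, the sketch below has two parts: a plain-Python version of reciprocal rank fusion, and a request body that combines a `standard` and a `knn` retriever under `rrf`. The field names, the `k` and `num_candidates` values, and the helper names are assumptions, and the fusion function only illustrates the scoring idea rather than reproducing Elasticsearch's implementation.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, rank_constant=60):
    """Fuse ranked lists of document IDs: score = sum of 1 / (rank_constant + rank)."""
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (rank_constant + rank)
    # Highest fused score first; ties broken by document ID for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


def hybrid_retriever(query_text, query_vector, text_field="content", vector_field="embedding"):
    """Search body combining lexical and kNN retrieval under an rrf retriever."""
    return {
        "retriever": {
            "rrf": {
                "retrievers": [
                    {"standard": {"query": {"match": {text_field: query_text}}}},
                    {
                        "knn": {
                            "field": vector_field,
                            "query_vector": query_vector,
                            "k": 10,
                            "num_candidates": 50,
                        }
                    },
                ]
            }
        }
    }


lexical_ranking = ["doc_a", "doc_b", "doc_c"]
vector_ranking = ["doc_c", "doc_a", "doc_d"]
fused = reciprocal_rank_fusion([lexical_ranking, vector_ranking])

body = hybrid_retriever("bedrock playground", [0.1, 0.2, 0.3])
```

Because RRF works on ranks rather than raw scores, lexical and vector results can be combined without normalizing their score scales first.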

Use Playground to ship conversational search—quickly

Building conversational search experiences can involve many approaches, and the choices can be paralyzing, especially given the pace of innovation in new reranking and retrieval techniques, both of which apply to RAG applications.

With Playground, those choices become simple and intuitive, even with the vast array of capabilities available to the developer. Our approach is unique in making hybrid search a core pillar from the start, giving you an intuitive view of the shape of your selected and chunked data, and broadening access to LLMs across multiple external providers.

Earlier this year, Elastic was awarded the AWS Generative AI Competency, a distinction given to very few AWS partners that provide differentiating generative AI tools. Elastic’s approach to adding Bedrock support to the Playground experience is guided by the same principle: to bring new and innovative capabilities to developers using Elastic Cloud on AWS.

Build, test, and have fun with Playground

Head over to Playground docs to get started today! Explore Search Labs on GitHub for new cookbooks and integrations for providers such as Cohere, Anthropic, Azure OpenAI, and more.
