Elastic Search 8.15: Accessible semantic search with semantic text and reranking

In 8.15, great search results are even more accessible for our customers. Our latest release brings semantic reranking, additional vector search tools, and more third-party model providers and promotes our native Learning to Rank (LTR) to generally available. And now search is more performant than ever with additional speed and efficiency improvements.

Elastic Search 8.15 is available now on Elastic Cloud — the only hosted Elasticsearch offering to include all of the new features in this latest release. You can also download the Elastic Stack and our cloud orchestration products, Elastic Cloud Enterprise and Elastic Cloud on Kubernetes, for a self-managed experience.

What else is new in Elastic 8.15? Check out the 8.15 announcement post to learn more.

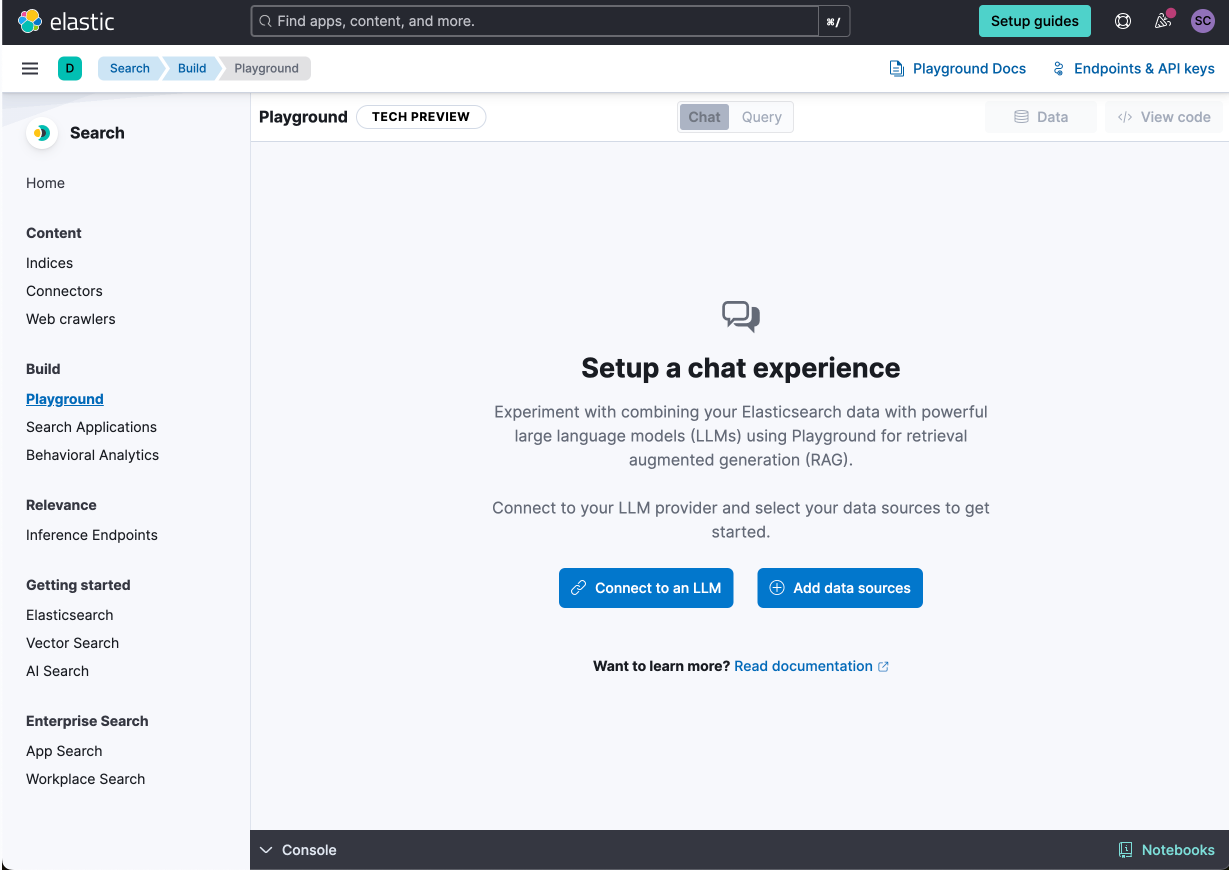

Semantic search at your fingertips

Elasticsearch 8.15 introduces semantic text and semantic reranking — two powerful ways to bring natural language search into your search experiences.

We continue to make vector search more accessible with semantic text — a new field type and corresponding semantic query type that unlocks a streamlined approach to vector search for text. In particular, semantic text handles automated chunking, so users with long text fields can rest easy knowing they have full multi-embedding coverage and handling done under the hood. Vector search customers can just set a semantic text field to get started with model coordination and embedding generation handled automatically by the inference API.

For users who want semantic search without vectors or to further fortify their vector or hybrid search, 8.15 also introduces semantic reranking. This feature can dramatically improve search results, especially natural language search, by applying powerful semantic similarity models to refine the Top N results of a search query. This addition is a potentially powerful improvement for any search system, but it's especially valuable for users seeking to improve natural language search capabilities without incorporating vector embeddings.

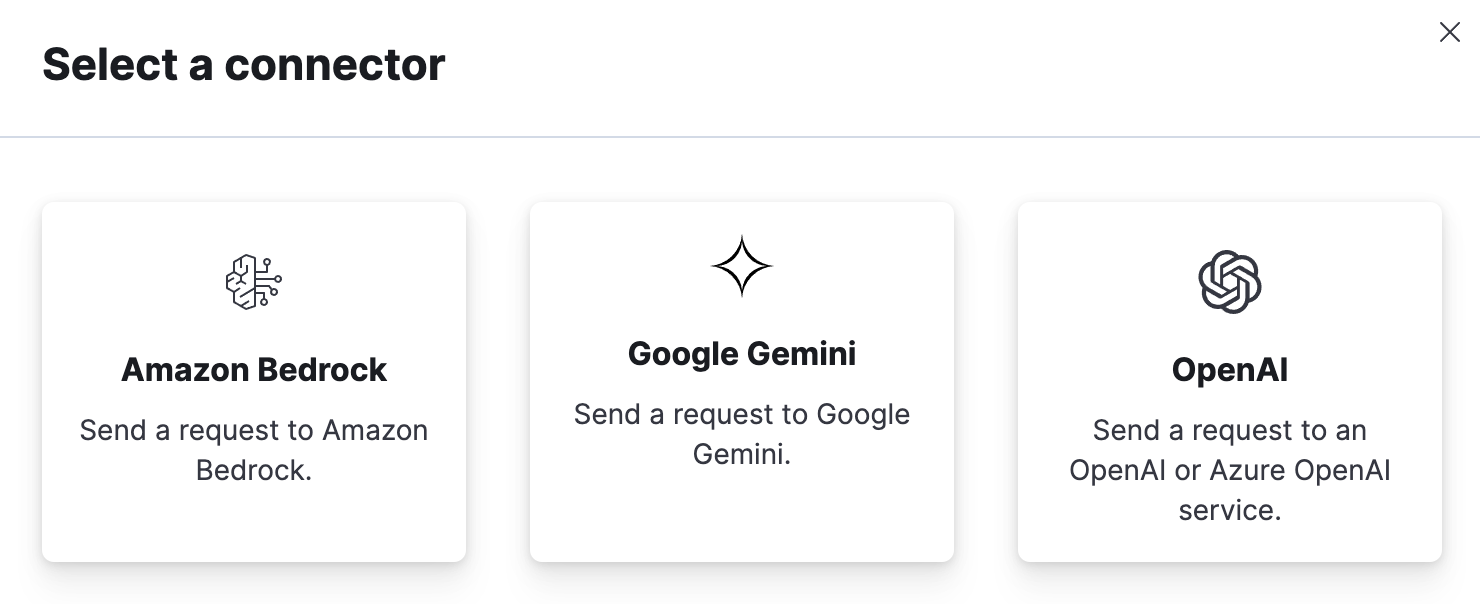

More model flexibility with additional third-party providers

Elasticsearch's open inference API continues to give our users even more model flexibility with additional third-party providers. 8.15 introduces:

Google AI Studio (embeddings and completions) and Vertex AI (embeddings and reranking)

Mistral (embeddings)

Amazon Bedrock (embeddings and completions)

Anthropic (completions)

All of these model providers are easily configured as an inference endpoint by providing a simple API key and then can be used with retrievers for simple and easy composability of the search query.

More tools to fine-tune relevance

Elasticsearch's native Learning to Rank and query rules features are generally available as of 8.15, offering users even finer control over their search relevance and results.

Learning to Rank is built directly into Elasticsearch and expands the search relevance tuning pipeline of our search users, so they can rerank results with an LTR model trained on the features that matter to them. This runs directly in Elasticsearch and enables more fine-tuned and context-aware search results that can adapt to complex search and user behavior patterns. And as of 8.15, you can also rescore collapsed results, which means you can use LTR to rerank your field collapsed results.

Query rules introduce the ability to apply business rules to the search set even with the use of machine learning models for hybrid search. Users can specify the desired documents to pin to the top of search results when the defined conditions are met — enabling search results that are tailored by query, user segment, and more, and it works with any combination of hybrid search and RRF.

- Playground now supports Google Gemini!

More vector search options, speed, and efficiency for customers

Scalar quantization improvements continue to balance excellent accuracy with even better efficiency, including int-4 quantization. There are also more vector search options with the new sparse vector query type, Hamming distance, and bit-encoded vector support. Read more about these and other vector database performance improvements in the Elasticsearch Platform highlights blog.

Try it out

Read about these capabilities and more in the release notes.

Existing Elastic Cloud customers can access many of these features directly from the Elastic Cloud console. Not taking advantage of Elastic on cloud? Start a free trial.

The release and timing of any features or functionality described in this post remain at Elastic's sole discretion. Any features or functionality not currently available may not be delivered on time or at all.

In this blog post, we may have used or referred to third party generative AI tools, which are owned and operated by their respective owners. Elastic does not have any control over the third party tools and we have no responsibility or liability for their content, operation or use, nor for any loss or damage that may arise from your use of such tools. Please exercise caution when using AI tools with personal, sensitive or confidential information. Any data you submit may be used for AI training or other purposes. There is no guarantee that information you provide will be kept secure or confidential. You should familiarize yourself with the privacy practices and terms of use of any generative AI tools prior to use.

Elastic, Elasticsearch, ESRE, Elasticsearch Relevance Engine and associated marks are trademarks, logos or registered trademarks of Elasticsearch N.V. in the United States and other countries. All other company and product names are trademarks, logos or registered trademarks of their respective owners.