Relevance workbench

In this workbench, you can compare our Elastic Learned Sparse Encoder (ELSER) model, with or without Reciprocal Rank Fusion (RRF), against traditional text search using BM25.

Start comparing different hybrid search techniques using TMDB's movie dataset as sample data, or fork the code and ingest your own data to try it yourself.
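Reciprocal Rank Fusion merges the result lists of several searches, such as a BM25 query and an ELSER query, using only each document's rank in each list: a document scores the sum of 1 / (k + rank) over every list it appears in. The sketch below is a minimal, standalone Python version of that formula, not the workbench's or Elasticsearch's own code. The function name and document IDs are hypothetical, and k = 60 is assumed as a commonly used constant.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into one list.

    Hypothetical helper for illustration. Each document scores
    sum(1 / (k + rank)) over every list it appears in, with ranks
    starting at 1. Lists that omit a document add nothing for it.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by document ID for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical top-3 results from a BM25 query and an ELSER query.
bm25_hits = ["doc_a", "doc_b", "doc_c"]
elser_hits = ["doc_c", "doc_a", "doc_d"]
print(reciprocal_rank_fusion([bm25_hits, elser_hits]))
# ['doc_a', 'doc_c', 'doc_b', 'doc_d']
```

Here `doc_a` comes first because it ranks highly in both lists, even though `doc_c` tops the ELSER list. Because RRF uses ranks rather than raw scores, BM25 and ELSER scores, which live on unrelated scales, never need to be normalized against each other.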

Try these queries to get started:

- "The Matrix"

- "Movies in Space"

- "Superhero animated movies"

Some queries work well with both search techniques: "The Matrix", for example, returns good results with both. For queries like "Superhero animated movies", however, the Elastic Learned Sparse Encoder model outperforms BM25. This is because the model searches semantically: it can match relevant movies even when their text does not contain the query's exact words, whereas BM25 only scores documents on the terms they share with the query.
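To illustrate why keyword scoring struggles with a query like "Superhero animated movies", here is a toy Okapi BM25 scorer in Python. It is a sketch, not the workbench's implementation: tokenization is assumed to be a naive lowercase whitespace split, the two movie descriptions are made up, and k1 = 1.2 and b = 0.75 are assumed as the usual default parameters.

```python
import math
from collections import Counter


def bm25_scores(query, docs, k1=1.2, b=0.75):
    """Score each document against the query with Okapi BM25.

    Naive lowercase whitespace tokenization is an assumption for
    illustration; real analyzers also stem, strip punctuation, etc.
    """
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(toks) for toks in tokenized) / n_docs
    # Document frequency: how many documents contain each term.
    df = Counter(term for toks in tokenized for term in set(toks))
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue  # no lexical match, so no contribution
            # Lucene-style IDF, which is always positive.
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Term-frequency saturation (k1) and length normalization (b).
            norm = tf[term] * (k1 + 1) / (
                tf[term] + k1 * (1 - b + b * len(toks) / avg_len)
            )
            score += idf * norm
        scores.append(score)
    return scores


# Hypothetical descriptions: the first is the better answer, but shares no words with the query.
docs = [
    "a cartoon about a teenager who gains spider powers and fights crime",
    "a documentary about the history of animated movies",
]
scores = bm25_scores("superhero animated movies", docs)
print(scores[0] == 0.0, scores[1] > 0.0)  # True True
```

The animated superhero story scores exactly zero because none of its words appear in the query, while the documentary wins on "animated" and "movies" alone. A semantic model can bridge that vocabulary gap, which is the behavior the workbench lets you compare.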

Explore similar demos

Search

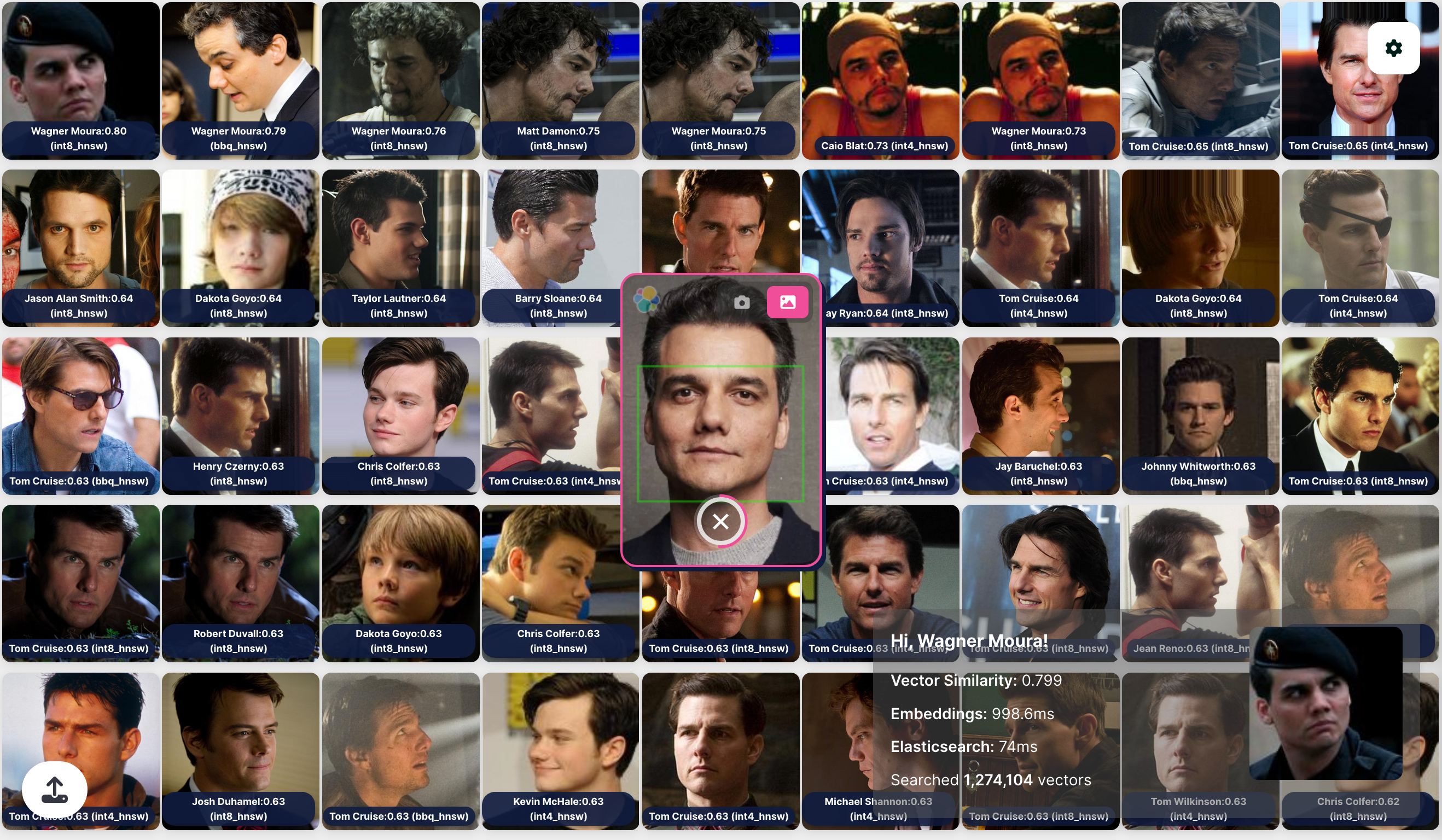

Vector Search - Celebrity Faces

Observability

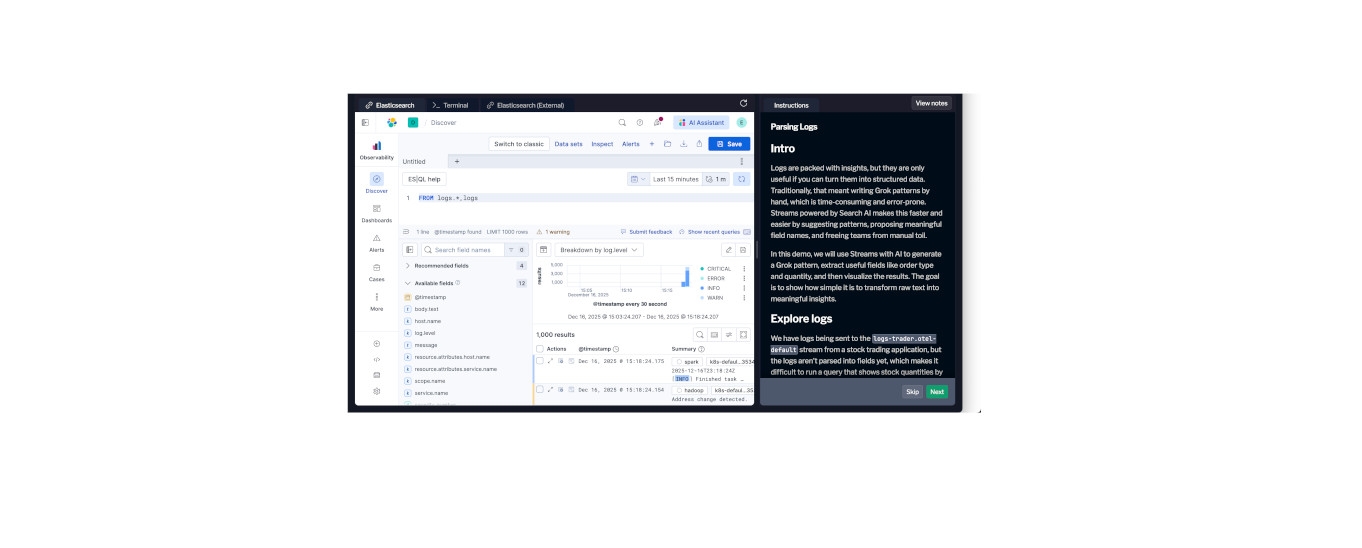

Working with Streams - Onboarding to Insights

In this hands-on workshop you will onboard new data utilizing Streams. Through the use of AI we will dynamically parse data, as well as showcase how to handle potential issues with data quality. Finally, you will learn how to easily detect events of interest while onboarding data.