Air-gapped ECK implementation: Strengthening DoD DevSecOps

In 2021, the Department of Defense (DoD) released a Strategy Guide to adopt a DevSecOps culture for deploying software at the "speed of relevance." Monitoring, alerting, auditing, and securing of CI/CD pipelines, applications, and infrastructure are crucial to the entire DevSecOps lifecycle, making Elastic® a powerful tool in your DevSecOps toolkit.

Many organizations in the DoD do not have direct access to the internet, which adds complexity and slows down the rate of deploying applications and Elastic. Kubernetes can help speed up deployments and remove the headache of transferring virtual machines in an air-gapped environment. But how can we run Elastic Cloud on Kubernetes (ECK) in these air-gapped environments?

Challenges of implementing Kubernetes in the DoD

OK, so Kubernetes can help us speed up deployments and adopt the DevSecOps culture, so let’s spin up any cluster and run ECK, right?

For organizations in the DoD, absolutely not. For everyone else, IT depends.

The DoD has many policies and procedures, such as the Risk Management Framework (RMF), that govern how infrastructure and software get into production. Using just any operating system, container, cloud service provider (CSP), or Kubernetes cluster is therefore not feasible.

Securing applications or infrastructure to these standards is not easy: building everything from the ground up and staying compliant takes a lot of time and people. For the Elastic Stack, some existing options address this problem, such as Elastic's FedRAMP-authorized cloud offering or deploying Elastic to virtual machines in your CSP's government environment. However, most organizations will be on-premises. So how do you adopt the DevSecOps culture, stay compliant, and deploy the Elastic Stack without building everything from the ground up?

Deploy the hardened Elastic images from Platform One's Iron Bank into a DISA STIG certified RKE2 Kubernetes cluster.

Environment setup

All the source code can be found here if you would like to follow along.

This environment runs in GCP on the RHEL 8 STIG image. It consists of one private container registry, one load balancer, three RKE2 server nodes, and five RKE2 agent nodes.

Deployment

We use a combination of Terraform and Ansible to set up the private container registry, the images, and the RKE2 cluster.

Note: This deployment takes over an hour because there are many large Docker images to download and build.

To configure the environment, complete the following steps, which can be found here:

1. Make sure your Google credentials are set up according to Terraform's instructions.

2. Create an SSH key with a passphrase.

3. Get your MaxMind license key.

4. Update auto.tfvars with your variables.

5. Deploy the infrastructure with Terraform.

6. Deploy RKE2 with Ansible.
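The steps above can be approximated with a short shell script. This is a minimal sketch under stated assumptions, not the repository's own tooling. The key path `~/.ssh/rke2-airgap`, the inventory `inventory.ini`, and the playbook `site.yml` are hypothetical names. The script also assumes your Google credentials and Terraform variables are already in place.

```shell
#!/usr/bin/env bash
# Minimal sketch of the environment deployment, NOT the repository's script.
# Hypothetical names: ~/.ssh/rke2-airgap, inventory.ini, and site.yml.

# Print the name of each required command that is not on PATH.
missing_tools() {
  local tool
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

deploy_environment() {
  local key="$HOME/.ssh/rke2-airgap" missing
  missing=$(missing_tools terraform ansible-playbook ssh-keygen ssh-agent ssh-add)
  if [ -n "$missing" ]; then
    printf 'missing tools:\n%s\n' "$missing" >&2
    return 1
  fi

  # Passphrase-protected key: ssh-keygen prompts for the passphrase.
  [ -f "$key" ] || ssh-keygen -t ed25519 -f "$key" || return 1
  # Cache the key in an agent so Ansible does not prompt once per node.
  eval "$(ssh-agent -s)" >/dev/null
  ssh-add "$key" || return 1

  # Terraform auto-loads terraform.tfvars and *.auto.tfvars from this
  # directory; if your variables file is not auto-loaded, add -var-file=<file>.
  terraform init && terraform apply || return 1

  # Install RKE2 on the server and agent nodes (hypothetical file names).
  ansible-playbook -i inventory.ini site.yml
}
```

Source the file and run `deploy_environment` from the directory that holds the Terraform configuration. Loading the key into `ssh-agent` once means you enter the passphrase a single time rather than once per node.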

Container images

Once you have a private container registry and PKI in place, you need to load all the hardened images into that registry. The deployment above already uploaded all the required images. In a real air-gapped environment, however, this step is usually done on a separate device with internet access. From there, the images are saved to a hard drive that is physically transferred into the air-gapped environment.

All the required images can be found here. If you are using Iron Bank images, replace the corresponding Elastic images with them.

Most of the images can be downloaded directly, but a few images that you need in an air-gapped environment must be built first:

- GeoIP

- Elastic Artifact Registry (EAR)

- Elastic Endpoint Artifact Repository (EER)

- Elastic Learned Sparse EncodeR (ELSER)

Each image's directory contains an example script that downloads the required files, along with a Dockerfile. Once you have built these images, it's time to upload them to your private container registry!

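To move the images into your private registry, you can use skopeo or a short bash script with podman. The scripts below read images.txt, which lists one fully qualified image reference per line. Their cut fields assume exactly three path segments (registry/namespace/name:tag), so adjust the fields if your references have more segments.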

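On a system with internet access, pull and save each image:

```bash
# Read one image reference per line (the last line may lack a trailing newline)
while IFS= read -r i || [ -n "$i" ]; do
  [ -z "$i" ] && continue                    # skip blank lines
  version=$(echo "$i" | cut -d'/' -f3)       # e.g. name:tag
  image=$(echo "$version" | cut -d':' -f1)   # e.g. name
  podman pull "$i"
  podman save -o "package-$image.tar" "$i"   # one tarball per image
done < images.txt
```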

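In the air-gapped environment, with images.txt and all the saved tarballs in the current directory, load, retag, and push each image:

```bash
while IFS= read -r i || [ -n "$i" ]; do
  [ -z "$i" ] && continue                    # skip blank lines
  version=$(echo "$i" | cut -d'/' -f3)       # e.g. name:tag
  image=$(echo "$version" | cut -d':' -f1)   # e.g. name
  package=$(echo "$i" | cut -d'/' -f2)       # e.g. namespace
  podman load -i "package-$image.tar"
  # Retag with the private registry host, then push
  podman tag "$i" "rke2-airgap-registry:5000/$package/$version"
  podman push "rke2-airgap-registry:5000/$package/$version"
done < images.txt
```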
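skopeo can copy images without a local container store. Its `docker://` transport addresses registries, and its `dir:` transport addresses an on-disk copy. The following is a minimal sketch of the same two-sided workflow. It assumes the same `images.txt` and registry name as the podman scripts. The `images/` staging directory and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: move images with skopeo instead of podman save/load.
# Assumptions: images.txt lists one fully qualified reference per line, images
# are staged under ./images on the transfer drive (hypothetical layout), and
# the private registry is rke2-airgap-registry:5000.

# Staging directory for a reference: "/" and ":" become "_".
stage_dir() {
  printf 'images/%s\n' "$(printf '%s' "$1" | tr '/:' '__')"
}

# Destination reference: drop the source registry host (the first path
# segment) and prepend the private registry; deeper paths are kept intact.
dest_ref() {
  printf '%s/%s\n' "$2" "${1#*/}"
}

# Internet-connected side: copy every listed image into a local directory.
export_images() {
  local ref
  mkdir -p images
  while IFS= read -r ref || [ -n "$ref" ]; do
    [ -n "$ref" ] || continue
    skopeo copy "docker://$ref" "dir:$(stage_dir "$ref")"
  done < images.txt
}

# Air-gapped side: push every staged image to the private registry.
import_images() {
  local ref registry="${1:-rke2-airgap-registry:5000}"
  while IFS= read -r ref || [ -n "$ref" ]; do
    [ -n "$ref" ] || continue
    skopeo copy "dir:$(stage_dir "$ref")" "docker://$(dest_ref "$ref" "$registry")"
  done < images.txt
}
```

Source the file and run `export_images` on the connected machine. Carry `images.txt` and `images/` across, then run `import_images` inside the air-gapped environment. Unlike the `cut`-based scripts, `dest_ref` keeps every path segment after the registry host, so deeper references survive unchanged. If the registry certificate is signed by your private CA, the host must trust that CA (skopeo also accepts `--dest-cert-dir`).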

Now that we have our RKE2 cluster and images loaded into the private container registry, it is time to deploy ECK.

ECK

Elastic already has many blogs documenting how to deploy ECK. Be sure to check these out if you are not in an air-gapped environment.

- A simplified stack monitoring experience in Elastic Cloud on Kubernetes

- Getting started with Elastic Cloud on Kubernetes: Deployment

- Getting started with Elastic Cloud on Kubernetes: Data ingestion

- ECK in production environment

- Using ECK with Helm

Unlike those deployments, ours has some prerequisites that we must deploy before ECK, because we do not have direct access to the internet.

Elastic Package Registry

The Elastic Package Registry (EPR) is an online service that hosts the Elastic Agent integration packages available in Kibana®. Elastic already provides a container image with all the packages, so you can easily deploy it here.
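As an illustration, the EPR can run as a plain Deployment behind a Service. This sketch prints both manifests under several assumptions:

- The image was pushed as `<registry>/package-registry/distribution:<tag>`, which is the path the podman scripts produce for `docker.elastic.co/package-registry/distribution`.
- The container listens on port 8080.
- The Service name and `repo` namespace match the Kibana `xpack.fleet.registryUrl` used later.

Add a security context that satisfies your namespace policies.

```shell
#!/usr/bin/env bash
# Sketch: render Kubernetes manifests for a self-hosted Elastic Package Registry.
# Assumptions: image at <registry>/package-registry/distribution:<tag>, the
# container listens on 8080, and the Service name/namespace match
# http://elastic-package-registry.repo.svc.cluster.local.

# render_epr REGISTRY TAG: print a Deployment and a Service to stdout.
render_epr() {
  local registry="$1" tag="$2"
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: elastic-package-registry
  namespace: repo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: elastic-package-registry
  template:
    metadata:
      labels:
        app: elastic-package-registry
    spec:
      containers:
        - name: package-registry
          image: ${registry}/package-registry/distribution:${tag}
          ports:
            - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: elastic-package-registry
  namespace: repo
spec:
  selector:
    app: elastic-package-registry
  ports:
    - port: 80
      targetPort: 8080
EOF
}
```

For example, run `render_epr rke2-airgap-registry:5000 <tag> | kubectl apply -f -` with a tag that matches your Stack version. The Service exposes port 80 so that the registry URL works without an explicit port.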

Elastic Artifact Registry

The Elastic Artifact Registry (EAR) hosts the Elastic Agent binaries used for upgrades. To get the full benefit of Elastic Agent, you need to be able to upgrade your agents through Fleet when a new version is released. Hosting your own EAR lets Fleet fetch the binary and upgrade Elastic Agent to the newest version. We already built this container and uploaded it to our registry, so you can deploy it here.
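To build the EAR image, you need the agent archives laid out the way Fleet expects. The following is a sketch, assuming the upstream layout `https://artifacts.elastic.co/downloads/beats/elastic-agent/elastic-agent-<version>-<platform>.<ext>` with `.sha512` and `.asc` companion files. The version and platform list are yours to choose, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: fetch Elastic Agent binaries for a self-hosted EAR image.
# Assumptions: the upstream layout
#   <base>/beats/elastic-agent/elastic-agent-<version>-<platform>.<ext>
# with .sha512 and .asc companions; zip for Windows, tar.gz elsewhere.

EAR_UPSTREAM="https://artifacts.elastic.co/downloads"

# Print the archive, checksum, and signature URLs for one version/platform.
agent_urls() {
  local version="$1" platform="$2" ext="tar.gz" file
  case "$platform" in
    windows-*) ext="zip" ;;
  esac
  file="elastic-agent-${version}-${platform}.${ext}"
  printf '%s\n' "$EAR_UPSTREAM/beats/elastic-agent/$file" \
    "$EAR_UPSTREAM/beats/elastic-agent/$file.sha512" \
    "$EAR_UPSTREAM/beats/elastic-agent/$file.asc"
}

# Mirror the files into ./downloads/beats/elastic-agent, keeping the upstream
# layout so the web root can serve as Fleet's agent binary download source.
mirror_agent() {
  local version="$1" platform url
  shift
  mkdir -p downloads/beats/elastic-agent
  for platform in "$@"; do
    for url in $(agent_urls "$version" "$platform"); do
      curl -fsSL -o "downloads/beats/elastic-agent/${url##*/}" "$url" || return 1
    done
  done
}
```

For example, run `mirror_agent 8.11.1 linux-x86_64 windows-x86_64`. Then point Fleet's agent binary download source at the URL that serves the `downloads/` directory. This mirrors the public default, `https://artifacts.elastic.co/downloads/`.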

Elastic Endpoint Artifact Repository

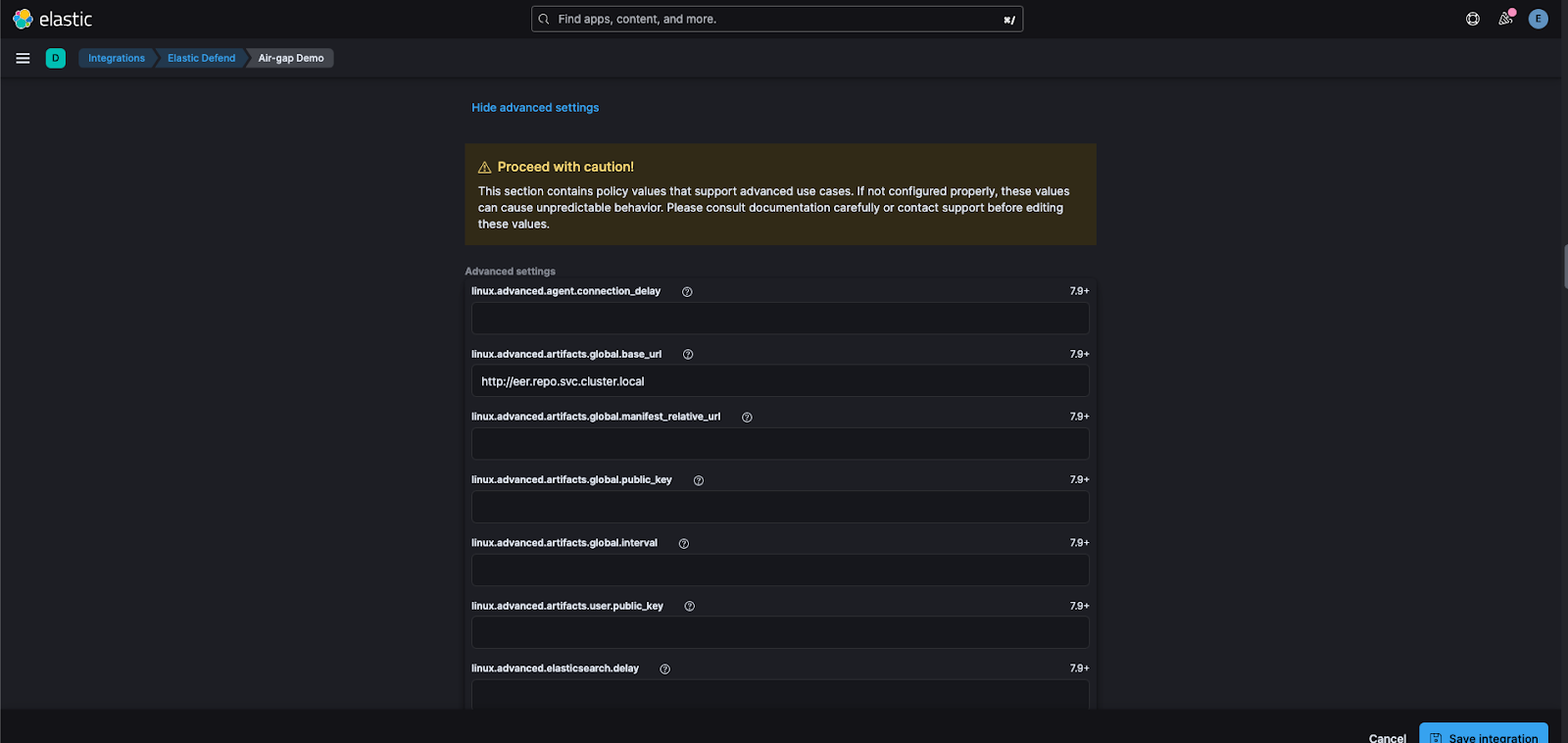

Similar to the EAR, the Elastic Endpoint Artifact Repository (EER) hosts the artifacts that Elastic Defend downloads to protect against the latest threats. If you are not using Elastic Defend, feel free to skip this step; otherwise, deploy it here.
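Populating the EER means downloading a versioned manifest and then every artifact it lists. The following is a sketch that makes several assumptions. The upstream host is `https://artifacts.security.elastic.co`. The manifest zip contains a `manifest.json` whose `artifacts` entries each carry a `relative_url`. Finally, `curl`, `unzip`, and `jq` are installed. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: mirror Elastic Defend artifacts for a self-hosted EER image.
# Assumptions: the upstream host below, a manifest zip containing a
# manifest.json with an "artifacts" map whose entries have a "relative_url",
# and curl, unzip, and jq on PATH.

EER_UPSTREAM="https://artifacts.security.elastic.co"

# URL of the artifact manifest for a given Elastic Defend version.
manifest_url() {
  printf '%s/downloads/endpoint/manifest/artifacts-%s.zip\n' "$EER_UPSTREAM" "$1"
}

# Download the manifest, then every artifact it lists, into ./downloads/...
# so that a static web server can serve the tree from its document root.
mirror_endpoint_artifacts() {
  local version="$1" zip
  zip="downloads/endpoint/manifest/artifacts-${version}.zip"
  mkdir -p "$(dirname "$zip")"
  curl -fsSL -o "$zip" "$(manifest_url "$version")" || return 1
  unzip -p "$zip" \
    | jq -r '.artifacts | to_entries[] | .value.relative_url' \
    | while IFS= read -r path; do
        # relative_url starts with "/", so ".$path" stays under the cwd
        curl -fsSL --create-dirs -o ".${path}" "${EER_UPSTREAM}${path}"
      done
}
```

Serve the resulting `downloads/` tree from the EER container. Then point the artifact base URL in the Elastic Defend policy's advanced settings at that server.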

Elastic Learned Sparse EncodeR

Read our blog on how the government and public sector can benefit from generative AI. To deploy your own trained models, follow this guide for Eland. If you would rather use ELSER, we already uploaded its image to our container registry, and you can deploy it here.
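The `xpack.ml.model_repository` setting used later points Elasticsearch at a URL that serves the model files. Here is a sketch for populating such a repository. It assumes ELSER v2's cross-platform files are published at `https://ml-models.elastic.co/` as `<model>.metadata.json`, `<model>.pt`, and `<model>.vocab.json`. The default model name `elser_model_2` is also an assumption, so confirm it for your Stack version.

```shell
#!/usr/bin/env bash
# Sketch: fetch ELSER model files for a self-hosted model repository.
# Assumptions: the upstream host below, the three-file layout
# (<model>.metadata.json, <model>.pt, <model>.vocab.json), and the default
# model name elser_model_2.

ML_MODELS_UPSTREAM="https://ml-models.elastic.co"

# Print the file names that make up one model.
elser_files() {
  local model="${1:-elser_model_2}" ext
  for ext in metadata.json pt vocab.json; do
    printf '%s.%s\n' "$model" "$ext"
  done
}

# Download the files into ./models for a static web server to serve.
mirror_elser() {
  local model="${1:-elser_model_2}" file
  mkdir -p models
  for file in $(elser_files "$model"); do
    curl -fsSL -o "models/$file" "$ML_MODELS_UPSTREAM/$file" || return 1
  done
}
```

The files sit directly at the repository root, so serve `models/` at the URL configured in `xpack.ml.model_repository`.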

GeoIP

Finally, Elastic uses MaxMind databases to enrich IP addresses with geographic location data. Because an air-gapped cluster cannot download these databases itself, we created our own GeoIP endpoint and already uploaded it to our container registry. You can deploy it here.
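Building the GeoIP mirror starts with the MaxMind databases, which is where your MaxMind license key is used. Here is a sketch assuming MaxMind's permalink download format and the three GeoLite2 editions (ASN, City, Country). The `geoip-src` directory and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: gather the MaxMind GeoLite2 databases for a GeoIP mirror image.
# Assumptions: MaxMind's permalink URL format below, a valid license key,
# and an output directory named geoip-src (hypothetical).

# Download URL for one GeoLite2 edition.
maxmind_url() {
  printf 'https://download.maxmind.com/app/geoip_download?edition_id=%s&license_key=%s&suffix=tar.gz\n' "$1" "$2"
}

# Download the ASN, City, and Country editions and keep only the .mmdb files.
fetch_geoip() {
  local key="$1" edition tmp
  mkdir -p geoip-src
  for edition in GeoLite2-ASN GeoLite2-City GeoLite2-Country; do
    tmp=$(mktemp -d)
    curl -fsSL "$(maxmind_url "$edition" "$key")" | tar -xz -C "$tmp" \
      || { rm -rf "$tmp"; return 1; }
    find "$tmp" -name '*.mmdb' -exec cp {} geoip-src/ \;
    rm -rf "$tmp"
  done
}
```

Elasticsearch ships an `elasticsearch-geoip` tool that converts a directory of `.mmdb` files into the layout a custom `ingest.geoip.downloader.endpoint` expects. Check its flags in the documentation for your version before running it against `geoip-src`.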

Security

Although deploying an RKE2 cluster on a hardened image does help with security, there are some other Kubernetes configurations that should be taken into consideration when deploying ECK. This demo does not go in depth on securing your Kubernetes environment; however, here are some items to consider:

- SELinux

- Pod Security Admission

- Network Policies

- Security Context

  - You will need to update the security context for the operator and for any container or init container that does not comply with your namespaces' policies.

  - Elastic Agent must run as root and be in the same namespace as your Elasticsearch cluster.

    - If this is not an option for your organization, we recommend standing up a Fleet Server outside of ECK.

- FIPS-Compliant Images
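Pod Security Admission is configured through namespace labels. The following is a minimal sketch that prints a Namespace manifest enforcing a chosen Pod Security level. It assumes you want to warn at `restricted` everywhere so that pods failing the strictest level are flagged. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: render a Namespace manifest with Pod Security Admission labels.
# Assumption: one enforced level per namespace, with warnings always raised
# against "restricted" so that non-compliant pods are visible.

# render_namespace NAME LEVEL: print the manifest, or fail on an unknown level.
render_namespace() {
  local name="$1" level="$2"
  case "$level" in
    privileged|baseline|restricted) ;;
    *) printf 'unknown Pod Security level: %s\n' "$level" >&2; return 1 ;;
  esac
  cat <<EOF
apiVersion: v1
kind: Namespace
metadata:
  name: ${name}
  labels:
    pod-security.kubernetes.io/enforce: ${level}
    pod-security.kubernetes.io/warn: restricted
EOF
}
```

For example, run `render_namespace repo restricted | kubectl apply -f -`, provided the pods in that namespace have compliant security contexts. The namespace that hosts Elastic Agent cannot enforce `restricted`, because that level requires `runAsNonRoot`. The `baseline` level allows root but still rejects, for example, hostPath volumes, so choose the level the agent's pod spec actually needs.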

Deploying ECK

Now that all of our prerequisites are deployed, we can start the deployment of ECK.

1. Deploy cert-manager for ingress certificates.2. Deploy longhorn for storage.

3. Deploy the ECK CRDs and operator.

- Ensure you update the operator’s security context to match your environment’s policies and the private container registry address in the operator.yaml.

- We need to bootstrap the operator with the path to our container registry — all other images do not need to be updated since we already added that to our config map.

5. Deploy the namespaces.

6. Deploy the monitoring cluster.

7. Deploy the production cluster.

- Note that we update the Elasticsearch config with the URL to the ELSER and GeoIP service.

config:

xpack.ml.model_repository: http://elser.repo.svc.cluster.local

ingest.geoip.downloader.endpoint: http://geoip-mirror.repo.svc.cluster.local

- We also update the Kibana config to point to the EPR service and maps ingress URL, as well as updating the Fleet packages.

config:

map.emsUrl: "https://maps.air-gap.demo"

xpack.fleet.registryUrl: "http://elastic-package-registry.repo.svc.cluster.local"8. Deploy the Fleet server (wait for the production cluster to be ready).

9. Deploy the maps server.

10. Browser to https://prod.air-gap.demo/

kubectl get secret elasticsearch-es-elastic-user -n prod -o=jsonpath='{.data.elastic}' | base64 --decode; echo

echo "<your_lb_external_ip> longhorn.air-gap.demo monitor.air-gap.demo prod.air-gap.demo maps.air-gap.demo" >> /etc/hosts

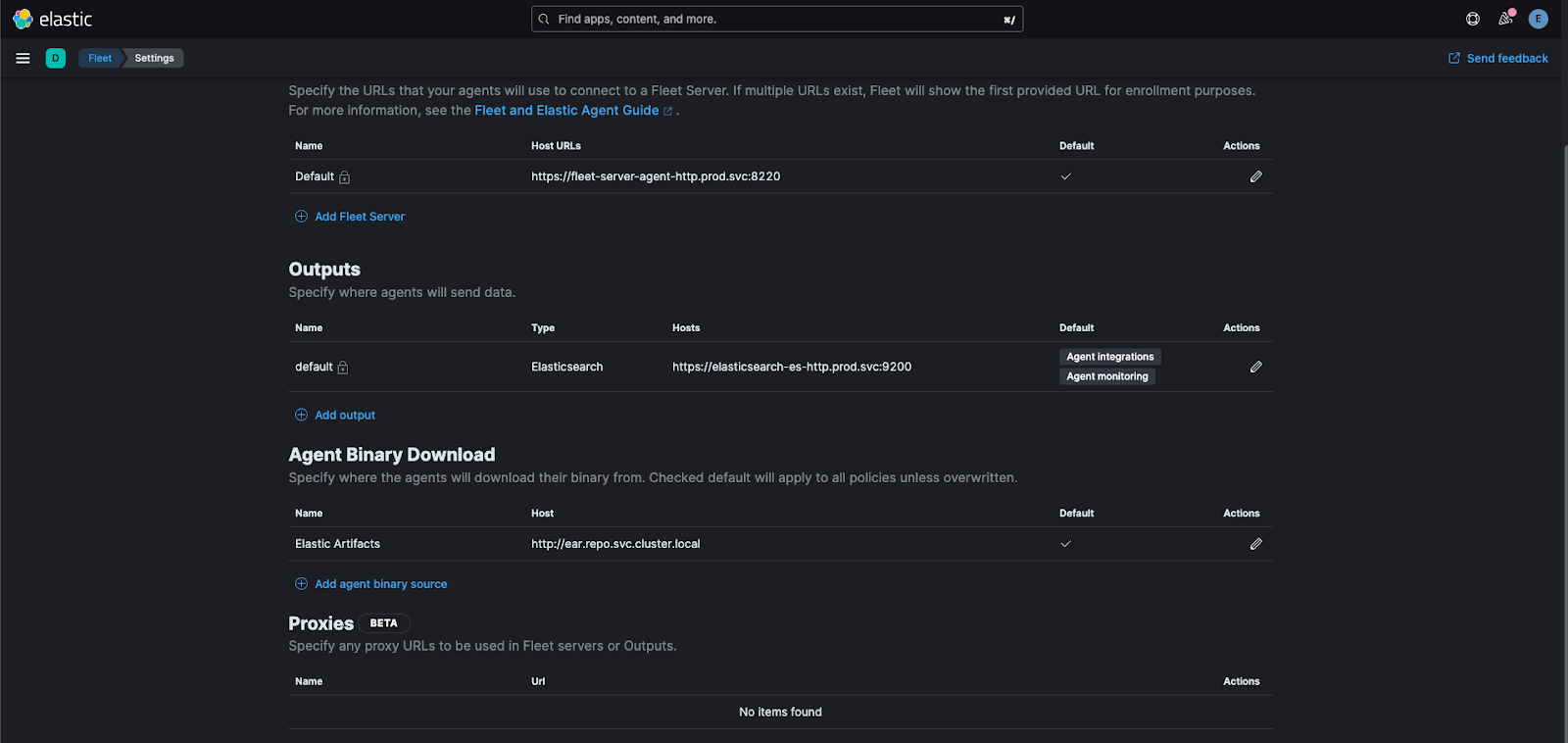

11. Update the path to the Elastic Artifact Registry.

12. Update the path to the Elastic Endpoint Artifact Repository.
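The registry change in step 3 can be scripted. This minimal sketch assumes the downloaded operator.yaml references `docker.elastic.co` in two places: the operator image and the ECK `container-registry` setting inside its ConfigMap. A single substitution therefore covers both. The function name is hypothetical, and the default registry comes from the podman scripts above.

```shell
#!/usr/bin/env bash
# Sketch: point the ECK operator manifest at the private registry.
# Assumption: operator.yaml references docker.elastic.co in both the operator
# image and the container-registry setting of the operator ConfigMap.

# rewrite_registry FILE [NEW_REGISTRY] [OLD_REGISTRY]
# Writes FILE to stdout with every OLD_REGISTRY replaced; FILE is not modified.
rewrite_registry() {
  local file="$1" new="${2:-rke2-airgap-registry:5000}" old="${3:-docker.elastic.co}"
  local old_re
  # Escape dots so sed matches the registry host literally.
  old_re=$(printf '%s' "$old" | sed 's/[.]/\\./g')
  sed -e "s#${old_re}#${new}#g" "$file"
}
```

For example, run `rewrite_registry operator.yaml > operator-airgap.yaml`, then `kubectl create -f crds.yaml` and `kubectl apply -f operator-airgap.yaml`. The operator's security context still has to be edited by hand.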

Observe and secure your environment with ECK

You can deploy ECK in an air-gapped environment with all of its dependencies and stay compliant while achieving your organization's goals for a software factory, observability, and/or security! If you are not using ECK in your air-gapped environment, be sure to check our documentation for self-managed Linux installation in air-gapped environments.

The release and timing of any features or functionality described in this post remain at Elastic's sole discretion. Any features or functionality not currently available may not be delivered on time or at all.