Example: Parse logs in the Common Log Format

In this example tutorial, you’ll use an ingest pipeline to parse server logs in the Common Log Format before indexing. Before starting, check the prerequisites for ingest pipelines.

The logs you want to parse look similar to this:

212.87.37.154 - - [05/May/2099:16:21:15 +0000] "GET /favicon.ico HTTP/1.1" 200 3638 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36"

These logs contain a timestamp, IP address, and user agent. You want to give these three items their own field in Elasticsearch for faster searches and visualizations. You also want to know where the request is coming from.
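To make the three items concrete, the following minimal Python sketch pulls them out of the sample line with a regular expression. The regex and the names `LOG_PATTERN` and `parse_log_line` are illustrative assumptions, not part of Elasticsearch; the pipeline in this tutorial uses a grok pattern rather than this regex.

```python
import re

# Hypothetical regex that approximates the fields of interest.
# It is NOT the grok pattern used by the ingest pipeline.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) (?P<ident>\S+) (?P<user>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) HTTP/(?P<http_version>[\d.]+)" '
    r'(?P<status>\d{3}) (?P<bytes>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)

# The sample log line from this tutorial.
SAMPLE = (
    '212.87.37.154 - - [05/May/2099:16:21:15 +0000] '
    '"GET /favicon.ico HTTP/1.1" 200 3638 "-" '
    '"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_6) AppleWebKit/537.36 '
    '(KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36"'
)


def parse_log_line(line):
    """Return a dict of named fields, or None if the line does not match."""
    match = LOG_PATTERN.match(line)
    return match.groupdict() if match else None


fields = parse_log_line(SAMPLE)
# Print the three items the tutorial turns into their own fields.
print(fields["ip"], fields["timestamp"], fields["user_agent"], sep="\n")
```

Every value comes back as a string here; the grok pattern used later additionally casts the status code and byte count to integers.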

- In Kibana, open the main menu and click Stack Management > Ingest Pipelines.

- Click Create pipeline > New pipeline.

- Set Name to `my-pipeline` and optionally add a description for the pipeline.

- Add a grok processor to parse the log message:

  - Click Add a processor and select the Grok processor type.

  - Set Field to `message` and Patterns to the following grok pattern:

        %{IPORHOST:source.ip} %{USER:user.id} %{USER:user.name} \[%{HTTPDATE:@timestamp}\] "%{WORD:http.request.method} %{DATA:url.original} HTTP/%{NUMBER:http.version}" %{NUMBER:http.response.status_code:int} (?:-|%{NUMBER:http.response.body.bytes:int}) %{QS:http.request.referrer} %{QS:user_agent}

  - Click Add to save the processor.

  - Set the processor description to `Extract fields from 'message'`.

-

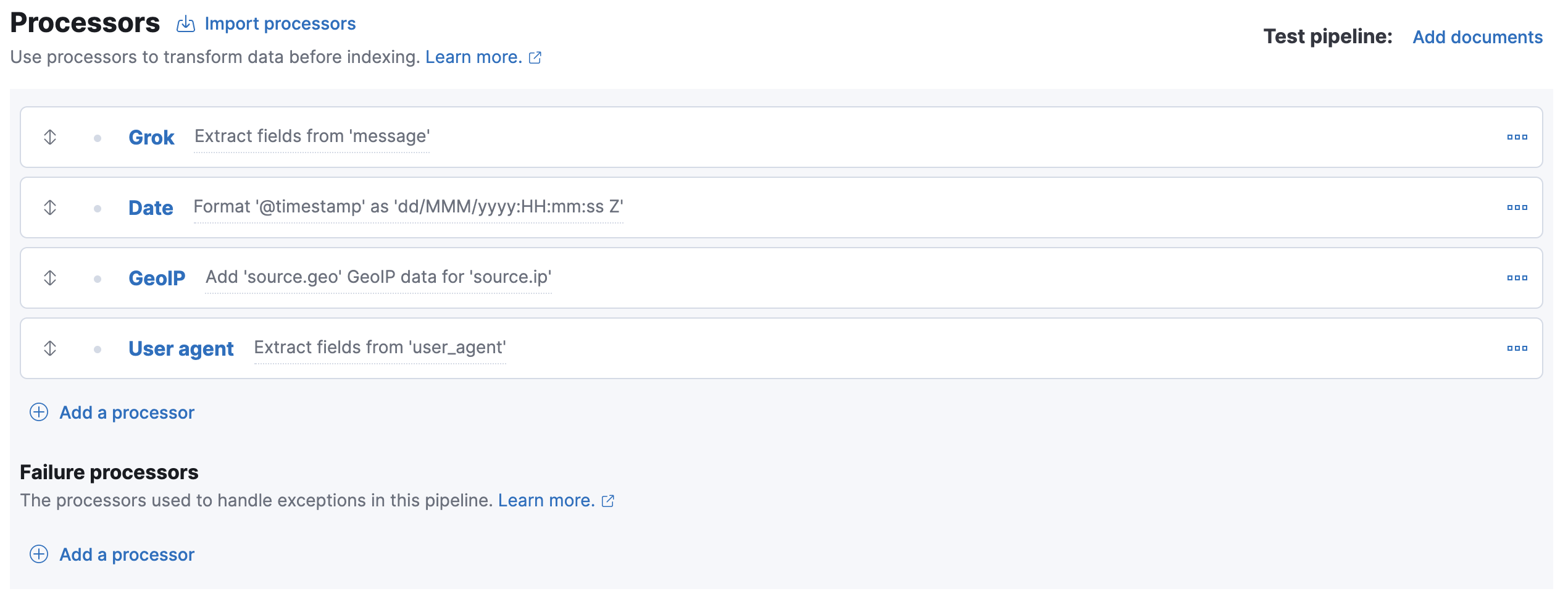

Add processors for the timestamp, IP address, and user agent fields. Configure the processors as follows:

Processor type Field Additional options Description @timestampFormats:

dd/MMM/yyyy:HH:mm:ss ZFormat '@timestamp' as 'dd/MMM/yyyy:HH:mm:ss Z'source.ipTarget field:

source.geoAdd 'source.geo' GeoIP data for 'source.ip'user_agentExtract fields from 'user_agent'Your form should look similar to this:

The four processors will run sequentially:

Grok > Date > GeoIP > User agent

You can reorder processors using the arrow icons.Alternatively, you can click the Import processors link and define the processors as JSON:

      {
        "processors": [
          {
            "grok": {
              "description": "Extract fields from 'message'",
              "field": "message",
              "patterns": ["%{IPORHOST:source.ip} %{USER:user.id} %{USER:user.name} \\[%{HTTPDATE:@timestamp}\\] \"%{WORD:http.request.method} %{DATA:url.original} HTTP/%{NUMBER:http.version}\" %{NUMBER:http.response.status_code:int} (?:-|%{NUMBER:http.response.body.bytes:int}) %{QS:http.request.referrer} %{QS:user_agent}"]
            }
          },
          {
            "date": {
              "description": "Format '@timestamp' as 'dd/MMM/yyyy:HH:mm:ss Z'",
              "field": "@timestamp",
              "formats": [ "dd/MMM/yyyy:HH:mm:ss Z" ]
            }
          },
          {
            "geoip": {
              "description": "Add 'source.geo' GeoIP data for 'source.ip'",
              "field": "source.ip",
              "target_field": "source.geo"
            }
          },
          {
            "user_agent": {
              "description": "Extract fields from 'user_agent'",
              "field": "user_agent"
            }
          }
        ]
      }

- To test the pipeline, click Add documents.

- In the Documents tab, provide a sample document for testing:

      [
        {
          "_source": {
            "message": "212.87.37.154 - - [05/May/2099:16:21:15 +0000] \"GET /favicon.ico HTTP/1.1\" 200 3638 \"-\" \"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36\""
          }
        }
      ]

- Click Run the pipeline and verify the pipeline worked as expected.

- If everything looks correct, close the panel, and then click Create pipeline.

You’re now ready to index the logs data to a data stream.
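Before indexing, the same test can also be run outside Kibana. One option is the simulate pipeline API (`POST _ingest/pipeline/_simulate`), which accepts a pipeline definition together with sample documents. The Python sketch below only builds that request body from the processors configured in this tutorial. The helper name `build_simulate_body` is hypothetical, and sending the request is left to whichever client you use.

```python
import json

# Grok pattern from this tutorial, written as a raw string so the
# backslashes before the square brackets are preserved.
GROK_PATTERN = (
    r'%{IPORHOST:source.ip} %{USER:user.id} %{USER:user.name} '
    r'\[%{HTTPDATE:@timestamp}\] "%{WORD:http.request.method} '
    r'%{DATA:url.original} HTTP/%{NUMBER:http.version}" '
    r'%{NUMBER:http.response.status_code:int} '
    r'(?:-|%{NUMBER:http.response.body.bytes:int}) '
    r'%{QS:http.request.referrer} %{QS:user_agent}'
)

# The sample log line used for testing in this tutorial.
SAMPLE_MESSAGE = (
    '212.87.37.154 - - [05/May/2099:16:21:15 +0000] '
    '"GET /favicon.ico HTTP/1.1" 200 3638 "-" '
    '"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_6) AppleWebKit/537.36 '
    '(KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36"'
)


def build_simulate_body(message):
    """Return a request body for POST _ingest/pipeline/_simulate (hypothetical helper)."""
    return {
        "pipeline": {
            "processors": [
                {"grok": {"field": "message", "patterns": [GROK_PATTERN]}},
                {"date": {"field": "@timestamp",
                          "formats": ["dd/MMM/yyyy:HH:mm:ss Z"]}},
                {"geoip": {"field": "source.ip", "target_field": "source.geo"}},
                {"user_agent": {"field": "user_agent"}},
            ]
        },
        "docs": [{"_source": {"message": message}}],
    }


body = build_simulate_body(SAMPLE_MESSAGE)
# json.dumps takes care of escaping the quotes and backslashes.
print(json.dumps(body, indent=2))
```

Building the body as a Python dictionary and serializing it with `json.dumps` avoids hand-escaping the pattern, which is where the doubled backslashes in the JSON version of the pipeline come from.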

- Create an index template with data stream enabled.

      PUT _index_template/my-data-stream-template
      {
        "index_patterns": [ "my-data-stream*" ],
        "data_stream": { },
        "priority": 500
      }

- Index a document with the pipeline you created.

      POST my-data-stream/_doc?pipeline=my-pipeline
      {
        "message": "89.160.20.128 - - [05/May/2099:16:21:15 +0000] \"GET /favicon.ico HTTP/1.1\" 200 3638 \"-\" \"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36\""
      }

- To verify, search the data stream to retrieve the document. The following search uses `filter_path` to return only the document source.

  Ruby:

      response = client.search(
        index: 'my-data-stream',
        filter_path: 'hits.hits._source'
      )
      puts response

  Console:

      GET my-data-stream/_search?filter_path=hits.hits._source

  The API returns:

      {
        "hits": {
          "hits": [
            {
              "_source": {
                "@timestamp": "2099-05-05T16:21:15.000Z",
                "http": {
                  "request": {
                    "referrer": "\"-\"",
                    "method": "GET"
                  },
                  "response": {
                    "status_code": 200,
                    "body": {
                      "bytes": 3638
                    }
                  },
                  "version": "1.1"
                },
                "source": {
                  "ip": "89.160.20.128",
                  "geo": {
                    "continent_name": "Europe",
                    "country_name": "Sweden",
                    "country_iso_code": "SE",
                    "city_name": "Linköping",
                    "region_iso_code": "SE-E",
                    "region_name": "Östergötland County",
                    "location": {
                      "lon": 15.6167,
                      "lat": 58.4167
                    }
                  }
                },
                "message": "89.160.20.128 - - [05/May/2099:16:21:15 +0000] \"GET /favicon.ico HTTP/1.1\" 200 3638 \"-\" \"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36\"",
                "url": {
                  "original": "/favicon.ico"
                },
                "user": {
                  "name": "-",
                  "id": "-"
                },
                "user_agent": {
                  "original": "\"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36\"",
                  "os": {
                    "name": "Mac OS X",
                    "version": "10.11.6",
                    "full": "Mac OS X 10.11.6"
                  },
                  "name": "Chrome",
                  "device": {
                    "name": "Mac"
                  },
                  "version": "52.0.2743.116"
                }
              }
            }
          ]
        }
      }
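The `@timestamp` value in the response shows the effect of the date processor: the log's `05/May/2099:16:21:15 +0000` is stored as `2099-05-05T16:21:15.000Z`. As a rough illustration of that conversion (not the processor's actual implementation), the following Python sketch does the equivalent with the standard library. `httpdate_to_iso` is a hypothetical helper, and the `%b` month parsing assumes an English locale.

```python
from datetime import datetime, timezone


def httpdate_to_iso(value):
    """Convert a 'dd/MMM/yyyy:HH:mm:ss Z' string to ISO 8601 in UTC.

    Hypothetical helper for illustration only; Elasticsearch does this
    inside the date processor.
    """
    # %b parses abbreviated month names such as "May" (locale dependent),
    # and %z parses numeric offsets such as "+0000".
    parsed = datetime.strptime(value, "%d/%b/%Y:%H:%M:%S %z")
    utc = parsed.astimezone(timezone.utc)
    # Append milliseconds and the "Z" suffix to match the stored format.
    return utc.strftime("%Y-%m-%dT%H:%M:%S.") + f"{utc.microsecond // 1000:03d}Z"


print(httpdate_to_iso("05/May/2099:16:21:15 +0000"))
```

An offset other than `+0000` is normalized to UTC in this sketch, so `05/May/2099:18:21:15 +0200` yields the same instant.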