Data Exfiltration Detection

| Field | Value |
|---|---|
| Version | 2.4.1 |
| Subscription level | Platinum |
| Developed by | Elastic |
| Minimum Kibana version(s) | 9.0.0, 8.10.1 |

The Data Exfiltration Detection (DED) package contains assets for detecting data exfiltration in network and file data. The package currently supports only unidirectional flows and does not yet accommodate bidirectional flows. It requires a Platinum subscription, so ensure that a Trial or Platinum level subscription is installed on your cluster before proceeding. This package is licensed under Elastic License 2.0.

This package leverages event logs. Before using this integration, you must have Elastic Endpoint via Elastic Defend, or equivalent tools/endpoints, set up. If you use Elastic Defend, it should be installed through Elastic Agent and collecting data from hosts. See Configure endpoint protection with Elastic Defend for more information. The transform supports only Linux and Windows. The Anomaly Detection Jobs section outlines platform support for each job.

In versions 2.1.1 and later, this package ignores data in cold and frozen data tiers to reduce heap memory usage, avoid running on outdated data, and follow best practices.
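As a rough illustration of what ignoring the cold and frozen tiers can look like in a query, the sketch below wraps an arbitrary query in a bool clause that excludes documents by the Elasticsearch `_tier` metadata field. The function name `exclude_cold_and_frozen` is hypothetical, and the package's own filter is not reproduced here, so treat this as an assumption about the general technique rather than the package's actual query.

```python
from typing import Any, Dict


def exclude_cold_and_frozen(query: Dict[str, Any]) -> Dict[str, Any]:
    """Wrap a query so documents stored on cold or frozen tiers are skipped.

    Hypothetical helper: the package's real filter may be written differently.
    """
    return {
        "bool": {
            # Keep the caller's query as a non-scoring filter.
            "filter": [query],
            # Exclude documents whose index lives on a cold or frozen tier.
            "must_not": [
                {"terms": {"_tier": ["data_frozen", "data_cold"]}}
            ],
        }
    }


wrapped = exclude_cold_and_frozen({"match_all": {}})
```

The wrapped query can be used anywhere a query body is accepted, for example in a datafeed or transform source query.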

For more detailed information refer to the following blog:

Upgrading: If upgrading from a version below v2.0.0, see the section v2.0.0 and beyond.

Add the Integration Package: Install the package via Management > Integrations > Add Data Exfiltration Detection. Configure the integration name and agent policy. Click Save and Continue. (Note that this integration does not rely on an agent, and can be assigned to a policy without an agent.)

Install assets: Install the assets by clicking Settings > Install Data Exfiltration Detection assets.

Check the health of the transform: The transform is scheduled to run every 30 minutes. This transform creates the index ml_network_ded-<VERSION>. To check the health of the transform go to Management > Stack Management > Data > Transforms under logs-ded.pivot_transform-default-<FLEET-TRANSFORM-VERSION>. Follow the instructions under the header Customize Data Exfiltration Detection Transform below to adjust filters based on your environment's needs.

Create data views for anomaly detection jobs: This package contains anomaly detection jobs that work on network events (e.g. logs-endpoint.events.network-*) and file events (logs-endpoint.events.file-*) respectively. See the Anomaly Detection Jobs section below for more details. Tip: If you only have one of the above data sources (network or file), you can follow only the steps pertaining to that index. A separate designated index (ml_network_ded.all) collects network logs from a transform. Before enabling the anomaly detection jobs, create a data view with both index patterns.

- Go to Stack Management > Kibana > Data Views and click Create data view.

- Enter the name of your respective index patterns in the Index pattern box, i.e., logs-endpoint.events.file-*, logs-endpoint.events.network-* (depending on which one(s) you have), and ml_network_ded.all, and copy the same in the Name field.

- Select @timestamp under the Timestamp field and click on Save data view to Kibana.

- Use the new data view (logs-endpoint.events.network-*,logs-endpoint.events.file-*,ml_network_ded.all) to create anomaly detection jobs for this package.
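The data view steps above are described for the Kibana UI. For readers who script their setup, the following sketch builds a request body shaped for the Kibana data views API (`POST /api/data_views/data_view`). Using that API here is an assumption, since this page only documents the UI path, and `build_data_view_payload` is a hypothetical helper name. The sketch only builds the payload and does not send it.

```python
import json
from typing import Dict, List


def build_data_view_payload(index_patterns: List[str],
                            time_field: str = "@timestamp") -> Dict[str, dict]:
    """Build a data view body whose name mirrors its index pattern."""
    # Kibana accepts several patterns as one comma-separated title.
    title = ",".join(index_patterns)
    return {
        "data_view": {
            "title": title,
            "name": title,  # the steps above copy the pattern into Name
            "timeFieldName": time_field,
        }
    }


payload = build_data_view_payload([
    "logs-endpoint.events.network-*",
    "logs-endpoint.events.file-*",
    "ml_network_ded.all",
])
print(json.dumps(payload, indent=2))
```

If you collect only network or only file events, pass just the patterns you have.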

Add preconfigured anomaly detection jobs: In Stack Management -> Anomaly Detection Jobs, you will see Select data view or saved search. Select the data view created in the previous step. Then under Use preconfigured jobs you will see Data Exfiltration Detection. If you do not see this card, events must be ingested from a source that matches the query specified in the ded-ml file, such as Elastic Defend. When you select the card, you will see pre-configured anomaly detection jobs that you can create depending on what makes the most sense for your environment. If you are using Elastic Defend to collect events, file events are in logs-endpoint.events.file-* and network events in logs-endpoint.events.network-*. If you are only collecting file or network events, select only the relevant jobs at this step.

Data view configuration for Dashboards: For the dashboard to work as expected, the following settings need to be configured in Kibana.

- You have started the above anomaly detection jobs.

- You have read access to the .ml-anomalies-shared index or are assigned the machine_learning_user role. For more information on roles, please refer to Built-in roles in Elastic. Please be aware that a user who has access to the underlying machine learning results indices can see the results of all jobs in all spaces. Be mindful of granting permissions if you use Kibana spaces to control which users can see which machine learning results. For more information on machine learning privileges, refer to setup-privileges.

- After enabling the jobs, go to Management > Stack Management > Kibana > Data Views. Click on Create data view with the following settings:

  - Name: .ml-anomalies-shared

  - Index pattern: .ml-anomalies-shared

  - Select Show Advanced settings and enable Allow hidden and system indices

  - Custom data view ID: .ml-anomalies-shared

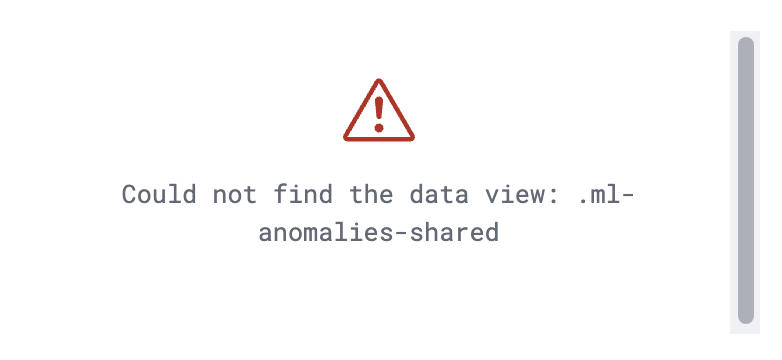

Warning: When creating the data views for the dashboards, ensure that the Custom data view ID is set to the value specified above and is not left empty. Omitting or misconfiguring this field may result in broken visualizations, as illustrated by the error message below.

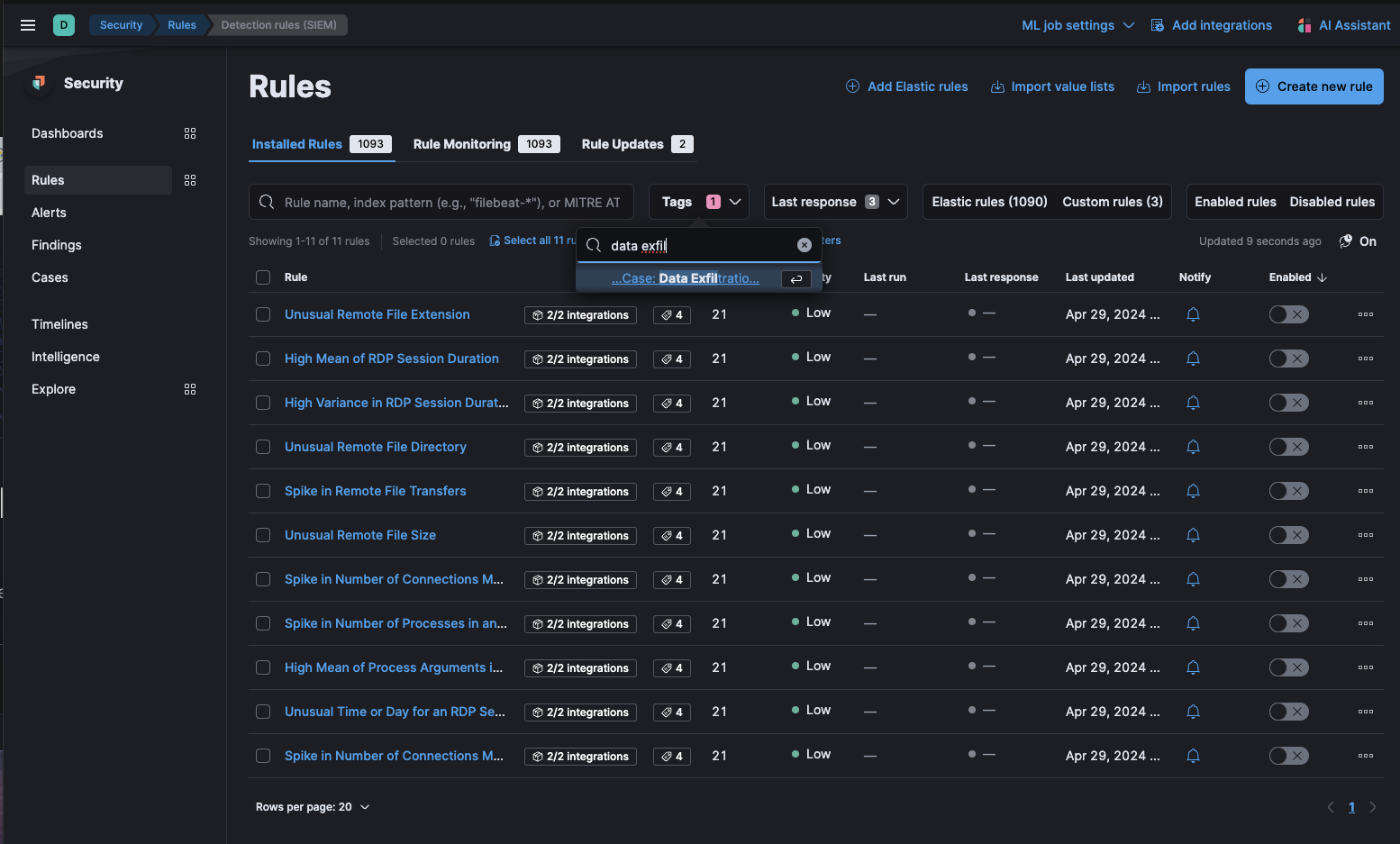
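One way to guard against the misconfiguration described in the warning is to check a data view definition before relying on the dashboards. The sketch below is a hypothetical validator: the dictionary keys `id` and `title` follow the shape of Kibana data view objects, which is an assumption on my part, and `find_data_view_problems` is not part of the package.

```python
from typing import Any, Dict, List

# Value this page gives for Name, Index pattern, and Custom data view ID.
EXPECTED = ".ml-anomalies-shared"


def find_data_view_problems(data_view: Dict[str, Any]) -> List[str]:
    """Return human-readable problems; an empty list means the view looks right."""
    problems = []
    if not data_view.get("id"):
        problems.append("Custom data view ID is empty")
    elif data_view["id"] != EXPECTED:
        problems.append(f"Custom data view ID is {data_view['id']!r}, expected {EXPECTED!r}")
    if data_view.get("title") != EXPECTED:
        problems.append(f"Index pattern should be {EXPECTED!r}")
    return problems


good = {"id": EXPECTED, "title": EXPECTED, "name": EXPECTED}
missing_id = {"title": EXPECTED, "name": EXPECTED}
```

Calling `find_data_view_problems(missing_id)` reports the empty ID, which is the case the warning above singles out.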

Enable detection rules: You can also enable detection rules to alert on Data Exfiltration activity in your environment, based on anomalies flagged by the above ML jobs. As of version 2.0.0 of this package, these rules are available as part of the Detection Engine, and can be found using the tag Use Case: Data Exfiltration Detection. See this documentation for more information on importing and enabling the rules.

In Security > Rules, filtering with the “Use Case: Data Exfiltration Detection” tag


To inspect the installed assets, you can navigate to Stack Management > Data > Transforms.

| Transform name | Purpose | Source index | Destination index | Alias |
|---|---|---|---|---|
| ded.pivot_transform | Collects network logs from your environment | logs-* | ml_network_ded-[version] | ml_network_ded.all |

The transform applies only to network data and does not currently support macOS network logs.
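Transform health can also be read from the transform stats API (`GET _transform/<transform_id>/_stats`). The sketch below only interprets a response that has already been fetched. It assumes each entry carries a `state` field and, on stack versions that report it, a `health.status` field. The sample response is hand-written for illustration and is not real output, and `summarize_transform_stats` is a hypothetical helper.

```python
from typing import Any, Dict, List


def summarize_transform_stats(stats_response: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Condense a transform stats response into id, state, and health."""
    summaries = []
    for transform in stats_response.get("transforms", []):
        state = transform.get("state", "unknown")
        # Older stack versions may not report health at all.
        health = transform.get("health", {}).get("status", "unknown")
        summaries.append({
            "id": transform.get("id"),
            "state": state,
            "health": health,
            # Flag only the clearly bad cases for follow-up.
            "needs_attention": state == "failed" or health == "red",
        })
    return summaries


# Hand-written sample; the transform ID keeps the placeholder used on this page.
sample = {
    "count": 1,
    "transforms": [{
        "id": "logs-ded.pivot_transform-default-<FLEET-TRANSFORM-VERSION>",
        "state": "started",
        "health": {"status": "green"},
    }],
}
summary = summarize_transform_stats(sample)
```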

When querying the destination index (ml_network_ded-<VERSION>) for network logs, we advise using the alias for the destination index (ml_network_ded.all). In the event that the underlying package is upgraded, the alias will aid in maintaining the previous findings.
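To make the alias advice concrete, here is a minimal sketch that builds a search request against ml_network_ded.all instead of a versioned index. It assumes the destination documents carry an `@timestamp` field (the data view steps select that field, but the transform's mappings are not shown here). `build_alias_search` is a hypothetical helper that returns the path and body without sending anything.

```python
from typing import Any, Dict

ALIAS = "ml_network_ded.all"


def build_alias_search(hours: int = 24, size: int = 10) -> Dict[str, Any]:
    """Build a search for recent documents through the alias."""
    if hours <= 0 or size < 0:
        raise ValueError("hours must be positive and size non-negative")
    return {
        # Targeting the alias keeps the query valid across package upgrades.
        "path": f"/{ALIAS}/_search",
        "body": {
            "size": size,
            "query": {"range": {"@timestamp": {"gte": f"now-{hours}h"}}},
        },
    }


request = build_alias_search(hours=6, size=5)
```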

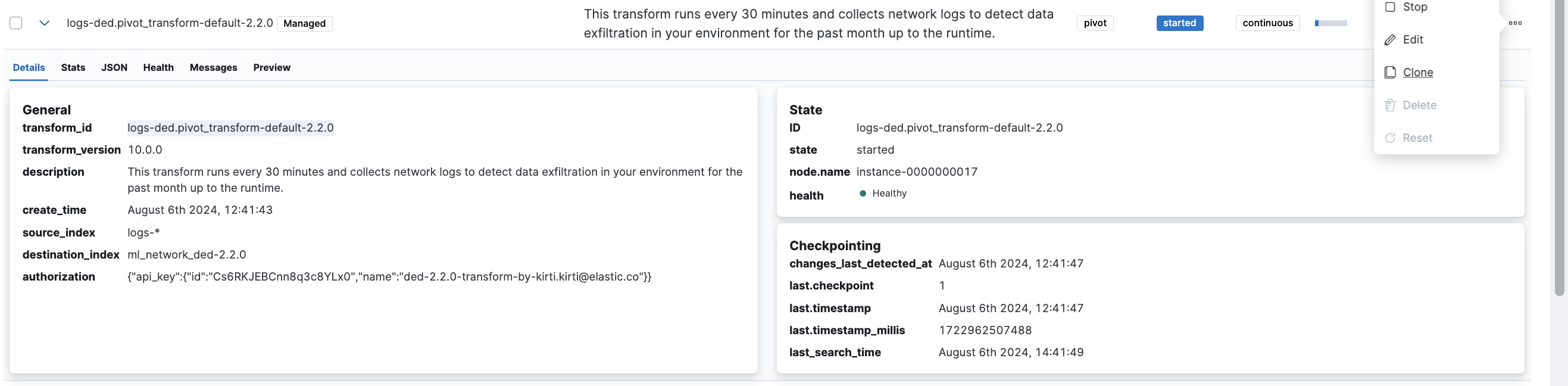

To customize filters in the Data Exfiltration Detection transform, follow the steps below. You can use these instructions to update basic settings or to update filters for fields such as process.name, source.ip, destination.ip, and others.

- To update settings such as retention policy, frequency, or destination configuration, stop the transform, click Edit from the Actions bar, make the required changes, and start the transform again.

- To update the query filters, go to Stack Management > Data > Transforms >

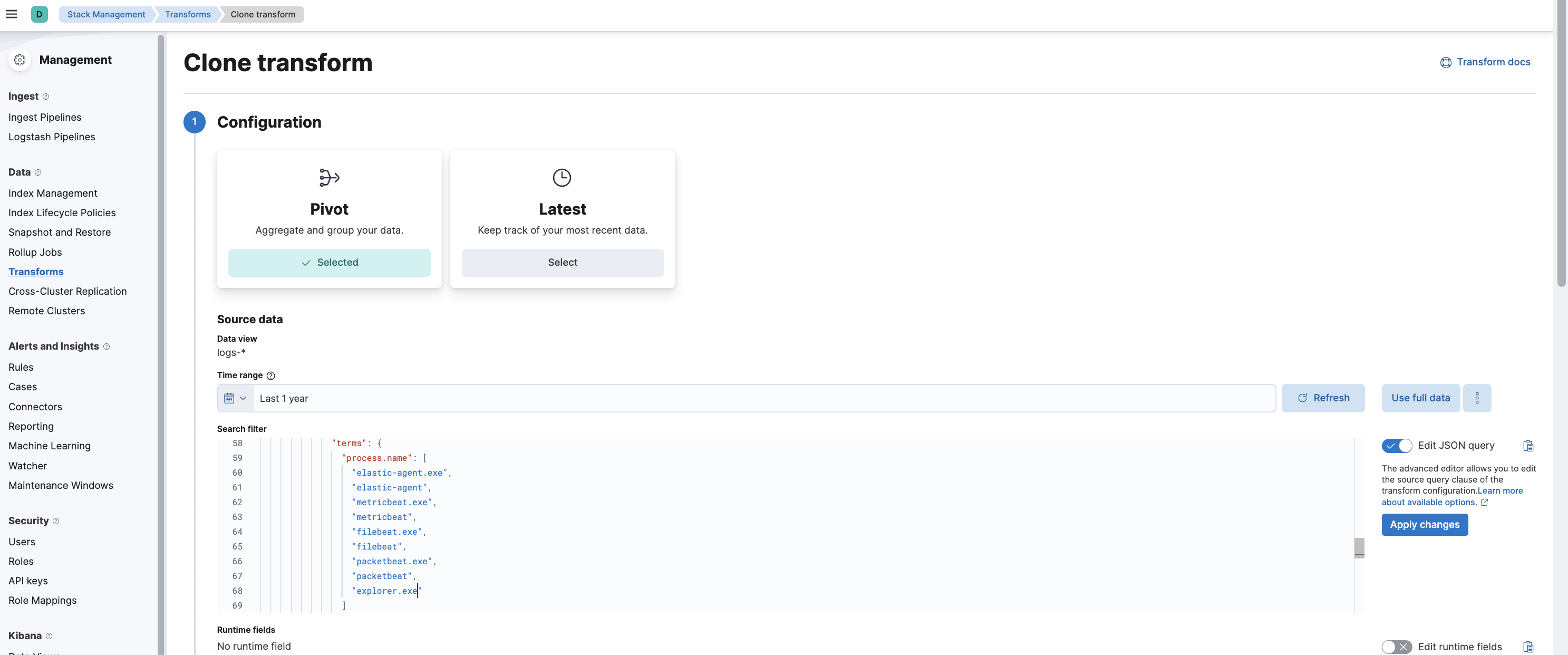

logs-ded.pivot_transform-default-<FLEET-TRANSFORM-VERSION>. - Click on the Actions bar at the far right of the transform and select the Clone option.

- In the new Clone transform window, go to the Search filter and update any field values you want to add or remove. Click on the Apply changes button on the right side to save these changes. Note: The image below shows an example of filtering a new

process.nameasexplorer.exe. You can follow a similar example and update the field value list based on your environment to help reduce noise and potential false positives.

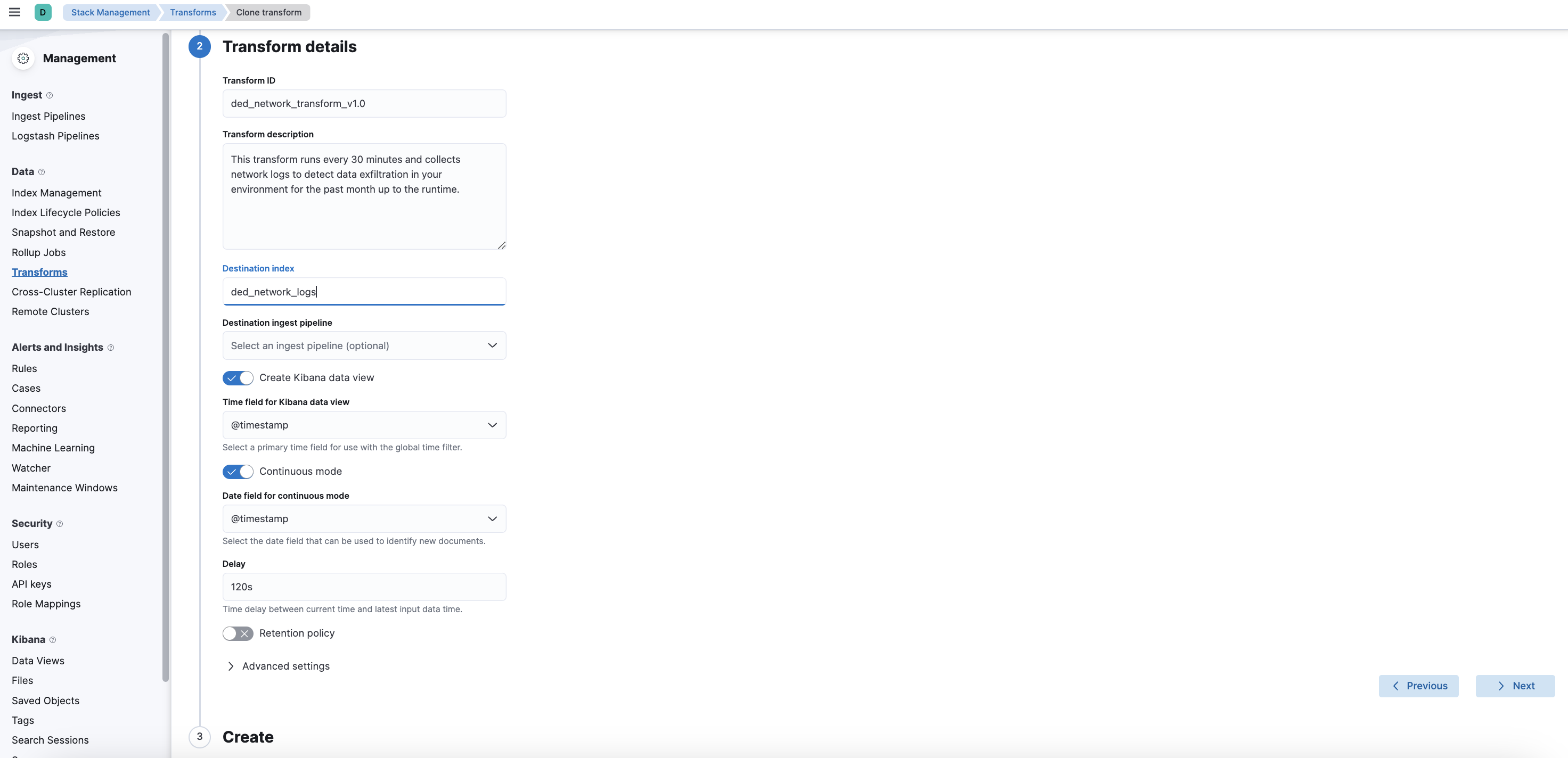

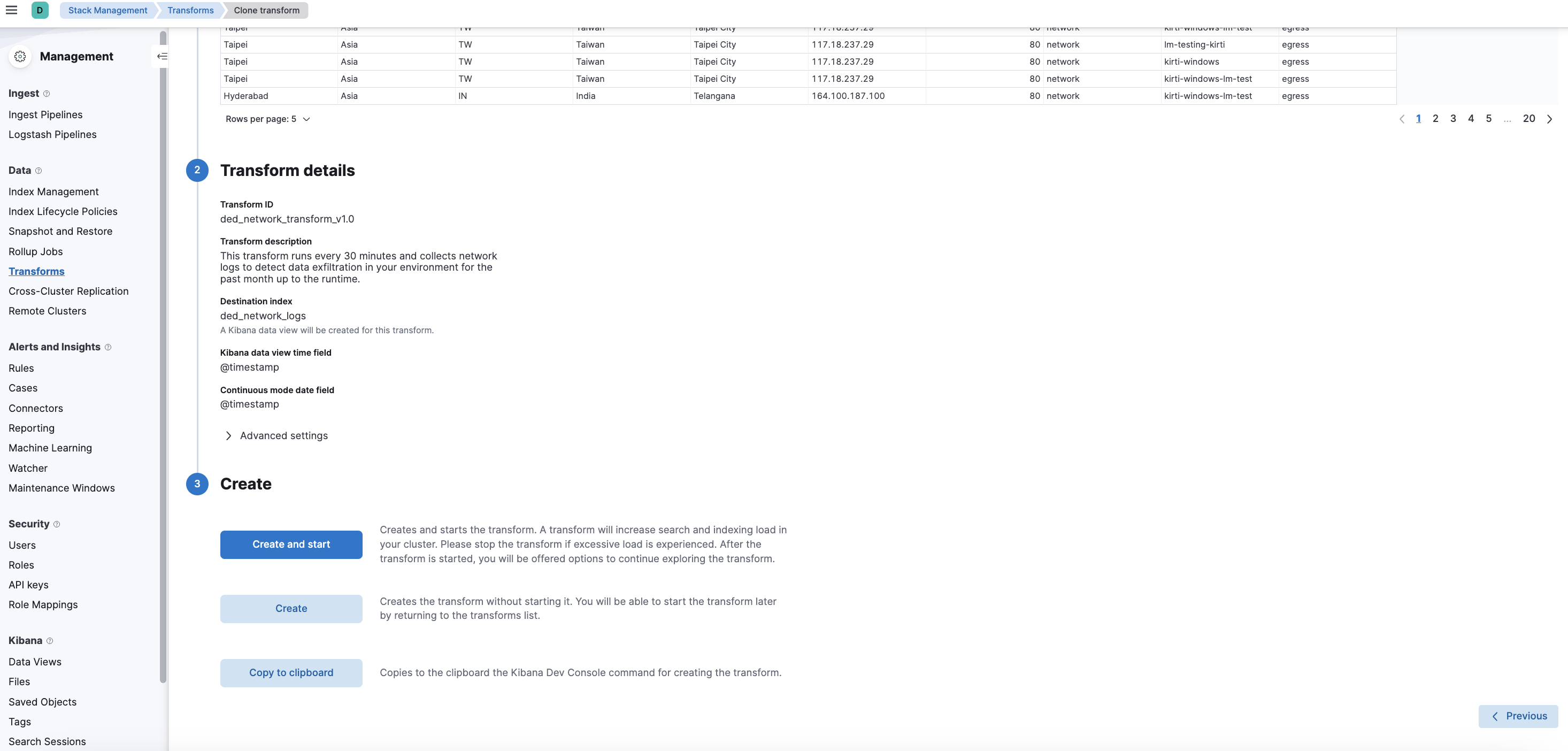

- Scroll down and select the Next button at the bottom right. Under the Transform details section, enter a new Transform ID and Destination index of your choice, then click on the Next button.

- Lastly, select the Create and Start option. Your updated transform will now start collecting data. Note: Do not forget to update your data view based on the new Destination index you have just created.
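The filter change in the cloned transform can be pictured as adding an exclusion to the transform's search query. The sketch below assumes the goal is to exclude noisy process names (the explorer.exe example above does not say whether the value is included or excluded) and that the filter is expressed as an Elasticsearch bool query. The base query shown is illustrative and is not the package's real filter, and `add_process_name_exclusions` is a hypothetical helper.

```python
import copy
from typing import Any, Dict, Iterable


def add_process_name_exclusions(query: Dict[str, Any],
                                names: Iterable[str]) -> Dict[str, Any]:
    """Return a copy of `query` that also excludes the given process names."""
    if set(query) == {"bool"}:
        updated = copy.deepcopy(query)
    else:
        # Wrap non-bool queries so must_not has somewhere to live.
        updated = {"bool": {"filter": [copy.deepcopy(query)]}}
    must_not = updated["bool"].get("must_not", [])
    if isinstance(must_not, dict):
        # A single must_not clause may be written as an object, not a list.
        must_not = [must_not]
    exclusion = {"terms": {"process.name": sorted(set(names))}}
    updated["bool"]["must_not"] = list(must_not) + [exclusion]
    return updated


# Illustrative base filter only.
base = {"bool": {"filter": [{"exists": {"field": "source.bytes"}}]}}
filtered = add_process_name_exclusions(base, ["explorer.exe"])
```

The input query is left unchanged, so the original filter stays available if the exclusion list turns out to be too broad.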

After the data view for the dashboard is configured, the Data Exfiltration Detection Dashboard is available under Analytics > Dashboard. This dashboard gives an overview of anomalies triggered for the data exfiltration detection package.

| Job | Description | Supported Platform | Event Category |
|---|---|---|---|
| ded_high_sent_bytes_destination_geo_country_iso_code | Detects data exfiltration to an unusual geo-location (by country iso code). | Linux, Windows | network |
| ded_high_sent_bytes_destination_ip | Detects data exfiltration to an unusual geo-location (by IP address). | Linux, Windows | network |
| ded_high_sent_bytes_destination_port | Detects data exfiltration to an unusual destination port. | Linux, Windows | network |
| ded_high_sent_bytes_destination_region_name | Detects data exfiltration to an unusual geo-location (by region name). | Linux, Windows | network |
| ded_high_bytes_written_to_external_device | Detects data exfiltration activity by identifying high bytes written to an external device. | Windows | file |
| ded_rare_process_writing_to_external_device | Detects data exfiltration activity by identifying a writing event started by a rare process to an external device. | Windows | file |
| ded_high_bytes_written_to_external_device_airdrop | Detects data exfiltration activity by identifying high bytes written to an external device via Airdrop. | macOS | file |
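Since only some jobs apply to a given platform and event category, the table above can be encoded as data and filtered. The sketch below is a small illustration of that idea. The job IDs, platforms, and categories come from the table, while the `DedJob` type and `select_jobs` function are hypothetical names introduced here.

```python
from typing import FrozenSet, Iterable, List, NamedTuple


class DedJob(NamedTuple):
    job_id: str
    platforms: FrozenSet[str]
    event_category: str


NETWORK = frozenset({"linux", "windows"})

# Rows copied from the job table on this page.
JOBS = [
    DedJob("ded_high_sent_bytes_destination_geo_country_iso_code", NETWORK, "network"),
    DedJob("ded_high_sent_bytes_destination_ip", NETWORK, "network"),
    DedJob("ded_high_sent_bytes_destination_port", NETWORK, "network"),
    DedJob("ded_high_sent_bytes_destination_region_name", NETWORK, "network"),
    DedJob("ded_high_bytes_written_to_external_device", frozenset({"windows"}), "file"),
    DedJob("ded_rare_process_writing_to_external_device", frozenset({"windows"}), "file"),
    DedJob("ded_high_bytes_written_to_external_device_airdrop", frozenset({"macos"}), "file"),
]


def select_jobs(platforms: Iterable[str], categories: Iterable[str]) -> List[str]:
    """Return job IDs that match any given platform and any given category."""
    wanted_platforms = {p.lower() for p in platforms}
    wanted_categories = {c.lower() for c in categories}
    return [
        job.job_id
        for job in JOBS
        if job.platforms & wanted_platforms and job.event_category in wanted_categories
    ]


windows_file_jobs = select_jobs(["Windows"], ["file"])
```

For example, an environment with only Linux hosts and network events would get the four network jobs and none of the file jobs.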

To customize the datafeed query and other settings such as model memory limit, frequency, query delay, bucket span and influencers for the Data Exfiltration Detection ML jobs, follow the steps below.

- To update the datafeed query, stop the datafeed and select Edit job from the Actions menu.

- In the Edit job window, navigate to the Datafeed section and update the query filters. You can add or remove field values to help reduce noise and false positives based on your environment.

- You may also update the model memory limit if your environment has high data volume or if the job requires additional resources. Go to the Job details section, update the Model memory limit, and click Save. For more information on resizing ML jobs, refer to the documentation.

- To make more advanced changes to your job, clone the job by selecting Clone job from the Actions menu.

- In the cloned job, you can update datafeed settings such as Frequency and Query delay, which help control how often data is analyzed and account for ingestion delays.

- You can also modify the job configuration by adjusting the Bucket span and by adding or removing Influencers to improve anomaly attribution.

- Finally, assign a new Job ID, click Create job, and start the datafeed to apply the updated settings.
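The two in-place edits described above (datafeed query and model memory limit) map onto the Elasticsearch update datafeed and update job APIs. The sketch below only plans the requests as (method, path, body) tuples and sends nothing. The `datafeed-` prefix on the datafeed ID, the example query, and the `256mb` value are all illustrative assumptions, and `plan_job_edits` is a hypothetical helper.

```python
from typing import Any, Dict, List, Optional, Tuple

Request = Tuple[str, str, Dict[str, Any]]


def plan_job_edits(job_id: str,
                   datafeed_id: str,
                   query: Optional[Dict[str, Any]] = None,
                   model_memory_limit: Optional[str] = None) -> List[Request]:
    """List the update requests needed for the requested edits."""
    requests: List[Request] = []
    if query is not None:
        # The steps above say to stop the datafeed before editing its query.
        requests.append(("POST", f"/_ml/datafeeds/{datafeed_id}/_update", {"query": query}))
    if model_memory_limit is not None:
        requests.append((
            "POST",
            f"/_ml/anomaly_detectors/{job_id}/_update",
            {"analysis_limits": {"model_memory_limit": model_memory_limit}},
        ))
    return requests


job = "ded_high_sent_bytes_destination_port"
plan = plan_job_edits(
    job_id=job,
    datafeed_id=f"datafeed-{job}",  # assumed naming, check your datafeed ID
    query={"bool": {"must_not": [{"term": {"process.name": "explorer.exe"}}]}},
    model_memory_limit="256mb",  # illustrative value
)
```

Changes to bucket span or influencers are not covered here because, as described above, they are made on a cloned job.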

v2.0.0 of the package introduces breaking changes, namely the removal of detection rules from the package. To continue receiving updates to Data Exfiltration Detection, we recommend upgrading to v2.0.0 after doing the following:

- Delete existing ML jobs: Navigate to Stack Management -> Anomaly Detection Jobs and delete jobs corresponding to the following IDs:

  - high-sent-bytes-destination-geo-country_iso_code

  - high-sent-bytes-destination-ip

  - high-sent-bytes-destination-port

  - high-sent-bytes-destination-region_name

  - high-bytes-written-to-external-device

  - rare-process-writing-to-external-device

  - high-bytes-written-to-external-device-airdrop

  Depending on the version of the package you're using, you might also be able to search for the above jobs using the group data_exfiltration.

- Uninstall existing rules associated with this package: Navigate to Security > Rules and delete the following rules:

  - Potential Data Exfiltration Activity to an Unusual ISO Code

  - Potential Data Exfiltration Activity to an Unusual Region

  - Potential Data Exfiltration Activity to an Unusual IP Address

  - Potential Data Exfiltration Activity to an Unusual Destination Port

  - Spike in Bytes Sent to an External Device

  - Spike in Bytes Sent to an External Device via Airdrop

  - Unusual Process Writing Data to an External Device

  Depending on the version of the package you're using, you might also be able to search for the above rules using the tag Data Exfiltration.

- Upgrade the Data Exfiltration Detection package to v2.0.0 using the steps here

- Install the new rules as described in the Enable detection rules section.
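The job cleanup in the first upgrade step can also be scripted with the Elasticsearch delete anomaly detection job API (`DELETE _ml/anomaly_detectors/<job_id>`). The sketch below only generates the request lines for the legacy job IDs listed above and does not call the cluster. `legacy_job_delete_requests` is a hypothetical helper, and whether a job must be closed first depends on its state.

```python
from typing import Iterable, List, Tuple

# Legacy (pre-2.0.0) job IDs copied from the list above.
LEGACY_JOB_IDS = [
    "high-sent-bytes-destination-geo-country_iso_code",
    "high-sent-bytes-destination-ip",
    "high-sent-bytes-destination-port",
    "high-sent-bytes-destination-region_name",
    "high-bytes-written-to-external-device",
    "rare-process-writing-to-external-device",
    "high-bytes-written-to-external-device-airdrop",
]


def legacy_job_delete_requests(job_ids: Iterable[str] = LEGACY_JOB_IDS) -> List[Tuple[str, str]]:
    """Return (method, path) pairs for deleting each legacy job."""
    return [("DELETE", f"/_ml/anomaly_detectors/{job_id}") for job_id in job_ids]


delete_requests = legacy_job_delete_requests()
for method, path in delete_requests:
    print(method, path)
```

Review the printed list against the jobs that actually exist in your cluster before running any of the requests.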

Usage in production requires that you have a license key that permits use of machine learning features.

This integration includes one or more Kibana dashboards that visualize the data collected by the integration. The screenshots below illustrate how the ingested data is displayed.

Changelog

| Version | Details | Minimum Kibana version |
|---|---|---|
| 2.4.1 | Enhancement (View pull request) Update package docs with customization steps for ML jobs and transforms | 9.0.0, 8.10.1 |
| 2.4.0 | Bug fix (View pull request) Add a transform filter to exclude cold and frozen tier data | 9.0.0, 8.10.1 |
| 2.3.5 | Enhancement (View pull request) Update documentation on configuring data view for dashboards | 9.0.0, 8.10.1 |
| 2.3.4 | Enhancement (View pull request) Update documentation on network and file data sources | 9.0.0, 8.10.1 |
| 2.3.3 | Enhancement (View pull request) Update platform support docs | 9.0.0, 8.10.1 |
| 2.3.2 | Enhancement (View pull request) Clarify supported OS for Data Exfiltration Detection jobs | 9.0.0, 8.10.1 |
| 2.3.1 | Bug fix (View pull request) Change to transform mappings to ECS. Ensured that source.bytes is cast to a long. | 9.0.0, 8.10.1 |
| 2.3.0 | Enhancement (View pull request) Add support for Kibana 9.0.0 | 9.0.0, 8.10.1 |
| 2.2.1 | Enhancement (View pull request) Add agent policy documentation | 8.10.1 |
| 2.2.0 | Enhancement (View pull request) Add transform to Data Exfiltration Detection package | 8.10.1 |
| 2.1.2 | Enhancement (View pull request) Improve package installation documentation | 8.9.0 |
| 2.1.1 | Enhancement (View pull request) Add query settings to ignore frozen and cold data tiers | 8.9.0 |
| 2.1.0 | Enhancement (View pull request) Add serverless support. | 8.9.0 |
| 2.0.0 | Enhancement (View pull request) Removing detection rules from the package | 8.9.0 |
| 1.0.3 | Enhancement (View pull request) Added security rules and anomaly detection jobs to detect exfiltration to external devices. | 8.5.0 |
| 1.0.2 | Enhancement (View pull request) Add the Advanced Analytics (UEBA) subcategory | 8.5.0 |
| 1.0.1 | Enhancement (View pull request) Added categories and/or subcategories. | 8.5.0 |
| 1.0.0 | Enhancement (View pull request) Added dashboard and changed the datafeed of anomaly detection jobs | 8.5.0 |
| 0.0.2 | Enhancement (View pull request) Move package to GA, change the package title, change ML job groups and detection rule tags | 8.0.0 |
| 0.0.1 | Enhancement (View pull request) Initial release of the package | — |